Here’s How Hackers Could Spoof Self-Driving Vehicles

Autonomous vehicles are still being developed by automakers and startups, albeit more slowly and with less capability than promised a decade ago.

As a result, academic researchers are still keen to explore the technology's possibilities, and a collaboration between UC Irvine and Japan’s Keio University has found some concerning vulnerabilities in lidar object detection.

Laser spoofing could cause autonomous vehicles to miss real objects or detect objects that aren't there, potentially leading to unpredictable and dangerous driving behavior.

The rhetorical heyday of autonomous vehicles has seemingly come and gone. The technology is still under development, with legacy manufacturers and commercial operators continuing to invest in self-driving development programs at a tempered rate.

Even so, the technology has spread far enough (and is perhaps strange enough, too) that academic researchers remain deeply invested in it. It is the future, after all, or at least that's what we were told. But the idyllic future of self-driving cars may not be so safe and sound, either, according to researchers at the University of California, Irvine.

That's because there is an insidious, potentially hazardous vulnerability baked into the technological recipe that makes autonomous vehicles possible.

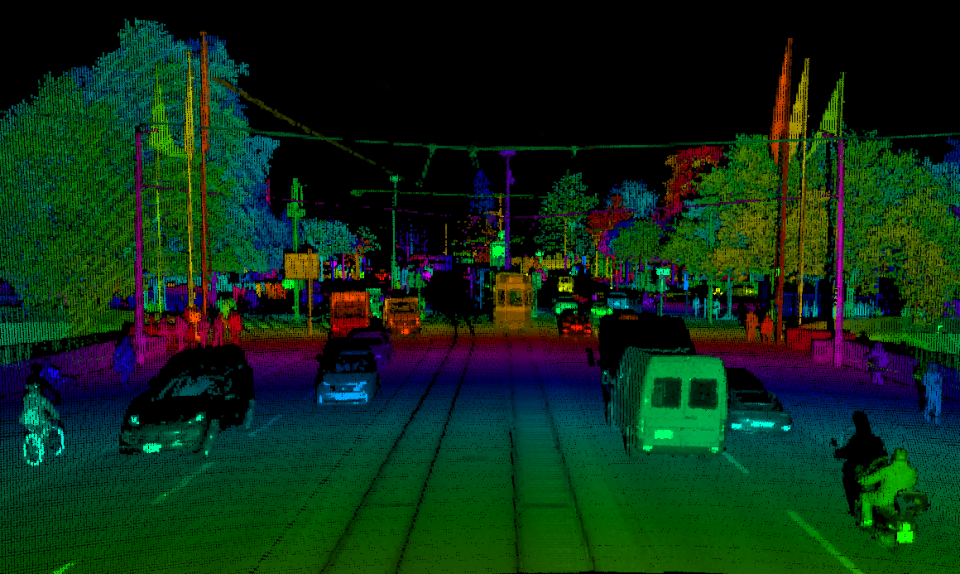

To add redundancy to their navigation and sensing systems, many autonomous vehicle developers use lidar, short for Light Detection and Ranging.

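Lidar systems fire pulsed lasers and measure how long each pulse takes to bounce back. Because light travels at a known speed, that round-trip time gives the distance to whatever reflected the pulse, and many thousands of these measurements per second build up a 3D map of the environment around the vehicle.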
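The distance arithmetic behind each lidar measurement is simple enough to sketch. The snippet below is a minimal illustration, assuming a single-return sensor and a forward-facing x axis; the function names and axis convention are hypothetical and not taken from any manufacturer's software.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting surface, given the pulse's round-trip time."""
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def round_trip_for_distance(distance_m: float) -> float:
    """Round-trip time a pulse needs to reach a surface and return."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


def to_point(distance_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Turn one range reading plus the beam's direction into an (x, y, z) map point.

    Hypothetical convention: x points straight ahead, y to the left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance_m * math.cos(el) * math.cos(az)
    y = distance_m * math.cos(el) * math.sin(az)
    z = distance_m * math.sin(el)
    return (x, y, z)


# A surface 30 m away returns the pulse after roughly 200 nanoseconds.
t = round_trip_for_distance(30.0)
print(f"{t * 1e9:.1f} ns round trip -> {distance_from_round_trip(t):.1f} m")
```

Repeating this for every pulse, each fired in a slightly different direction, is what produces the "point cloud" map the vehicle's software uses to detect objects.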

First developed in the 1960s, shortly after the invention of the laser, lidar is now standard on most autonomous programs, including Waymo and Cruise, where it backs up radar and camera-based monitoring systems.

However, because lidar depends on laser pulses returning accurately, computer scientists and electrical engineers at UC Irvine and Japan’s Keio University suspected that it could be fooled by malicious laser spoofing, and then put that theory to the test.

The results, drawn from tests on nine commercially available lidar systems, are concerning.

The team found weaknesses in both older and newer-generation lidar systems that could lead to abrupt, unnecessary, and dangerous driving behavior from autonomous vehicles.

Depending on how the developer has tuned the vehicle's responses, these attacks could trigger emergency stops or sharp swerves away from a detected object that doesn't actually exist.

This is a road-safety problem because nearby human drivers would correctly see that there is no hazard ahead and would not expect the sudden maneuver, potentially leading to collisions caused by the confusion. In testing, injecting fake objects was technically possible against both older and newer lidar systems, but the latest systems were nearly impossible to fool this way, thanks to randomized laser pulsing.
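Why randomized pulsing helps can be shown with a deliberately simplified toy model. This is not the researchers' method and not how any specific sensor works. It assumes an attacker who knows the sensor's nominal firing schedule and fires one pulse per shot, delayed so it mimics an echo from 50 m away; the range, listening window, and jitter values are hypothetical.

```python
import random

SPEED_OF_LIGHT = 299_792_458.0   # m/s
MAX_RANGE_M = 200.0              # hypothetical sensor range
# The receiver only listens this long after each shot (time for a 200 m round trip).
LISTEN_WINDOW_S = 2 * MAX_RANGE_M / SPEED_OF_LIGHT
JITTER_S = 1e-6                  # hypothetical maximum random offset applied to each shot


def spoofed_distances(num_shots: int, randomize: bool, fake_distance_m: float, seed: int = 0) -> list[float]:
    """Distances the receiver would report from the attacker's pulses alone (toy model)."""
    rng = random.Random(seed)
    # Against a predictable schedule, this delay makes the attacker's pulse
    # look like a genuine echo from fake_distance_m away.
    attacker_delay_s = 2 * fake_distance_m / SPEED_OF_LIGHT
    readings = []
    for _ in range(num_shots):
        # How far the actual shot is shifted from the nominal schedule the attacker tracks.
        jitter_s = rng.uniform(-JITTER_S, JITTER_S) if randomize else 0.0
        measured_delay_s = attacker_delay_s - jitter_s
        # Only light arriving inside the listening window is treated as a return.
        if 0.0 <= measured_delay_s < LISTEN_WINDOW_S:
            readings.append(SPEED_OF_LIGHT * measured_delay_s / 2)
    return readings


def spread(readings: list[float]) -> float:
    """Gap between the nearest and farthest fake return, in meters."""
    return max(readings) - min(readings) if readings else 0.0


fixed = spoofed_distances(100, randomize=False, fake_distance_m=50.0)
jittered = spoofed_distances(100, randomize=True, fake_distance_m=50.0)
print(f"fixed timing:      {len(fixed)} fake returns, spread {spread(fixed):.2f} m")
print(f"randomized timing: {len(jittered)} fake returns, spread {spread(jittered):.2f} m")
```

With fixed timing, every fake return lands at the same distance and looks like a solid object. With randomized timing, the fake returns scatter across the sensor's range, which makes them look more like noise that downstream software could plausibly discard.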

The researchers also tested an even more concerning possibility: laser attacks aimed at disabling an autonomous vehicle's object detection altogether. Instead of tricking the vehicle into avoiding an object that isn't there, these attacks hide real objects from it. The consequences aren't hard to imagine.

Before anyone panics, it's important to note that the researchers believe such an attack is unlikely to succeed in practice. Because autonomous vehicles are moving targets, keeping enough laser power trained on a vehicle's sensor, continuously, to overwhelm its randomized laser pulses would be logistically difficult and likely ineffective.

The researchers also tested defenses against both types of laser spoofing attack. In the case of fake objects, most new-generation lidar systems can already filter out these attacks on their own. Even so, the team recommends placing greater emphasis on laser pulse randomization to limit the effects of both attacks.

As with any academic study, there are limitations: the research team wasn't able to test the attacks on a car moving at high speed. Some lidar brands, such as Luminar, were also left out of the testing because of their longer-range capabilities, and Waymo's proprietary robotaxi lidar was excluded as well.

But why would anyone want to spoof an autonomous vehicle? Beyond a philosophical discussion of the evils of technology (consult The New Yorker or Jacobin for that), this kind of spoofing is already common in aviation, where pilots flying over regions of global conflict regularly report similar radar and GPS spoofing.

Skeptics abound in every industry, and some take their doubts too far. Regardless, this research gives autonomous-vehicle manufacturers a better blueprint for making their systems even more robust. We may not see fully autonomous cars just yet, but the work is still being done.

"This is to date the most extensive investigation of lidar vulnerabilities ever conducted," said lead author Takami Sato. "Through a combination of real-world testing and computer modeling, we were able to come up with 15 new findings to inform the design and manufacture of future autonomous vehicle systems."

What would make you trust an autonomous vehicle to drive you and your family around? Please share your thoughts below.

Yahoo Autos
