Google experiments with using video to search, thanks to Gemini AI

Google has already admitted that video platforms like TikTok and Instagram are eating into its core Search product, especially among younger Gen Z users. Now it aims to make searching video a bigger part of Google Search, thanks to Gemini AI. At the Google I/O 2024 developer conference on Tuesday, the company announced it will allow users to search using a video they upload combined with a text query to get an AI overview of the answers they need.

The feature will initially be available as an experiment in Search Labs for users in the U.S. in English.

This multimodal capability builds on an existing search feature that lets users add text to visual searches. First introduced in 2021, the ability to search using both photos and text combined has helped Google in areas that it typically struggles with — like when there's a visual component to what you're looking for that's hard to describe, or something that could be described in different ways. For example, you could pull up a photo of a shirt you liked on Google Search, and then use Google Lens to find the same pattern on a pair of socks, the company had suggested at the time.

Now with the added ability to search via video, the company is reacting to how users, particularly young users, engage with the world through their smartphones. They often take videos rather than photos, and they express themselves creatively using video as well. It makes sense, then, that they'd also want to use video to search at times.

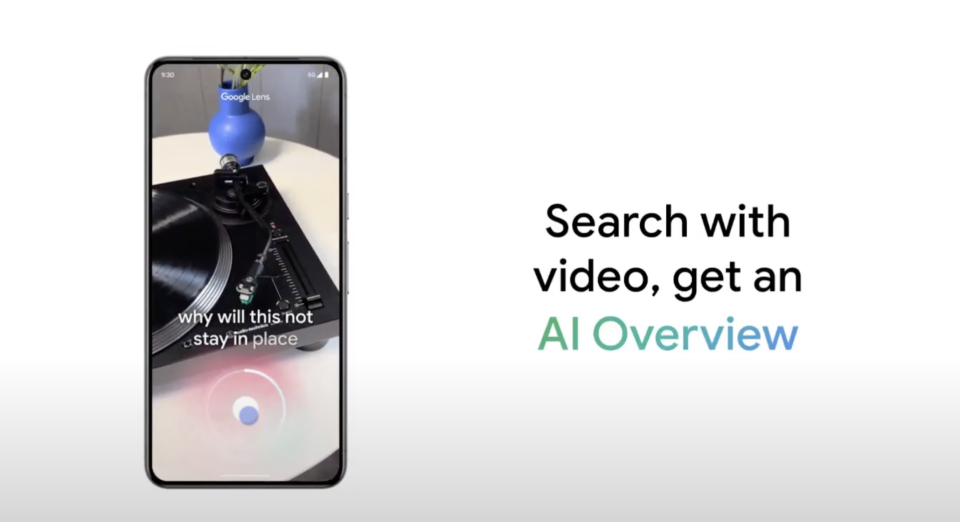

The feature allows users to upload a video and ask a question to form a search query. In a demo, Google showed a video of a broken record player whose arm would not stay on the record. The query included a video of the problem along with the question, "Why will this not stay in place?" (referring to the arm). Google's Gemini AI then analyzes the video frame by frame to understand what it's looking at and offers an AI overview of possible tips on how to fix it.

If you want to dive in deeper, there are also links to discussion forums, or you can watch a video about how to rebalance the arm on your turntable.

While Google demoed this ability to understand video content in conjunction with Google Search queries, it has implications in other areas as well, including understanding the videos on your phone, those uploaded to private cloud storage like Google Photos, and those publicly shared via YouTube.

The company didn't say how long the new Google Labs feature would be in testing in the U.S., or when it would roll out to other markets.
