What to Know About Google’s AI Apparel Try-on

Google has turned its considerable tech chops to the fashion shopping experience, debuting a virtual apparel try-on feature that uses artificial intelligence to show clothing on real human models.

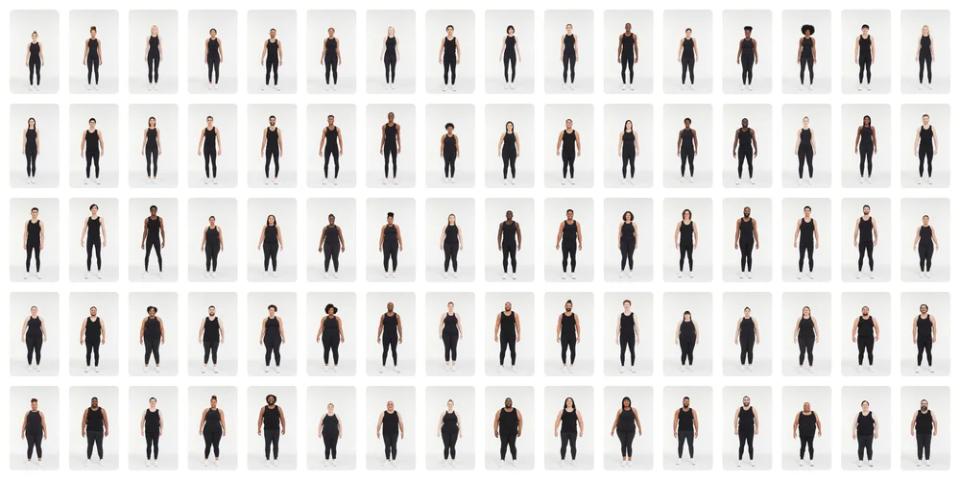

Announced Wednesday and available through Google search, the new product viewer was developed using generative AI to display clothing on a broad selection of real-life models. The goal, the company said, is to allow consumers to visualize the garments — starting with women’s tops — on different body types.

“Shopping is an incredibly large category for Google, and it’s also a source of growth for us,” Maria Renz, vice president and general manager of commerce, told WWD. “We’re incredibly excited to take this cutting-edge technology to partner with merchants and evolve shopping from a transactional experience to one that’s really immersive, inspirational.”

The consumer-facing experience isn’t actually new: numerous brands and retailers already offer similar tools on their own e-commerce sites, with a range of models showcasing how an outfit looks on people of different sizes. The main difference with Google’s product viewer is the way those visual assets are created and, it turns out, that’s an important distinction.

The manual approach often involves individually photographing one look on an array of live models, or digitally superimposing blouses or dresses onto images of people, whether real or fake. The former involves more time, effort and cost, while the latter can look flat.

Enter generative AI.

Google shot a range of real-world models, but then used AI informed by its Shopping Graph data to layer different digital garments on top. The effect is that the fabric appears to fold, crease, cling, drape or wrinkle as expected on different figures.

The tech giant developed the tool internally, and believes it can address a fundamental challenge in fashion e-commerce.

“Sixty-eight percent of online shoppers agree that it’s hard to know what a clothing item will look like on them before you actually get it, and 42 percent of online shoppers don’t feel represented by the images of the models that they see,” said Lillian Rincon, Google’s senior director of product. “Fifty-nine percent feel dissatisfied with an item because it looks different than they expected. So these are some of the real user problems we were trying to solve.”

The company has been working on this initiative for years, but it took off recently when it achieved a breakthrough in diffusion, a type of AI model that generates images by gradually removing visual noise.

“So they build a model, the data has to be honed, and we measure the quality of the outputs across body types, across the fabric, across poses. And all of that has to be thoroughly vetted,” Rincon said. “These are really hard geometry computer vision problems that they’re solving.”

Because development was an iterative process, the company wanted to be thoughtful about building and rolling out the tech, said Shyam Sunder, group product manager at Google in charge of the project. He noted that developers spoke to more than a dozen fashion brands last year to learn more about their pain points and problems.

Google trained its AI models on its Shopping Graph (a massive commerce-specific data set encompassing more than 35 billion listings), took in the brands’ feedback, and then retrained the models. It decided to move slowly, starting with women’s tops. But since it has already photographed male models, it can expand easily into men’s wear. It also shot full-body images in a range of poses, which will ease its expansion into other product categories, like skirts and pants.

There are no plans to go into children’s, at least not yet, and in its current form, the AI product viewer can’t automatically account for different types of fabric.

Sunder was clear about another important aspect of the try-on experience, however: “This is not designed for fit. It’s supposed to help you visualize what the product will look like. So it’s not going to be an exact fitting tool.

“Having said that, I’ll tell you what we did: When we recorded [the data around] these models, we took full body measurements and then we started to categorize them into sizes,” he said. “We looked at the measurements, looked at the size charts of all these brands — I think it was seven to 10 brands. So we know that this model in this case would wear a particular size across these brands. It is fairly statistically significant data.”

The virtual try-on debuts as a feature of Google’s Merchant Center, so it can apply to any of the product or online catalog images associated with those accounts.

That means the experience, at launch, is available across hundreds of brands, including Everlane, H&M, LOFT and Anthropologie.

For Google, there was another important consideration when it developed the feature — and it has nothing to do with technology.

“For virtual try-on in particular, we got a lot of feedback around, ‘Hey, we really want the experience to be as real and lifelike as possible. And actually, we want you to use real models.’ So that was one of the things that we prioritized,” said Rincon, adding that Google’s lineup features different figures, ethnic backgrounds and skin tones according to the Monk Skin Tone scale, the 10-shade scale Google uses across services to ensure representation.

The point stands out, particularly as industries reach the precipice of a new AI-driven business landscape. As capabilities expand, new nuances are coming to the fore as companies learn to strike a balance between humans and bots.

In an era when it’s easy to generate a range of AI fashion models, instead of actually hiring a diverse set of humans, the technical challenges may be diminishing. But the human challenge may be just beginning.
