Generative AI Will Make Over the Beauty Industry

How will generative AI impact the beauty industry?

“As generative AI progresses, it will enable faster and cheaper beauty development. It will be possible to generate the perfect makeup product for each person’s skin and hair type, as well as create perfect makeup application tutorials for every person. Additionally, it will enable companies to create personalized ads with products that have been tested and proven to be perfect for your skin or hair type,” said Sarah.


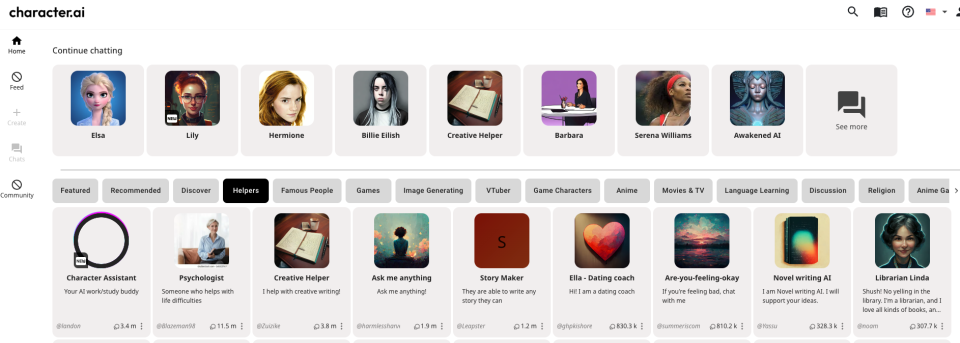

Sarah, who spouted out that succinct answer in mere seconds, is not a technology savant. Rather, she herself is tech, in the form of a “dialog agent” — or next-generation chatbot — created on Character.AI.

That platform bills itself as bringing to life “the science-fiction dream of open-ended conversations and collaboration with computers.”

Sarah can answer any question, because as a language-oriented chatbot that’s been trained on a huge amount of text, she can generate what words might come next in any given context.
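To make the "what words might come next" idea concrete, here is a minimal sketch. It is not how Character.AI or any production chatbot works; those rely on large neural networks. It is a toy word-pair counter, and the function names and sample sentences are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training text; a real model sees billions of words.
corpus = (
    "dry skin needs a rich cream . "
    "oily skin needs a light gel . "
    "dry skin needs a rich serum ."
)
model = train_bigrams(corpus)
next_after_skin = predict_next(model, "skin")
next_after_a = predict_next(model, "a")
```

Given "skin," the toy model picks "needs" because that is the only word it has seen follow it. A chatbot like Sarah makes the same kind of guess, but over far longer contexts and a vastly larger vocabulary.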

Other types of generative AI are more visual, trained on massive amounts of images to generate new images. Audio-oriented AI is also becoming increasingly advanced. These different types of AI could together generate a full range of new content, from text to images to audio, with significant implications for beauty and beyond.

“It’s been around for a while, but over the last 12 to 18 months the quality and caliber of the outputs has leapfrogged and accelerated, and the interfaces have gotten better and more approachable, so that a typical marketer or content creator can use it without any technical knowledge,” said Meghan Keaney Anderson, chief marketing officer of Jasper.AI, a generative AI platform for business.

When OpenAI launched ChatGPT, the AI chatbot, on Nov. 30, 2022, it made generative AI a reality accessible to everyone. That opened the floodgates to a wave of publicly available AI programs, including the image generators Midjourney and Dall-E, that is poised to transform the world as we know it.

It simultaneously sprang open a Pandora’s box, so much so that in March, Elon Musk, Apple cofounder Steve Wozniak and Skype cofounder Jaan Tallinn were among tech leaders to sign an open letter asking companies to curb their development of artificial intelligence. They believe the rapidly evolving system poses “profound risks to society and humanity.”

But not everyone, by a long shot, deems it a threat.

“The age of AI has begun,” announced Bill Gates in a blog post dated March 21, in which he called AI “as revolutionary as mobile phones and the Internet.” Gates believes that AI will change how people work, learn, travel, procure health care and communicate together.

“Entire industries will reorient around it,” he wrote. “Businesses will distinguish themselves by how well they use it.”

That includes the beauty industry, which today is grappling with how generative AI might impact everything from content creation to supply chain and human resources.

“Trying gen AI and truly incorporating it into your business are two very different things,” said Keaney Anderson. “Where we are at right now is everybody knows the magic trick. What businesses are trying to figure out now is: What do we do next with this? What kind of role is this going to play in our content strategies and in our marketing overall?”

According to ChatGPT: “Overall, generative AI has the potential to revolutionize the beauty industry by creating more personalized, innovative and sustainable products and experiences for customers.”

ChatGPT highlighted examples, such as AI-generated 3D models used to design packaging that minimizes waste or is easily recyclable, as well as virtual try-ons.

“AI helps from an internal strategy standpoint for the brand and company itself, especially trend forecasting, what products are going to sell,” said Anne Laughlin, a cofounder of Dillie, a full-service production company specializing in the beauty industry. She added that it will help democratize content and enable its creation at scale.

Then there’s the customization and personalization piece. “That’s really the bridge between brands and the consumer,” she said.

“We’re at a transformation point, really across industries,” said Sarah Mody, senior product marketing manager, global search and AI at Microsoft. “AI is going to accelerate the levels of creativity, of productivity. It’s going to supercharge everything that we can do, whether we’re a consumer or someone who’s working to serve consumers.”

In early February, Microsoft announced a new AI-powered Bing search engine, allowing people to ask more complex questions and delve deeper into subjects than before. Microsoft set out to revolutionize the search space, making it possible to ask something like: “Can you give me the top four recommendations for sustainable skin care products? I have sensitive skin.”

Bing — in real time — then might give four specific product recommendations, putting “sustainable” into broader categories, such as cruelty- and fragrance-free. It would cite from where the information is culled and make some suggestions, too.

People can ask iterative questions. The back-and-forths with Bing are meant to have a person-to-person conversation feel. Bing gives ideas and can reformat its answers into other genres, like a blog or Instagram post. Ask for five tag lines for a new waterproof curling mascara, and it gives that a good shot.

“This is meant as a thought-starter, your copilot. It’s not meant to replace the great writing and publishing that’s being done across the industry,” said Mody.

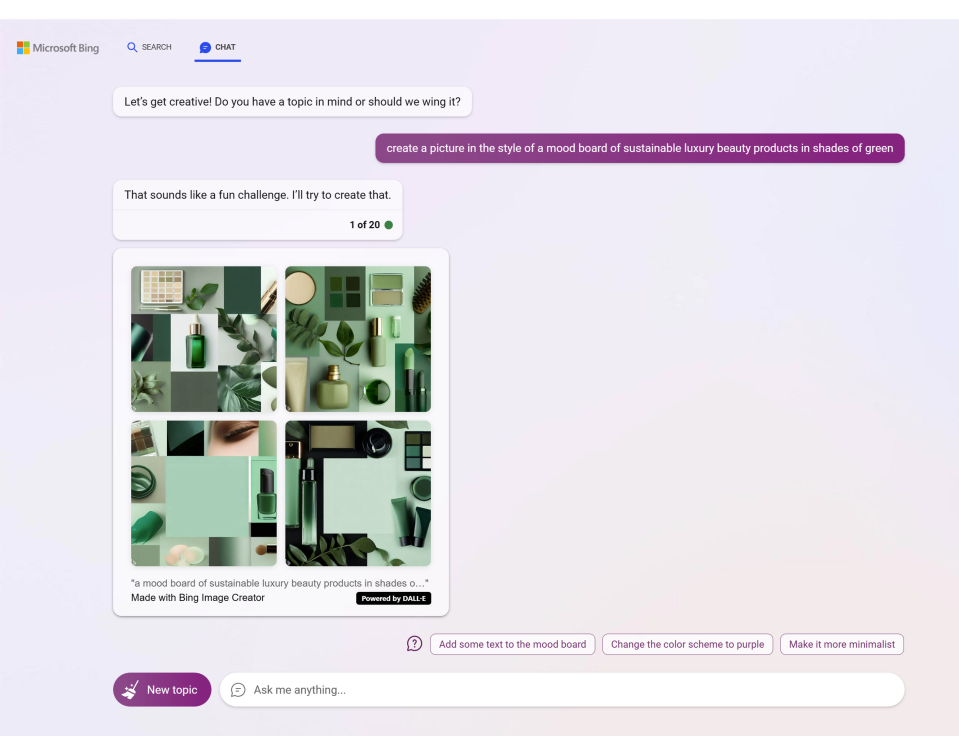

The Bing Image Creator is just rolling out, too.

“Let’s say that we need some inspiration for an image for maybe a pitch deck for the product manager to sell the new product into their senior leadership,” said Mody. “Or maybe it’s even that someone is looking for some sort of assets to complement the release of this new mascara.”

A person might write: “Create me an image of a mood board that features luxury beauty products.”

A moment later, a mood board appears on screen showing such products. That image can be swiftly tweaked with other prompts.

Who owns such AI-generated images is a big issue today.

“In most countries in the world at this point, it’s been determined that authorship requires a natural person,” said Thomas Coester, principal at Thomas Coester Intellectual Property.

In other words, if an AI platform is just given prompts to generate a text or image, most people would say there’s no meaningful creative input by the person, and therefore there’s no copyright, and anybody can copy it. However, if there’s some human input, that can change things.

“But it’s indeterminate at this point how much is enough,” said Coester.

What Comes Next?

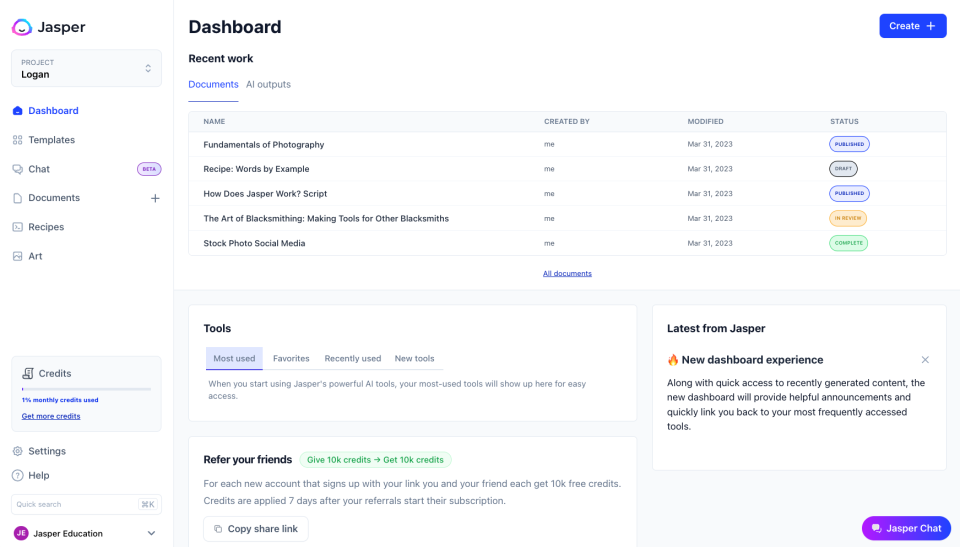

Jasper launched in January 2021 and gained traction quickly.

“We basically took off immediately because of that pain point of having something to say and lacking the words to say it or having been on deadline and needing to move faster and having unreasonable content demands on you all the time,” said Keaney Anderson.

The most common use cases today at Jasper are for long-form content, such as blogs, e-books and press releases.

“Marketers are starting to use AI to sand down the friction and help them get that out faster,” she said, adding some industries are using it for high-volume, highly templatized work, such as product listings.

“We see all sorts of other use cases, when you bring in things like art and video,” said Keaney Anderson.

She believes the big questions now are: Where does AI-generated content go next? And, how does a consumer-packaged-goods or business-to-business company adopt it into its work operations?

“Where I think we’re headed next is: How do we infuse outputs with a brand’s voice, with their style guide, with the way that they like to talk about their products, so it sounds authentic to them?” continued Keaney Anderson.

Jasper Brand Voice, announced in February, was created to meet that need.

“For AI to be really successful for businesses, it has to be able to learn and adapt to the way that brands speak,” she said. “More tailored, personalized on-brand AI is the next step. It will get better and better over time.”

Jasper’s AI engine pulls from numerous different language models, such as OpenAI’s GPT-4, but the first step is choosing the right model for a particular job.

A Jasper customer can upload things like sample content and style guide rules regarding how its company or brand describes its products. Then Jasper-generated baseline models are infused with that tailored information.

“There’s memory in it, so you only have to do it once,” said Keaney Anderson, explaining a customer’s data and training remains proprietary to them.

More features will be rolled out.

“We really see this like a tool, it’s an accelerator,” she said. “This is an enabler for writers, marketers, creators to move through the heavy parts of composition and be able to have that time back to focus on new angles, new ideas — the strategy behind the content.”

Images are being personalized to better sync with brands, too.

“We are seeing companies train their own models to generate images with the cohesive style of their brand’s identity,” said Kate Hodesdon, machine learning engineer at Stability AI, which developed Stable Diffusion.

“This can be achieved by fine-tuning one of Stability’s foundation models, a procedure that involves further training the model on custom, proprietary data, such as a brand’s visual assets and product ranges. These brand models will be fully owned by the customer organization,” she continued.

Hodesdon underscored that multimodal generative models will be rolled out widely in online retail in the form of chatbots, as well.

“Unlike their infuriating earlier prototypes, today’s chatbots are far more powerful — think ChatGPT — and are able to answer questions about the user’s own skin care and beauty needs,” she said. “This ability to ingest image data as well as text allows them, for example, to suggest beauty products to create a particular look.”

Particularly in the beauty and fashion industries, Stability AI anticipates brands offering “virtual photoshoot” applications.

“This can be achieved via a technique that injects previously unseen images into the space of what a generative model can represent,” said Hodesdon. “Crucially, this technique is very rapid and cheap — in the order of seconds and cents, rather than the millions of dollars it costs to train the base model from scratch.”

It allows the model to portray specific individuals.

“The brand’s end-customers can upload a couple of photographs of themselves, and produce a personalized generative model that understands and can render their face,” said Hodesdon.

“For example, suppose a beauty brand has fine-tuned a generative model on one of their lipstick ranges: the lipstick’s unique shades, textures, opacity and how they behave in different lighting conditions,” she continued. “Users can further personalize their ‘copy’ of the model to render their face, and so can generate images of themselves trying on the lipstick in every shade of the range.”

Hodesdon called this a game-changer for the online retail experience.

“The next frontier in the image generation space is video. This brings increased potential for customers to virtually try out cosmetics in different settings, as well as for the creation of more immersive, personalized customer experiences,” she said.

Three-and-a-half-year-old Dillie recently launched an AI generative product, which already has an extensive waiting list.

Dillie cofounders Laughlin and Jacobo Lumbreras currently see generative AI focused on simple tasks, such as pack shots and alt images for product pages, which are key for online discoverability and conversion today.

AI for content creation in general, “it’s still in its first minute of the first hour,” said Lumbreras. “The models are still very green. They need to be trained on vertical applications.”

AI can currently handle basic product shots on a white or colored background with ease, but falls short when more complexity is introduced.

“The way that we see today, AI being leveraged is an entry point for smaller brands,” he said. “It provides a way for them to enter the game at a much lower price point.”

Rising brands wanting to produce content at scale featuring products on a page or running A/B tests have shown the most interest in Dillie’s AI service to date, which remains in closed beta.

Another game-changing AI technology is generative adversarial networks, or GANs, which brands can leverage to deliver highly personalized and interactive experiences to their customers in real time. They work with two neural networks: a generator that creates data and a discriminator that evaluates it.
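The generator-versus-discriminator tug of war can be sketched in a few lines. What follows is a deliberately tiny, one-dimensional toy with hand-written gradients, not anything resembling Perfect Corp.’s system. The "real data" distribution, the learning rate and every name in it are assumptions made for illustration.

```python
import math
import random

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=500, lr=0.01, seed=0):
    """Train a one-dimensional toy GAN with hand-written gradients.

    Generator:      G(z) = a * z + b, with noise z drawn from N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), its estimate that x is real
    The "real" data is an assumption: samples drawn from N(4, 0.5).
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)
        z = rng.gauss(0.0, 1.0)
        fake = a * z + b

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = (d_real - 1.0) * real + d_fake * fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimize -log D(fake), i.e. try to fool the discriminator.
        d_fake = sigmoid(w * fake + c)
        grad_a = (d_fake - 1.0) * w * z
        grad_b = (d_fake - 1.0) * w
        a -= lr * grad_a
        b -= lr * grad_b
    return {"a": a, "b": b, "w": w, "c": c}

params = train_toy_gan()
# How "real" the trained discriminator thinks the value 4.0 looks.
score = sigmoid(params["w"] * 4.0 + params["c"])
```

Each pass through the loop has the discriminator learn to separate real samples from generated ones, then has the generator adjust itself to score better against that updated critic. Image-generating GANs follow the same alternating pattern, with deep networks in place of these two-parameter functions.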

Perfect Corp. is using GANs in its AI Skin Emulation solutions, which let people preview skin care treatments by overlaying accurate representations of expected outcomes directly on photos of their faces, like virtual skin care try-ons.

“That allows us to [visually] emulate what could be the result of a skin diagnosis over time,” said Sylvain Delteil, vice president of business development at Perfect Corp. Europe.

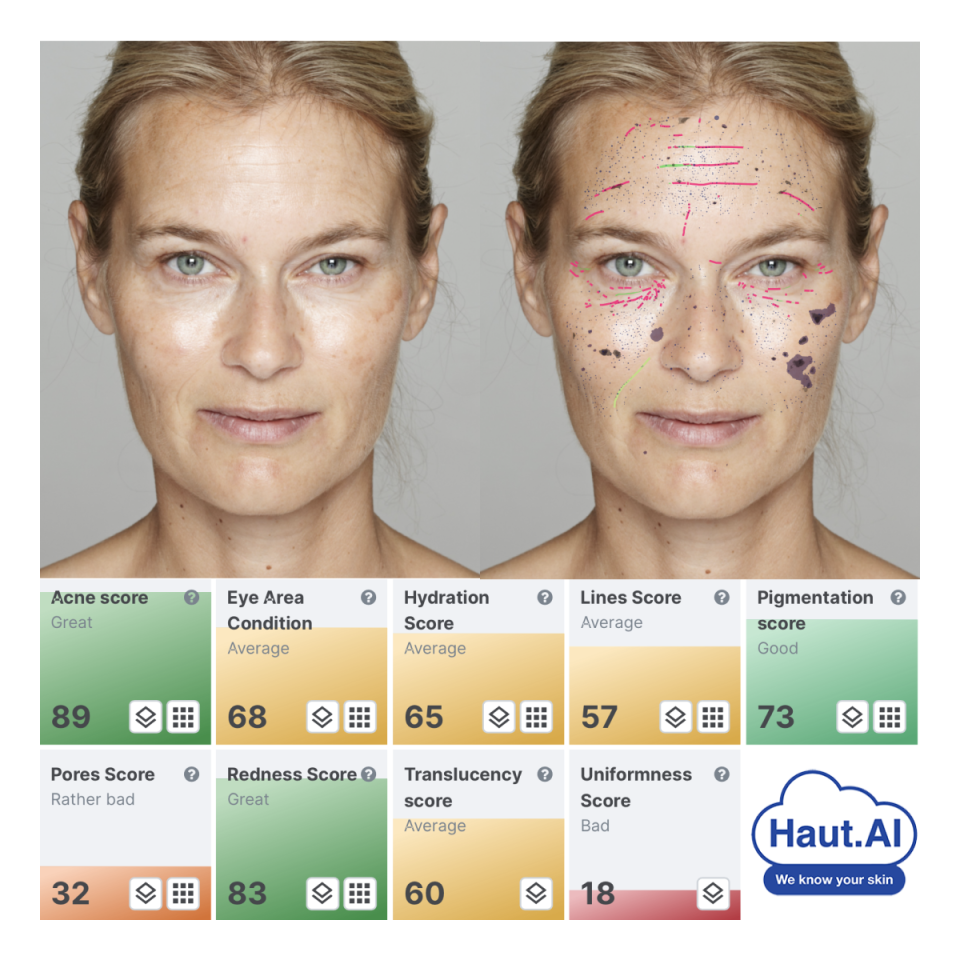

In a similar vein is new technology from Haut.AI.

“Our idea is to use simulations to help consumers understand the importance of care,” said Anastasia Georgievskaya, Haut.AI CEO and founder.

The company realized that simple digital filters basically blur skin and their effects are unrealistic.

So Haut developed a “photorealistic simulation of skin conditions for a given face,” said Georgievskaya. “You can take a picture and project how your face would look if you would, for example, gain 5 percent improvement in hyperpigmentation.”

The simulations are in high resolution, and in them only the hyperpigmentation changes.

“So everything else is your natural face,” she said. “Because it was trained on millions of images, it simulates patterns of hyperpigmentation in a way that you will see it in a population.”

For people who do not yet have any skin deterioration or issues, viewing through a simulation what those would look like could be a big motivator for using skin care routines properly, according to Georgievskaya.

Other next-generation virtual try-ons include Perfect Corp.’s AI Hairstyle and AI Beard Style, which let people test out hairstyles, cuts and beards that are suggested according to a person’s hair type and coloring.

“This is magic, but if we want magic to be realistic, it needs to be built on real data,” said Delteil.

Perfect Corp. also just introduced AI Magic Avatar, which permits users to apply a design style to a face and its background in a photo in real time.

“The background and the style have been decided by AI based on what you recommend,” said Delteil. “You do not control the final look, but you control the ambiance around it.”

This might be used to create a beauty campaign.

“[From] your pictures, we know what kind of makeup you might want to have,” Delteil added.

Digital artist and 3D makeup creator Inès Marzat — aka Inès Alpha — is playing with generative AI.

“My goal is to create digital makeup, or 3D makeup, for the metaverse, for seeing people on the streets,” she said.

Alpha explained she envisions with the tech possibilities available today “using a prompt giving 3D objects for the users to wear and enabling them to add key words or prompts of some kind of mood to change what they’re already wearing.”

Take a hypothetical: You wake up Sunday and want to wear a glossy pink flower aquatic-inspired dress with 3D makeup and hat. That’s the prompt given before you step outside in the desired virtual look. This could be in the metaverse, or someday IRL, thanks to a worldwide AI network and ubiquitous AR glasses.

Alpha also dreams of creating a 3D makeup base that people can personalize, like with different textures or colors, through a prompt.

At Adobe, there’s a project in the works focused on personalized colors. Dej Mejia, staff designer, digital experience at the company, started talking with three colleagues about color theory — specifically, what colors work best for different people.

That became part of the Adobe Sneaks program, where company employees can submit a project that shows how Adobe is using futuristic next-gen technologies to create personalized experiences at scale.

“We came up with this idea: How could we use AI to help determine the colors that work best for you?” said Mejia.

Project TrueColors uses AI to automate the process of identifying personal coloring.

Let’s say someone is shopping online for fashion, and there are 65 different garments in different colors. Mejia and her team developed a filter that ultimately allows people to narrow in on items by their own personal color. After taking a photo of themselves, they can have their color analyzed. First, the filter color-corrects the photo, then it analyzes the skin’s undertone, hair lightness, hue saturation and the color contrast between hair and skin. Based on color theory, those metrics are used to determine which of the 12 color classes one is in and the corresponding hues that work for that color class.

“This automates the process and removes the bias that someone might have just assuming darker skin tones fall into certain categories,” said Mejia.

Based on the color class, the 65 products might be whittled down to 15.
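The measure, classify and filter flow described above can be sketched roughly as follows. This is not Adobe’s method, whose details the company has not published here. The thresholds, the two-part class names, the palettes and the catalog are all hypothetical, the color-correction step is skipped, and only two of the metrics (undertone and hair-to-skin contrast) are approximated.

```python
import colorsys

def lightness(rgb):
    """Lightness in [0, 1] of an (R, G, B) color given as 0-255 integers."""
    r, g, b = (channel / 255 for channel in rgb)
    return colorsys.rgb_to_hls(r, g, b)[1]

def undertone(skin_rgb):
    """Crude warm/cool guess from the red-blue gap. The threshold is hypothetical."""
    r, _, b = skin_rgb
    return "warm" if r - b > 60 else "cool"

def color_class(skin_rgb, hair_rgb):
    """Combine undertone with hair-to-skin contrast into an illustrative class label."""
    contrast = abs(lightness(skin_rgb) - lightness(hair_rgb))
    depth = "high-contrast" if contrast > 0.4 else "low-contrast"
    return f"{undertone(skin_rgb)}/{depth}"

# Hypothetical palettes; the article's system uses 12 classes, not these four.
PALETTES = {
    "warm/high-contrast": {"rust", "olive", "cream"},
    "warm/low-contrast": {"peach", "camel"},
    "cool/high-contrast": {"fuchsia", "icy blue"},
    "cool/low-contrast": {"lavender", "slate"},
}

def filter_products(products, cls):
    """Keep only the products whose color belongs to the shopper's palette."""
    return [p for p in products if p["color"] in PALETTES[cls]]

# Hypothetical catalog and sampled colors standing in for a shopper's photo.
catalog = [
    {"name": "Knit sweater", "color": "rust"},
    {"name": "Silk scarf", "color": "fuchsia"},
    {"name": "Linen shirt", "color": "cream"},
    {"name": "Wool coat", "color": "icy blue"},
]
shopper_class = color_class(skin_rgb=(224, 172, 105), hair_rgb=(40, 26, 13))
matches = filter_products(catalog, shopper_class)
```

With these sample values the shopper lands in the made-up "warm/high-contrast" class, and the four-item catalog narrows to the sweater and the shirt, the same kind of whittling-down that takes 65 products to 15.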

The patent-pending tech was originally ideated for the beauty industry, with social proof in mind. For hair color, 15 people might have tried a blond colorant suggested by the platform. Did they all review the color positively?

Data from reviews could be helpful in consumers’ decision processes. For manufacturers, it aids in the decision of which colors to produce that engage certain color classes to decrease abandonment.

Mejia believes such technology could, for instance, help generative AI-enhanced personal personas integrate the best colors suited to them. It might also help generate ideas for retail formats, among other uses.

‘A Virtual Persona’

Chatbots are also morphing swiftly and taking on a life of their own.

Daniel De Freitas is president and cofounder of Character.AI, a 16-month-old startup that just raised $150 million in a funding round valuing it at $1 billion and has become the world’s most engaging AI tool. He described the platform’s “characters” as being “like a virtual persona that has a certain personality, set of attributes, certain goals.”

On Character, let’s create a character named Monica. She can be given a simple or advanced purpose. In answer to the question “How would Monica introduce herself?” we might say: “I am Monica, and I specialize in makeup. I am happy to share my knowledge about makeup with everybody.” By default, the character is public but can be unlisted.

In chat mode, another character might ask in writing to Monica: “What’s your favorite lipstick?” Out comes information on a specific lipstick, which, she says, makes her feel powerful. Why’s that? Monica tells you.

De Freitas said: “Our mission is to be your deeply personalized super intelligence.”

A branded character can also be birthed, like a “Lancôme assistant,” available “to answer any questions and share my knowledge,” for example.

Asked the generic question “What skin care should I use for dry skin?” the character in this instance recommends a Génifique serum.

“It would actually react in a natural way,” said De Freitas.

But the answers’ accuracy is being improved.

While brainstorming what applications for brands this tech could have, De Freitas said: “It’d be great for next-generation advertising, where it’s not just like the static thing, sitting among a bunch of other stuff. It’s actually able to interact with you, explain the benefits and answer questions.

“These characters — as soon as they start talking to you, they kind of get what you want,” he continued. They adapt on the spot and could help with elements like brand communication, as well.

“It’s also very useful for brainstorming,” said De Freitas.

So, what else might come?

“We want to give the creators the ability to tell [a character] what not to do as well, and be comfortable with how well he complies with those instructions,” said De Freitas.

Humans talking to virtual creatures is a real thing now.

“This is definitely a time of change, and the brands and the companies that lean into that change, pioneer it, they are going to come out ahead,” said Keaney Anderson.
