Flipping is much easier than walking


I wrote about half of last week’s Actuator on Wednesday in an empty office at MassRobotics after meeting with an early-stage startup. I’m not ready to tell you about them just yet, but they’re doing interesting work and have one of the wilder founding stories of recent vintage, so stay tuned for that. Also, shoutout to Joyce Sidopoulos for being a very gracious host to a reporter stuck between meetings in Boston for a few hours. I would leave a five-star Airbnb rating if I could.

From MassRobotics, I headed to Cambridge to have a nice long chat with Marc Raibert at the Boston Dynamics AI Institute. The newly founded institute is headquartered in the new 19-floor Akamai building, directly across the street from Google’s massive building and a stone’s throw away from the MIT lab where the seeds of the entire Boston Dynamics project were planted.

Akamai is currently leasing at least four of the building’s floors, owing to some really unfortunate timing. The construction project was completed toward the end of 2019, which meant the space had a few good months before all hell broke loose. In May 2022, Akamai announced that it was offering permanent work-from-home flexibility to 95% of its 10,000 staff. Obviously not everyone who is allowed to work from home does, but in the wake of the pandemic, it’s safe to assume that many or most will.

All the better for Raibert and the institute, I suppose. With a massive infusion of cash from Boston Dynamics' parent, Hyundai, the organization is ready to take on some of robotics' and AI’s toughest problems. But first, growth. Raibert tells me a sizable chunk of his day is spent interviewing candidates. There are currently something like 35 job listings with more on the way. And then there’s a listing under the title “Don’t see what you’re looking for?” with the description, “If you do not see a job posting for a role that matches your experience/interest, please apply here. We are still interested in hearing from you!”

Nice work if you can get it, as they say.

Currently the space looks like that of a standard startup, which could be a source of frustration for an organization looking to really lean into the laboratory setting. I get the sense that the next time I’m afforded the opportunity to visit, it will look very different — and be a lot more full. What it does have currently, however, is a whole bunch of Spot robots. There’s basically a doggy day care full of them off to one side. Raibert notes that the Spots were purchased and not given to the institute, as it and Boston Dynamics are separate entities, in spite of the name.

Along another wall are artists' conceptions of how robots might integrate into our daily lives in the future. Some are performing domestic tasks, others are fixing cars, while others still are doing more fun acrobatic activities. Some of the systems bear an uncanny resemblance to Atlas and others are a bit more out there. Raibert says his team suggested the scenarios and the artist took them in whatever direction they saw fit, meaning nothing you see there should be taken as insight into what their robot projects might look like, going forward. The tasks they’re trying to solve, on the other hand, may well be represented in the drawings.

Digging through some old MIT Leg Lab photos. Check out this shot of Boston Dynamics' Marc Raibert (right) on the set of the Connery/Snipes/Crichton flick, Rising Sun circa 1992. pic.twitter.com/DGtugbDaY1

— Brian Heater (@bheater) April 26, 2023

In front of these are several dusty robots from Raibert’s Leg Lab days that were “rescued” from their longtime homes in the MIT robot museum. That’s since taken me down a real rabbit hole, checking out the Leg Lab page, which hasn’t been updated since 1999 but features a pre-Hawaiian shirt Raibert smiling next to a robot and three dudes in hazmat suits on the set of 1993’s Rising Sun.

Also, just scrolling through that list of students and faculty: Gill Pratt, Jerry Pratt, Joanna Bryson, Hugh Herr, Jonathan Hurst, among others. Boy howdy.

Thursday was TechCrunch’s big Early Stage event at the Hynes Convention Center. For those who couldn’t make it, we’ve got some panel write-ups coming over the next week or so. I made sure the TechCrunch+ team published one of mine an hour or two ago, because I wanted to talk about it a bit here. The panel wasn’t explicitly about robotics, but we covered a lot of ground that’s relevant here.

The role of the university post-research is a topic I’ve been thinking and writing a fair bit about over the past few years. It was a subject I was adamant we devote a panel to at the event, given that Boston’s apparently home to a school or two. My coverage has tended to approach the subject from the point of view of the schools themselves, asking simply whether they’re acting as a sufficient conduit. The answer is increasingly yes. Not to be too crass about it, but they’ve historically left a lot of money on the table there. That’s been a big piece of historical brain drain, as well. Look at how much better CMU and Pittsburgh have gotten at keeping startups local.

Pae Wu, general partner at SOSV and CTO at IndieBio, talks about "How to Turn Research Into a Business" at TechCrunch Early Stage in Boston on April 20, 2023. Image Credits: Haje Kamps / TechCrunch

You would be hard-pressed to find someone with a more informed perspective on the other side of the equation than Pae Wu, general partner at SOSV and CTO at IndieBio. A bit of context is probably useful here. Last week’s event primarily featured talks from VCs targeted at an audience of early-stage investors. Wu spent part of her talk discussing how “recovered academics” can be successfully integrated into a founding team. But not every professor wants to embrace the “recovered” bit.

I should say that I’ve experienced plenty of scenarios where professors appear to walk that line well. Just looking at the Berkeley Artificial Intelligence Research Lab, you’ve got Ken Goldberg and Pieter Abbeel, who are currently on the founding teams for Ambi Robotics and Covariant, respectively.

“There are some sectors where it can work very well to have members of your founding team who remain in academia,” Wu says. “We see this all the time in traditional biotech and pharma. But in other types of situations, it can become, frankly, a drag on the company and problematic for the founders who are full-time. This is a very tough conversation that we have quite frequently with some of our committed academics: Your stake in this company isn't really aligned with your time commitment.”

Wu points to scenarios where the professor remains in a leadership role, while going on autopilot for the day-to-day. The issue, she explains, is when they frequently pop their heads in for suggestions divorced from the very time- and resource-intensive work of running a company.

“Academics really love to say, ‘Well, I'm actually really good at multitasking, and therefore I can do this,’” she explains. “The majority of the time, the academic founder will come in and out and provide their sage wisdom to the full-time founders who have committed their lives and risked everything for this company. It starts to create challenges in getting the company to move forward. It creates interpersonal challenges as well for the founding team, because you have to be a special kind of saint to say, 'I'm working 100 hours a week, I don't make any money, and my whole financial future rests on the success of this company. And this guy keeps coming in to tell me some random thing that he read on the Harvard Business Review.'”

Wu also offers a word of caution about university involvement at the early stage:

If you want to be a VC-backed startup, beware the helpful university stuff. It might feel very, very comfortable. They do a great job of incubating companies, but I don’t know that they do a great job of accelerating companies. You get a lot of ‘free stuff,’ and [people often say] 'Oh, this is a convenient EIR (entrepreneur in residence) program to work with, and this guy started 15 companies before.' But this guy started 15 companies in sectors that have absolutely nothing to do with what you’re trying to do. You would absolutely be better off finding somebody who actually cares about your mission, isn’t paid by the university, but is incentivized to care deeply about your company.

You can read (and agree or disagree with) the full article over on TechCrunch+.

Image Credits: Tufts

Friday, coincidentally, turned out to be university day for me. I didn’t manage to see much research on my last trip to Boston, so I made sure to carve out a day this time. The morning kicked off with a trip to Tufts. The last time I visited the school was in 2017. Human-Robot Interaction Lab director Matthias Scheutz told the video crew and me about training robots to trust.

Scheutz and I sat down again for a separate — but related — discussion. Before taking up the professor role, he received a joint PhD in cognitive science and computer science from Indiana University and another PhD in philosophy from the University of Vienna. It’s an unlikely combination that informs much of the work he does. With all of the ethical and moral detours during our conversation, I couldn’t stop thinking that it would have made a good podcast.

At the heart of the interview is the team’s effort to develop a shareable knowledge set between robots. In a subsequent demo in the nearby lab, one team member asks a Fetch research robot to assemble a screw caddy, but it doesn’t have the requisite knowledge. The human coworker then instructs the robot on how to perform the process, step by step: “Execute while learning,” as they put it. Once it gains the knowledge, it runs through around 100 simulations in quick succession to determine the probability that it will perform the task correctly.

Another team member asks a nearby Nao robot whether the Fetch knows how to perform the tasks. The two robots communicate nonverbally. Fetch runs through simulations again and shares the information with the Nao, who then communicates it to the human. The demo offers a quick shorthand for how networked robots can pass along the information they learned through a kind of network in the cloud — or a sky net, if you will.
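For the curious, here is a minimal sketch of what "run around 100 simulations to estimate the odds of success" can look like. It is a plain Monte Carlo estimate, not the Tufts team's actual code: the function names and the per-step reliability numbers are hypothetical, and it assumes each step of the task succeeds or fails independently.

```python
import random


def simulate_attempt(step_success_probs, rng):
    """Simulate one attempt; it succeeds only if every step succeeds."""
    return all(rng.random() < p for p in step_success_probs)


def estimate_success_probability(step_success_probs, n_trials=100, seed=0):
    """Estimate the chance of completing the task as the fraction of
    simulated attempts that succeed (a simple Monte Carlo estimate)."""
    rng = random.Random(seed)
    successes = sum(
        simulate_attempt(step_success_probs, rng) for _ in range(n_trials)
    )
    return successes / n_trials


# Hypothetical per-step reliabilities for a four-step assembly task.
caddy_steps = [0.98, 0.95, 0.99, 0.97]
estimate = estimate_success_probability(caddy_steps)
print(f"Estimated success probability: {estimate:.2f}")
```

With only 100 trials the estimate is coarse (it can only move in steps of 0.01), which is roughly the trade-off you would expect when the robot has to answer quickly.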

I’ve excerpted some of the more interesting bits from our conversation below.

On Wednesdays, we use fetch to locate screws 🤖 #TryTech pic.twitter.com/Vbx3T2X6rV

— TechCrunch (@TechCrunch) April 27, 2023

[I'm told the above is a Mean Girls reference.]

Conversation with Matthias Scheutz

Distraction vs. overload

If I can detect that you’re distracted, maybe what I need to do is get your attention back to the task. That’s a completely different interaction you want from one where you’re overloaded. I should probably leave you alone to finish the thing. So, imagine a robot that cannot distinguish between the distraction state, which should be very engaged and get the person back on task, compared with the person being overloaded. In that case, if the robot doesn’t know it, the interaction might be counterproductive for the team. If you can detect these states, it would be very helpful.

Shared mental models

One of the videos we have up on our web page shows you can teach this one robot that doesn't know how to squat, how to squat, and the other one can immediately do it. The way to do it is to use the same shared mental model. We can’t do this. If you don't know how to play piano, I cannot just put that in your head. That’s also part of the problem with all these deep neural nets that are very popular these days. You cannot share knowledge at that level. I cannot implant in your neural network (this goes for people, too) what I have been trained over many years of practicing piano. But if you push the abstraction a little bit higher, where you have a description that is independent of how it's realized in the neural network, then you can share it.
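To make the "push the abstraction a little bit higher" idea concrete, here is a rough sketch under my own assumptions, not the lab's architecture. A skill is described only as an ordered list of named primitives, and each robot maps those names to its own implementation. The `Skill` and `Robot` classes and the primitive names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Skill:
    """An abstract description: a name plus an ordered list of primitive names."""
    name: str
    steps: Tuple[str, ...]


@dataclass
class Robot:
    name: str
    # Maps an abstract primitive name to this robot's own way of doing it.
    primitives: Dict[str, Callable[[], str]]
    skills: Dict[str, Skill] = field(default_factory=dict)

    def learn(self, skill: Skill) -> None:
        """Accept a skill only if this robot can realize every step."""
        missing = [step for step in skill.steps if step not in self.primitives]
        if missing:
            raise ValueError(f"{self.name} lacks primitives: {missing}")
        self.skills[skill.name] = skill

    def perform(self, skill_name: str) -> list:
        """Run each abstract step through this robot's own implementation."""
        skill = self.skills[skill_name]
        return [self.primitives[step]() for step in skill.steps]


squat = Skill("squat", ("bend_knees", "straighten_knees"))

robot_a = Robot("A", {
    "bend_knees": lambda: "A: servo knees to 90 degrees",
    "straighten_knees": lambda: "A: servo knees to 0 degrees",
})
robot_b = Robot("B", {
    "bend_knees": lambda: "B: lower hydraulic legs",
    "straighten_knees": lambda: "B: raise hydraulic legs",
})

robot_a.learn(squat)                    # one robot is taught the skill
robot_b.learn(robot_a.skills["squat"])  # the other receives the same description
print(robot_b.perform("squat"))
```

The point of the sketch is that the thing being shared is the description, not the weights or motor commands, so the second robot can use it immediately as long as it has its own version of each primitive.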

On multitasking

The hot stove is a good example. The moment [the touch is issued by the brain], you may already realize it’s on, but it’s maybe too late. There’s a level of abstraction where I can intervene consciously and cognitively with the sequencing and I can alter things. There’s a level of discrete actions where sequencing occurs, also automatically. For example, years ago, when I was at Notre Dame, I drove to campus, but I didn’t want to go to campus. But I was thinking about work, and so this automatic system of mine drove me, and the next moment I was in a parking lot. I can talk to you and prepare food, no problem. But it needs to be rehearsed. If the activity is not rehearsed, you can’t do it. We have a layer in the [robotic] architecture that does that automatic sequencing. That’s the level at which we can share more complex actions.

Your Roomba doesn’t know you exist

There’s a fine line, where you need to have these mechanisms in place, because otherwise harm will follow if they’re not in place. You get this with autonomous cars; you get this in a lot of contexts. At the same time, we don’t want to prevent all robots, because we want to use them for what they’re good for — what they’re beneficial for. They need to have more awareness of what’s happening, what they’re being used for. It’s not a problem with a Roomba because it doesn’t even know you exist. They have no notion of anything. But if you look at YouTube, you will find videos of Roomba shoving pets under the couch. They have no awareness of any person or any thing in the environment. They don’t even know they’re doing a vacuuming task. It’s as simple as that. At the same time, you can see how much more massively sophisticated they would have to be in order to actually cognitively comprehend all the eventualities that could happen in an apartment.

When robots say no

Years ago, we had this video of a robot saying no to people. In some cases, people may not be aware of the state the robot is in, give it an instruction, and if the robot carried out that instruction, it would be bad for the robot or a person. So the robot needs to say no. But it can’t just say no and nothing else. It needs to tell you why not. If the robot just doesn’t do it, that’s not good. You won’t trust that robot to do something again.

Editorial interjection here. The “no” video caused a bit of a stir for its perceived violation of Asimov’s second law, “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.” Obviously there’s a clause baked into that second law that says the robot should not obey an order if doing so will injure a human (first law).

But Scheutz doesn’t stop there. He paints a scenario in which a robot is carrying a box as it was told to do. Suddenly a different human tells the same robot to open a door, but doing so would cause it to drop — and damage — the box. In this case, the “no” isn’t about protecting a human, but it is about following orders. Immediately following the second order effectively negates its ability to carry out the first.

It’s here, then, that the robot is required to say, “No, but don’t worry, I got you once I get this box where it needs to go.”

Conversation with Pulkit Agrawal

Image Credits: MIT CSAIL

After our chat, I headed back to Cambridge for my first time back at MIT CSAIL since the pandemic started. Due to a last-minute change of plans, I wasn’t able to reconnect with Daniela Rus, unfortunately. I did, however, get a chance to finally speak to Electrical Engineering and Computer Science (EECS) assistant professor Pulkit Agrawal. As it happened, I had recently written about his team’s work to teach a quadruped to play soccer on difficult surfaces like sand and mud.

When I arrived, I commented on the Intel RealSense box in front of me. I’ve been looking for any reason to discuss the move away from LiDAR I’ve been seeing among roboticists of late, and this was a great in. Again, some highlights below.

On why more roboticists are moving away from LiDAR

Right now, we are not dealing with obstacles. If [the ball] does go away from the robot, it does need to deal with it. But even dealing with obstacles, you can do it with RGB. The way to think about this is that people wanted depth because they could write programs by hand of how to move the robot given a depth recording. But if we are going to move toward data-driven robotics, where I’m not writing my own program, but computers are writing it, it doesn’t matter whether I have depth or RGB. The only thing that matters is how much data I have.

On grippers

Why do people use suction cups? Because they’re like five bucks. It’s broken, you replace it. Even to get them to use a two-finger gripper, it’s $100. Even Amazon doesn’t want to put more sensors. Suppose you go to a company and say, "I want to add this sensor, because it might improve performance." They’ll ask how much the sensor is going to cost and multiply it by the volume of how many robots they have.
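The back-of-the-envelope math Agrawal describes is easy to sketch. The $5 and $100 unit prices come from his comments; the fleet size below is purely a hypothetical number picked for illustration.

```python
def fleet_cost(unit_cost_usd, fleet_size):
    """Total hardware cost of putting one component on every robot."""
    return unit_cost_usd * fleet_size


FLEET_SIZE = 10_000  # hypothetical fleet size, for illustration only

suction_total = fleet_cost(5, FLEET_SIZE)    # suction cups at about $5 each
gripper_total = fleet_cost(100, FLEET_SIZE)  # two-finger grippers at $100 each

print(f"Suction cups: ${suction_total:,}")
print(f"Two-finger grippers: ${gripper_total:,}")
print(f"Difference: ${gripper_total - suction_total:,}")
```

At that assumed scale, a $95 difference per robot becomes a $950,000 line item before replacements are counted, which is why a sensor that "might improve performance" is a hard sell.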

On “general purpose” robots

Suppose you have one target, say, lifting boxes and putting them on the conveyer belt, and then there was pick and place and then loading them on the truck. You could have constructed three different robots that are more efficient at three different tasks. But you also have different costs associated with maintaining that different robot and integrating those different robots as part of your pipeline. But if I had the same robot that can do these different things, maybe it costs less to maintain them, but maybe they’re less performant. It comes down to those calculations.

When I made reference to the Mini Cheetah robots the team has been working with, Agrawal commented, “It actually turns out flipping is much easier than walking.”

"Speak for yourself," I countered.

He added, “What is intuitive for humans is actually counterintuitive for machines. For example, playing chess or playing go, machines can beat us. But they can’t open doors. That’s Moravec's paradox.”

TC+ Investor Survey

Image Credits: Bryce Durbin/TechCrunch

As promised, another question from our recent TechCrunch+ investor survey.

Following the lead of robot vacuums, how long will it take before additional home robot categories go truly mainstream?

Kelly Chen, DCVC: Medium-term, at best. Alphabet’s folding of Everyday Robots goes to show how even large resources cannot make home service robots viable today. The home is a highly unstructured environment. Going beyond the relatively simple exceptions that existing vacuum robots need to learn, the rest of the home is much more difficult. Additional tasks are in 3D, which may mean more sensors, actuators, manipulation, different grippers, force control, and much less predictability. We are not there yet in making this reliable and economical.

Helen Greiner, Cybernetix Ventures: Five years for lawn mowers in the USA, based on vision-based navigation (combined with GPS) coming out. No setup wires and better UIs will drive demand. The network effect (neighbors copying neighbors) will drive demand after initial adoption. Thirteen years for a humanoid helper, based on Agility and X1 cost reduced and smarter.

Paul Willard, Grep: It has already started. Labrador robots help mobility-challenged folks fetch and carry things around the house. So medication is always handy and taken on time, grocery delivery from the front door can be carried to the fridge and pantry to be put away, and dinner can be carried from the stove to the dining table while the customer has their hands full perhaps with a walker, a cane or a wheelchair. There will be more as the hardware that makes up the robots gets less expensive and more capable, simultaneously.

Cyril Ebersweiler, SOSV: Most robotics companies eyeing B2C have moved to B2B over the years. The time, scale and amounts of money required to launch such a brand these days isn't exactly what VCs are looking for.

News

Image Credits: Robust.AI

Had a chance to catch up with Rodney Brooks again (we have to stop meeting like this). This time he was joined by his Robust.AI co-founder and CEO, Anthony Jules. The startup’s work was shrouded in mystery for some time, leading to this fun headline from IEEE. We’ve got a much better handle on what the team does now: It makes a robotic cart and software management system for the warehouse.

Brooks was understandably a bit hesitant to jump back into the hardware game. Jules told me:

We started off trying to be a software-only company. We started looking at the space and decided there was a great opportunity to really create something that was transformative for people. Once we got excited about it, we did very standard product work to understand what the pain points are and what it was that would really help people in this space. We had a pretty clear vision of what would be valuable. There was one day I literally said to Rod, ‘I think I have a good idea for a company, but you’re going to hate it, because it means we might have to build hardware.’

Late last week, Robust announced a $20 million Series A-1, led by Prime Movers Lab and featuring Future Ventures, Energy Impact Partners, JAZZ Ventures and Playground Global. That follows a $15 million Series A announced back in late 2020.

A Veo Robotics setup in a human-robot co-working environment. Image Credits: Veo Robotics

This week, Massachusetts-based Veo Robotics announced that it has closed a $29 million Series B. That includes $15 million from last year and $14 million from this year. Safar Partners and Yamaha Motor Ventures participated, but the most notable backer is Amazon through its $1 billion Industry Innovation Fund.

It’s easy to view these investments as a kind of trial run for potential acquisitions, though the company has largely denied that it uses the money as an on-boarding process. That said, it is certainly a vote of confidence and, at the very least, probably an indication that the company will begin piloting this technology in its own workspaces — assuming it hasn’t already.

“The newest generation of intelligent robotic systems work with people, not separately from them,” says co-founder and CTO Clara Vu. “Unlocking this potential requires a new generation of safety systems — this is Veo’s mission, and we’re very excited to be taking this next step.”

Veo develops a software safety layer for industrial robots, allowing them to operate alongside human co-workers outside of the cages seen on many floors.

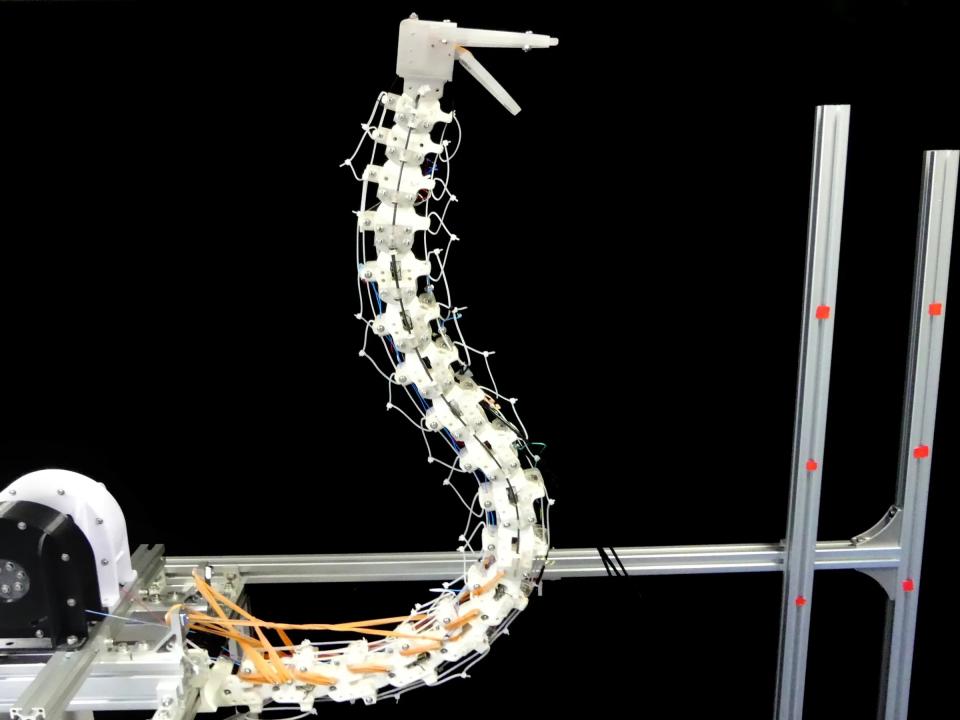

Image Credits: University of Tokyo

We all remember the Digit predecessor Cassie, right? OSU’s robot took strong cues from ostriches for its bipedal locomotion. RobOstrich (robot ostrich. Slow clap) is more concerned with the upper half of the world’s largest flightless bird.

“From a robotics perspective, it is difficult to control such a structure,” the University of Tokyo’s Kazashi Nakano told IEEE. “We focused on the ostrich neck because of the possibility of discovering something new.”

The system features 17 3D-printed vertebrae, with piano wire serving as muscles. The system is actually a long robotic manipulator that offers a flexible compliance that other systems lack.

Let’s do some more robot job listings next week. Fill out this form to have your company listed.

Image Credits: Bryce Durbin / TechCrunch

Take your head out of the sand already. Subscribe to Actuator.