Am I falling for ChatGPT? The dark world of AI catfishing on dating apps

Talk to anyone currently engaged in the fool’s errand that is attempting to find love online, and they’ll tell you how unimaginably bleak the experience is. Endless swiping; matches who never write back; matches who engage in days of conversation before disappearing off the face of the planet; strings of promising dates that end in an abrupt and unexplained ghosting. And now a new threat is set to make the whole rigmarole even less enjoyable.

Picture the scene: you’ve matched with someone on an app who actually seems like a normal, attractive person. You start messaging and, instead of leaving you hanging for days at a time, they always reply quickly. They ask questions – praise be! – and seem genuinely interested in you and your life. When asked to send more pictures and even a video, they swiftly comply. They even agree to a video chat. It almost feels too good to be true… But it must be real, right? How could it not be?

Artificial Intelligence – that’s how. The unstoppable rise of AI, which is developing at a pace that’s alarming for anyone who’s watched too many films set in a “dystopian future”, already has massive implications for the way we live. Tools like Midjourney and DreamStudio can create entirely new artwork based on a loose description; ChatGPT is tempting a generation of students to hand over their essay-writing to a computer instead of doing it themselves. And the advancements in this kind of tech could have a dark impact on the world of online romance.

On the one hand, some people will be actively seeking out a facsimile of a relationship – searches for “AI Girlfriend” saw a 2,400 per cent increase after ChatGPT was initially released, according to Google Trends data. The monthly search volume for this now averages 49,500. Then there are the single people who may be tempted to use AI to “enhance” themselves on dating apps. Perhaps they aren’t even thinking of it as catfishing, but simply as a way to level the playing field in a competitive market. “AI catfishing is increasing and is likely to rise further,” warns Dr Jessica Barker MBE, the author of Hacked: The Secrets Behind Cyber Attacks and co-founder of cybersecurity firm Cygenta. “The reasons for this can range from people simply seeking to improve their chances of success on the apps to deeply manipulative and damaging scams.”

Catfishing – the act of taking on another identity online – has long been an issue for people making connections via social media or dating apps. According to a 2020 survey, 41 per cent of US adults said they had been catfished at some point in their life – an increase of 33 per cent since 2018 – while 27 per cent of online daters in the UK reported being catfished.

But, while previously there were trusted techniques you could rely on when trying to identify if someone you were talking to was the real deal – running a reverse image search on them, say, or insisting on a live video call – the development of AI could put paid to that. Between January 2023 and February 2024, there were 187,160 Google searches for terms related to “AI for dating profile”, with a 212 per cent increase in search volume across 18 similar terms. Meanwhile, there’s been a 2,000 per cent increase in the term “AI dating profile generator free”.

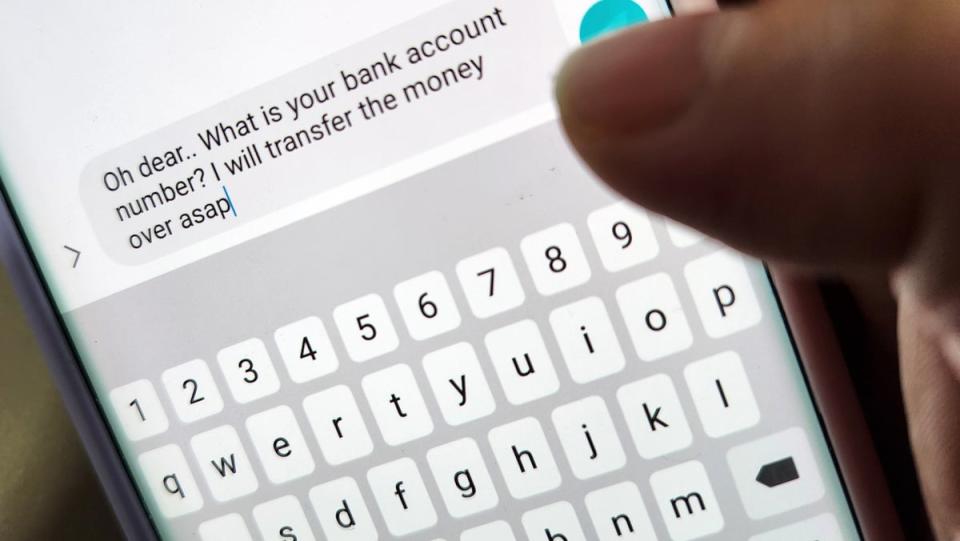

On the more damaging end of the spectrum, there’s a very real danger of a rapid increase in romance fraud, whereby people are tricked into parting with their cash by an online suitor. “Fraudsters use AI catfishing to add speed, scale and sophistication to their romance fraud,” explains Barker. “Scammers can manipulate many more targets, much quicker and easier, with the aid of AI.” AI catfishes can be harder to spot, she warns, with the “accessibility of deepfake technology making previous advice about checking the legitimacy of a potential love interest less reliable”.

The use of large language models (LLMs) – machine learning models, such as the one behind ChatGPT, that can comprehend and generate human-language text – allows scammers to generate multiple dating profiles and conduct numerous conversations at once, while effectively eliminating any language barriers. But the real game changer is the development of AI video tools such as Sora.

Unveiled by OpenAI in February, this revolutionary software can produce realistic footage of up to a minute long based on a simple text prompt, and adheres to users’ instructions on style as well as subject matter. More incredible still – and more worrying when it comes to catfishing potential – it’s also able to create a video based on a still image or extend existing footage with new material. So when that potential paramour sends you a video of themselves to verify their identity, who’s to say they haven’t simply fed a photo (real or itself AI-generated) into the program?

“Sora is a double-edged sword,” says relationship expert Steve Phillips-Waller from online personal development resource A Conscious Rethink. “It demonstrates great AI progress, but on the other hand, it significantly amplifies the risk of catfishing, a deceit that already plagues the online dating world. The data clearly suggests people already use ChatGPT and AI to falsify their online profiles.” He encourages online daters to be “extra careful when the technology is released to validate they are dating who they think they are”.

Sometimes, AI-manipulated profiles are easy to spot. Over-the-top, cheesy language is one tell-tale sign; so-called “hallucinations” in images are another, such as extra fingers on people’s hands. But that’s not always the case, especially as the rapid pace of development produces increasingly sophisticated technology. Thankfully, dating apps are at least aware of the issue, and in some cases are stepping up to combat fake profiles or improve safety for users. Bumble, for example, announced the introduction of an AI “Deception Detector” in February to help squash catfishing by blocking scam accounts and fake profiles. Tinder has recently released a new security feature, Share My Date, which lets users share their date plans (location, date, time, and a photo of their match) directly from the app with friends and loved ones.

As AI continues to advance at speed, vigilance is key. Barker advises looking out for “hallucinations” in pictures or videos, or dating profiles that contain bios you’ve seen before. “If you always get immediate responses to every message you send, this can be the sign of a bot,” she adds. “It’s a red flag if your online date never wants to speak on the phone or have a live video chat, or if they don’t share candid photos of themselves – but be aware that with AI tools, all of this becomes easier for scammers to fake, so it cannot be relied on fully.”

She also recommends paying attention to the stories your online date shares with you; if they never want to talk about themselves and only want to talk about you, this could be a warning sign, as could someone who always calls you by pet names rather than your actual name. This is “a tactic used by scammers so that they don’t have to keep track of the names of their multiple targets”.

Love bombing – whereby someone showers you with intense and extreme displays of attention and affection – is another tactic often used by scammers to accelerate the relationship, alongside the offer to send you gifts, “which can make you feel that you owe them something”. Gift offers can also be used as a ploy to manipulate you into sharing personal information that can lead to identity fraud. But the biggest giveaway, if someone is using AI catfishing to defraud you, is that at some point talk will inevitably turn to money.

“They often spend a long time working on targets, making the relationship feel real,” says Barker. “But one way or another, it will ultimately come down to trying to separate you from money, whether by outright asking for it, making you feel sympathy for them (putting you in a position where you offer, thinking it’s your idea) or sharing details of a supposed ‘investment opportunity’ with you (that they are actually running as a scam).”

Further protect yourself by ensuring you don’t connect your dating profiles to other social media platforms, recommends Phillips-Waller. “It might be tempting, for convenience reasons, to just log in on a dating app with your Facebook account or your main Google account, or link to your Instagram. However, it is strongly recommended not to do this, as it’s a potential privacy hazard. Just think about it – you’re connecting a profile intended for friends and family to a platform where you’ll contact tons of people you don’t know.” He also advises against using pictures on your dating profile that can be found elsewhere online. “A reverse image search allows someone to easily use your dating profile pictures against you,” he warns. “This way, they’ll easily find out your personal information, even if you didn’t include it on your dating profile.”

When it comes right down to it, avoiding an AI catfish isn’t so different from avoiding the myriad time-wasters that plague dating apps, promising the world and then never delivering. However well messaging is going, however good a connection feels, if the other party always finds excuses not to meet up IRL – you might as well be dating ChatGPT.