How AI image generation changed art and design forever in 2023

AI image generation was everywhere in 2023. Text-to-image diffusion models had burst onto the scene in 2022, but this was the year they started to become mainstream, and designers had to take notice. Of major significance for creatives, Adobe launched its own AI model, Firefly. Existing AI image generators also made leaps in the quality and reliability of their output, adding the ability to handle text and logos.

Apps proliferated, as did the amount of AI-generated content online: some of it good, much of it terrible. Inevitably, brands and media outlets also began experimenting with a technology that is quickly becoming unavoidable. As we reach the end of the year, we look back at the major milestones in AI art and AI image generation in 2023 and consider where things are going. See our pick of the best AI image generators for a review of the tools available.

1) Adobe launches Firefly

One of the biggest developments in AI image generators for designers was the launch of Adobe Firefly in March. Until then, the main AI image generators had been new names. Their UIs weren't always the easiest or most intuitive to use, and designers interested in trying them would have to seek them out. There were also serious questions about copyright because the models were trained on datasets scraped from the internet without the permission of artists.

The launch of Adobe Firefly made AI image generation readily accessible to designers who already use Creative Cloud, Adobe's suite of industry-standard apps for everything from graphic design to photo and video editing. It contains useful tools for designers: as well as generating images from text prompts, it can generate vectors and text. The tool is also more palatable for professional use because the model was trained exclusively on public domain images and assets from Adobe Stock. Adobe even offers indemnification in its enterprise version.

Shortly after the launch, Adobe began rolling out Firefly-powered tools in its other programs, including Generative Fill and Generative Expand in Photoshop. Next came Text-to-Vector Graphic for Illustrator, Lens Blur for Lightroom, and automatic transcription and text-based search in Premiere Pro. Adobe plans to introduce higher-resolution image generation, video, 3D and more.

2) AI images become more realistic

Diffusion models like DALL-E 2, Stable Diffusion and Midjourney had already made a big leap in reliability and quality thanks to their training on massive datasets, but 12 months ago there were still a lot of quirks in their output, making many generations barely usable without substantial editing. Human figures had unconventional numbers of fingers, and text would come out as a nonsense scribble.

This year, almost all of the big AI image generators advanced massively, coming very close to producing images realistic enough to fool anyone. DALL-E 3, Google’s Imagen 2 and, most recently, Midjourney V6 can all produce much more realistic images than their predecessors, from human hands with the right number of fingers to convincing detail and texture in skin, lighting and more.

If viral images like the one of Pope Francis in a puffer jacket were already fooling people early in the year, we now have little chance of immediately identifying an AI-generated deepfake.

3) New AI models can handle text and logos

Another big problem with the output of text-to-image generators up until this year was their inability to render words. Text from their training data would appear as nonsensical scribbles, even if it was a brand logo (or the Getty Images watermark, cough). This meant that many generations would include random scribbles on signs and objects that had to be edited out to make them usable (although KitKat and Heinz both made clever use of the phenomenon in amusing ad campaigns).

At the same time, it wasn't possible to put phrases in text prompts and have the AI include them in the generated image. If you wanted legible text on an AI-generated image, you had to add it manually by editing the image in another program. But that's starting to change. DALL-E 3, Google Imagen 2 and now Midjourney V6 can all recognise and render prompts asking them to generate text in images. It's still a bit hit-and-miss, but they're only going to get better. Google is even plugging logo generation as a feature of Imagen 2 for brands. I wouldn't recommend that a business entrust its branding to AI, but the tool can generate abstract emblems and marks.

4) New AI apps appear by the day

As well as quality, 2023 brought quantity. AI image generator apps seemed to spring up by the day, many of them based on Stable Diffusion or DALL-E. Beyond standalone text-to-image generators, AI image generation is now available directly in the Bing search engine (responsible for that Pixar pet poster trend). There are AI drawing apps like Drawww, which can turn doodles into finished pieces, text-to-3D apps like Masterpiece X - Generate, and such creepy delights as the AI Human Generator and even an app that lets you have a conversation with a photo.

Many of these apps have few professional applications, but they're making AI-generated imagery commonplace on social media. This could play out in a couple of different ways. On the one hand, it's normalising the use of 'non-human' visuals for a range of purposes, which could make more brands consider using AI image generation. On the other hand, AI saturation could mean that non-AI imagery starts to command a premium, which could lead more creators to consider adding Content Credentials metadata to their work.

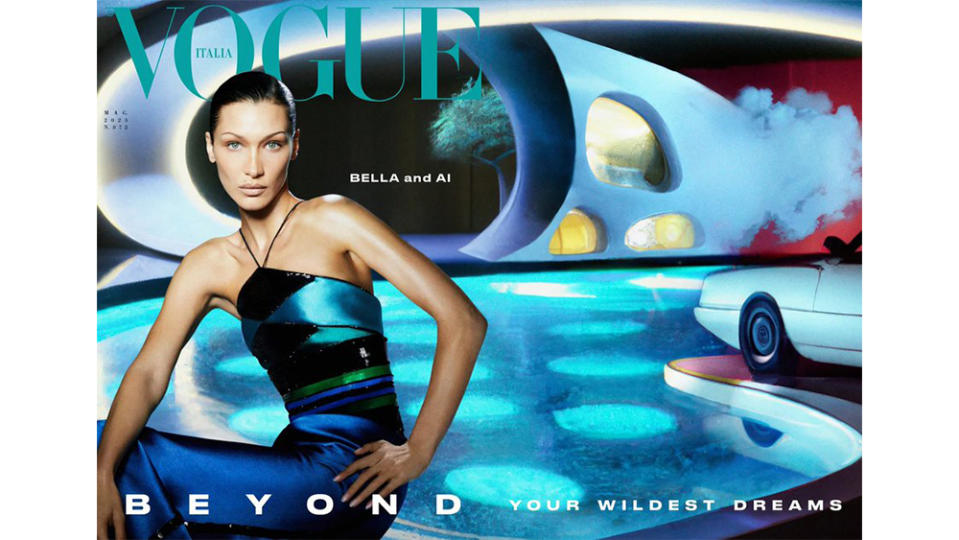

5) AI images appear on magazine covers

With AI image generation everywhere, brands began to pay serious attention. Levi's was one of the first to announce it was exploring the use of AI models (all to improve inclusion and user experience, obviously), and AI-generated 'photos' and 'co-creations' made it onto magazine covers, generating some controversy in the process.

A Barbie-inspired cover for the July edition of Glamour in Bulgaria featured an AI-generated Lisa Opie, and Vogue Italia ran an AI cover shoot with Bella Hadid featuring “real shots on imaginary backgrounds created by DALL-E”. Photographer Carlijn Jacobs took the photos and combined them with AI imagery, assisted by AI artist Chad Nelson. The idea was that DALL-E 2 would only be used to generate the backgrounds, but "unexpected turns" led to AI-generated imagery in the foreground too on some shots. Jacobs described the project as a "fascinating interplay between human creativity and artificial imagery". Despite the controversy, the shoot showed the kind of role that AI image generation could play in photography and design in the future.

6) Big design and photography platforms add AI image generation

It wasn't only Adobe that contributed to the mainstream explosion of AI in design in 2023. Several major design and photography platforms added text-to-image generators to their products. Canva, which is popular among small businesses, launched Magic Studio in October. It includes tools to instantly convert designs into a range of formats, remove backgrounds or expand images, and morph words and shapes into colours, textures, patterns and styles. In the stock photography sector, both Getty Images and Shutterstock added text-to-image tools. The former can generate images from scratch, while the latter can be used to remix photos from the existing Shutterstock library.

7) The world learns how to prompt

Last year, writing prompts for AI image generators was considered some kind of arcane art. You might recall people using the term 'prompt engineer' as if it might become an actual job description. Now, the kind of phrasing that works in prompts has almost become common knowledge.

If you're still struggling, some of the big players are working on ways to help. DALL-E 3 is integrated with ChatGPT, which can help write prompts, and Google has created a game, Say What You See, to help people practise (but is the AI training us, or are we training the AI?).

As we enter 2024, it feels like AI image generation is reaching maturity. It might seem that the technology can't go much further in terms of photorealism, although we will surely still see some advances there. But in 2024, many of the improvements may come from new, more flexible editing tools and easier, more accessible UIs that allow creatives and non-creatives alike to incorporate AI tools into their workflows. Midjourney is moving from Discord to a dedicated website, providing a more intuitive experience that's likely to appeal to the less tech-savvy and which could allow one of the most powerful AI models to add new features.

What seems certain is that creatives of all kinds will need to at least be aware of the developments in AI image generation and how it's starting to affect their discipline. We'll be trying to keep up with it all, reporting on new developments and updating our roundup of AI art tutorials.