How to make Adobe Firefly generative AI work for you, not against you

As a middle-aged photographer and videomaker, I’m no stranger to the evolution of technology. As a kid in the 1970s, I visited my dad’s office and saw my first photocopier. I quickly realized that if I drew a spaceship, I could copy it multiple times and create an entire space fleet in a few minutes! The concept of a machine helping me be creative blew my mind!

As a videomaker in the 1980s, I needed to lug a large camera that was plugged into an even larger (and heavier) video recorder. I then had to edit the footage by copying clips from tape to tape. Fast forward to the 21st century and I’m much happier that I can shoot superior-quality HD footage on a palm-sized smartphone and use the phone’s non-linear editing app to make and share shows that other people can view on their smartphones! When it comes to being creative, I’ve always happily adopted advancements in technology, and I view AI-generated imagery as another welcome development in a long line of change.

To me, AI is a tool I can enjoy using to complement my creative photography, not to replace it. Sure, I could type a description such as ‘angry man shouts at his computer with steam coming out of his ears’ into an AI image generator, and it would generate a complete picture in seconds. However, if you try this text prompt in Adobe Firefly you get a cartoonish and unconvincing image that’s a crude mix of CGI and cheesy clip art. I’ve got no interest in creating a stand-alone image using AI. I do, however, find AI-generated imagery a useful tool when it comes to my hobby - toy photography!

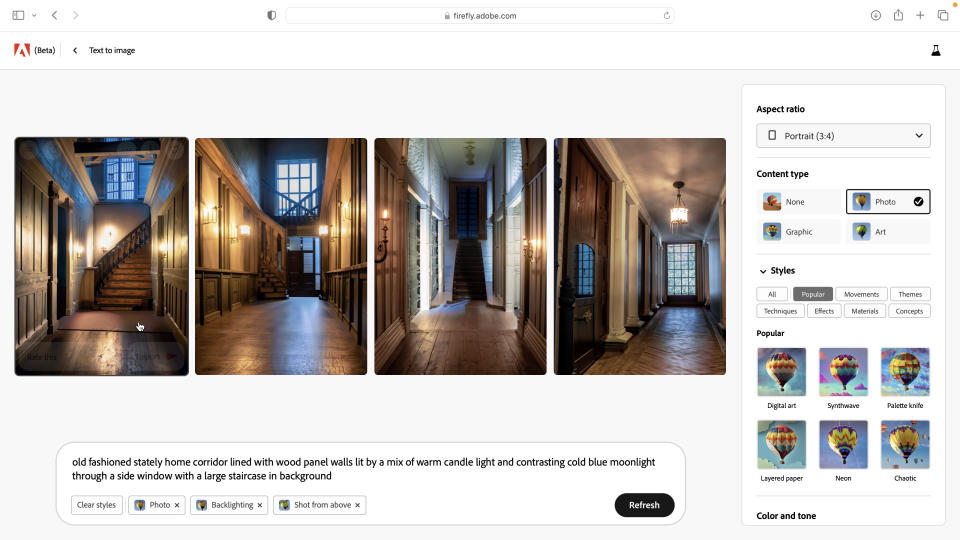

As a lifelong Doctor Who fan, I’ve recently enjoyed taking pictures of real-world locations and compositing Doctor Who toys into those places. I get creative satisfaction from making the lighting on the toys in my home studio match the lights in the location to produce a convincing composite. Occasionally I’ll fancy adding a background that I don’t have a photo of, such as the hall of a spooky Victorian house or a Martian landscape. By typing a description of a location into a text field in Adobe Firefly, I can summon up a suitable background in around 20 minutes. This involves tweaking the text prompt and changing drop-downs to adjust the computer-generated image’s lighting and camera angle so that they suit the lighting and composition of my action figure shot.

Once I’ve downloaded my AI-generated image, I place it in the background of my shot and blur it to create a shallow depth-of-field effect. The AI background is secondary to the toys, rather than being the main focus of the image, so I feel that I still ‘own’ the finished piece. Plus, I enjoy the creative process of refining the description and tweaking settings in Adobe Firefly to help its AI component get the look I’m after. Like my dad’s office photocopier, AI-generated imagery is yet another marvelous technological springboard for my creativity, not a replacement for it.

George Cairns can be found playing with his toys over at Instagram as @scifitoyphoto

Interested in toy photography? Find out how to shoot model toy photography that looks like real life with this guide. Or if you want some more inspiration, check out this LEGO Eiffel Tower shoot, or send your Star Wars toys to a galaxy far, far away.