This tool makes AI models hallucinate cats to fight copyright infringement

Artists and computer scientists are testing out a new way to stop artificial intelligence from ripping off copyrighted images: “poison” the AI models with visions of cats.

A tool called Nightshade, released in January by University of Chicago researchers, changes images in small ways that are nearly invisible to the human eye but look dramatically different to AI platforms that ingest them. Artists like Karla Ortiz are now “nightshading” their artworks to protect them from being scanned and replicated by text-to-image programs like DeviantArt’s DreamUp, Stability AI’s Stable Diffusion and others.

“It struck me that a lot of it is basically the entirety of my work, the entirety of my peers’ work, the entirety of almost every artist I know’s work,” said Ortiz, a concept artist and illustrator whose portfolio has landed her jobs designing visuals for film, TV and video game projects like “Star Wars,” “Black Panther” and “Final Fantasy XVI.”

“And all was done without anyone’s consent — no credit, no compensation, no nothing,” she said.

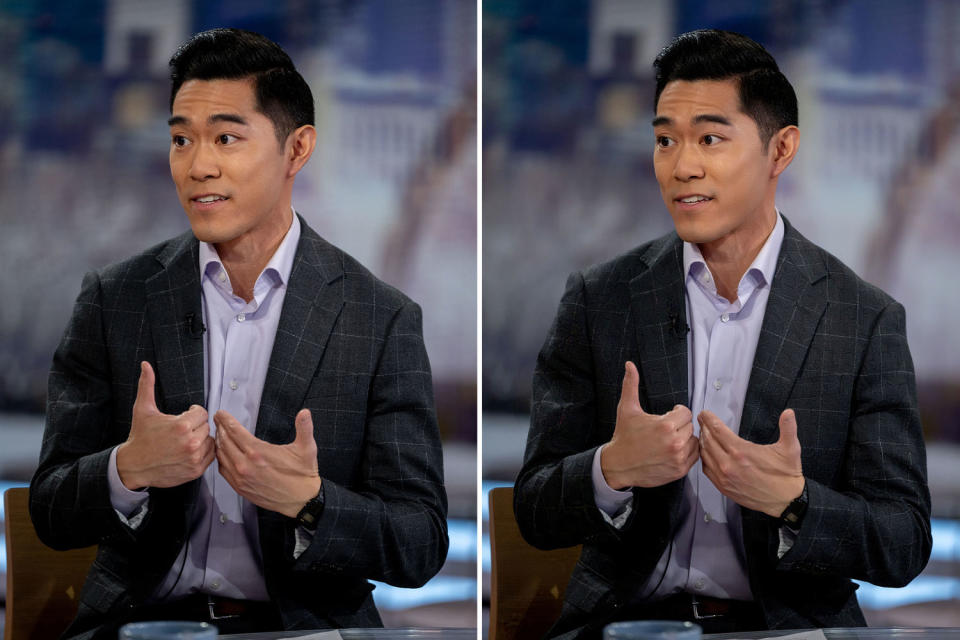

Nightshade capitalizes on the fact that AI models don’t “see” the way people do, research lead Shawn Shan said.

“Machines, they only see a big array of numbers, right? These are pixel values from zero to 255, and to the model, that’s all they see,” he said. So Nightshade alters thousands of pixels, a drop in the bucket for standard images that contain millions of pixels but enough to trick the model into seeing “something that’s completely different,” said Shan, a fourth-year doctoral student at the University of Chicago.

In a paper set to be presented in May, the team describes how Nightshade automatically chooses a concept with which to confuse an AI program responding to a given prompt: it embeds “dog” photos, for example, with an array of pixel distortions that read as “cat” to the model.

After the researchers fed 1,000 subtly “poisoned” dog photos into a text-to-image AI tool and requested an image of a dog, the model generated something decidedly un-canine.

Nightshade’s targeted distortions don’t always go feline, though. The program decides case by case which alternative concept it wants its AI targets to “see.” In some instances, Shan said, it takes just 30 nightshaded photos to poison a model this way.
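Shan’s point about scale is easy to check. The Python sketch below, which assumes NumPy, treats an image the way he describes, as an array of values from 0 to 255, and nudges a few thousand pixels in a 3-million-pixel image. It is a hypothetical illustration of proportion only, not Nightshade’s method: according to the researchers, Nightshade picks its distortions so they read as a specific different concept to the model, whereas the `perturb_sparse` helper here (an invented name) changes randomly chosen pixels by random small amounts. The image size, number of pixels changed and maximum shift are all assumptions chosen for the example.

```python
import numpy as np


def perturb_sparse(image: np.ndarray, n_pixels: int, max_delta: int, seed: int = 0) -> np.ndarray:
    """Hypothetical helper: nudge `n_pixels` randomly chosen pixels of an
    H x W x C uint8 image by at most `max_delta` intensity levels per channel.
    This is NOT Nightshade's algorithm; these changes are random, not targeted."""
    rng = np.random.default_rng(seed)
    h, w, c = image.shape
    out = image.astype(np.int16)  # widen so values can leave 0..255 before clipping
    flat_idx = rng.choice(h * w, size=n_pixels, replace=False)  # distinct pixel positions
    rows, cols = np.unravel_index(flat_idx, (h, w))
    delta = rng.integers(-max_delta, max_delta + 1, size=(n_pixels, c), dtype=np.int16)
    out[rows, cols] += delta  # positions are distinct, so no pixel is updated twice
    return np.clip(out, 0, 255).astype(np.uint8)  # back to the 0-255 range the model sees


# A 2,000 x 1,500 image has 3 million pixels; a flat mid-gray one keeps the demo simple.
image = np.full((1500, 2000, 3), 128, dtype=np.uint8)
poisoned = perturb_sparse(image, n_pixels=5_000, max_delta=4)

diff = np.abs(poisoned.astype(np.int16) - image.astype(np.int16))
changed = np.count_nonzero(diff.any(axis=2))  # pixels where any channel moved
total = image.shape[0] * image.shape[1]
print(f"{changed} of {total:,} pixels changed ({changed / total:.2%}), "
      f"max shift {diff.max()} levels")
```

With these illustrative numbers, about one pixel in 600 moves, and by at most 4 of 255 intensity levels. Random noise like this would not be expected to fool a model; the sketch only shows how small a share of an image a change of “thousands of pixels” can be.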

Ben Zhao, a computer science professor who runs the University of Chicago lab that developed Nightshade, doesn’t expect mass uptake of the tool anywhere near a level that would threaten to take down AI image generators. Instead, he described it as a “spear” that can render some narrower applications unusable enough to force companies to pay up when scraping artists’ work.

“If you are a creator of any type, if you take photos, for example, and you don’t necessarily want your photos to be fed into a training model — or your children’s likenesses, or your own likenesses to be fed into a model — then Nightshade is something you might consider,” Zhao said.

The tool is free to use, and Zhao said he intends to keep it that way.

Models like Stable Diffusion already offer “opt-outs” so artists can ask that their content be left out of training datasets, and a spokesperson for Stability AI noted it was among the first companies to participate in a “Do Not Train” registry. But many copyright holders have complained that the proliferation of AI tools is outpacing their efforts to protect their work.

The conversation around protecting intellectual property adds to a broader set of ethical concerns about AI, including the prevalence of deepfakes and questions about the limits of watermarking to curb those and other abuses. Although there is growing recognition within the AI industry that more safeguards are needed, the technology’s rapid development — including newer text-to-video tools like OpenAI’s Sora — worries some experts.

“I don’t know it will do much because there will be a technological solution that will be a counterreaction to that attack,” Sonja Schmer-Galunder, a professor of AI and ethics at the University of Florida, said of Nightshade.

While the Nightshade project and others like it represent a welcome “revolt” against AI models in the absence of meaningful regulation, Schmer-Galunder said, AI developers are likely to patch their programs to defend against such countermeasures.

The University of Chicago researchers acknowledge the likelihood of “potential defenses” against Nightshade’s image-poisoning, as AI platforms are updated to filter out data and images suspected of having gone through “abnormal change.”

Zhao thinks it’s unfair to put the burden on individuals to shoo AI models away from their images to begin with.

“How many companies do you have to go to individually to tell them not to violate your rights?” he said. “You don’t say, ‘Yeah, you should really sign a form every time you cross the street that says, “Please don’t hit me!” to every driver that’s coming by.’”

In the meantime, Ortiz said she sees Nightshade as a helpful “attack” that gives her work some degree of protection while she seeks stronger protections in court.

“Nightshade is just saying, ‘Hey, if you take this without my consent, there might be consequences to that,’” said Ortiz, who is part of a class-action lawsuit filed against Stability AI, Midjourney and DeviantArt in January 2023 alleging copyright violation.

A court late last year dismissed some of the plaintiffs’ arguments but left the door open for them to file an amended lawsuit, which they did in November, adding Runway AI as a defendant. In a motion to dismiss earlier this year, Stability AI argued that “merely mimicking an aesthetic style does not constitute copyright infringement of any work.”

Stability AI declined to comment on the litigation. Midjourney, DeviantArt and Runway AI didn’t respond to requests for comment.

This article was originally published on NBCNews.com