The Paris Attacks Were Tragic, but Cryptography Isn’t to Blame

We have met the enemy, and it is math.

That’s the clear takeaway from the latest round of outrage over gadgets and apps that use encryption to ensure no third parties can see your data.

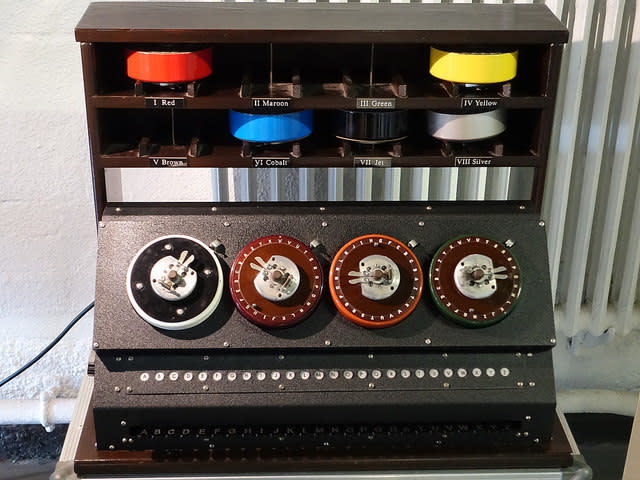

The ‘Bombe,’ a machine invented by British mathematician Alan Turing to decrypt the German Enigma code in WWII (Photo: Garrett Coakley/Flickr).

Is privacy protected by complex cryptographic equations — what we rely on to shield our online banking from snoops and to keep the data on our phones safe from thieves — a bad thing? Well, apparently it is when terrorists might use those same tools.

Emphasis on “might”: Despite initial speculation about the plotting behind the Paris attacks requiring encrypted communications, some of the murderers responsible used plain old text messaging.

Crypto in the cross hairs

A lack of supporting evidence (see also the groundless panic over Syrian refugees in the U.S.) has not stopped politicians from denouncing strong encryption as shielding the members of the Daesh death cult who fancy themselves an “Islamic State.”

“The Achilles heel in the Internet is encryption,” Sen. Dianne Feinstein (D.-Calif.) said on CBS’s Face the Nation Sunday. The vice chairman of the Senate Select Committee on Intelligence then cited a Paris-attacks theory that had already been debunked: “Terrorists could use PlayStation to be able to communicate, and there’s nothing that can be done about it.”

(If only they would: Sony’s game console doesn’t employ the kind of encryption that would shut out police with a warrant.)

On that same program, Rep. Michael McCaul (R.-Tex.) voiced a similar thought: “The biggest threat today is the idea that terrorists can communicate in dark space, dark platforms, and we can’t see what they’re saying.”

A former speechwriter for U.K. prime minister David Cameron, Clare Foges, phrased things a little more bluntly in an op-ed in the Telegraph: “Terrorists want ever-safer spaces to operate in, and the tech giants say ‘Sure! Here’s an end-to-end encrypted product that is impossible to crack.’”

(Note that Yahoo Tech’s publisher has been testing end-to-end e-mail encryption software developed with help from Google.)

We’ve had this argument before

The remedy proposed most often is to require vendors of encryption software to include a mechanism that would let the government see encrypted content after getting a search warrant, “a kind of secure golden key,” as the Washington Post’s editorial board memorably wrote last year.

That sounds great in principle, but in practice it means impaired security. As 15 veteran cryptographers explained in a paper posted in July, such a system will always make encryption more fragile and give attackers, from organized crime to other governments, a high-value target in the form of whatever third party holds these backup keys.

The Typex machine, built by the British during WWII to emulate Enigma (Photo: Micastle/Flickr).

It also breaks “forward secrecy,” in which a new key is used for each exchange to ensure that older data can’t be exposed by the compromise of a key currently in use.
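For the curious, here is a rough Python sketch of that idea, not anybody's production code. It uses a toy Diffie-Hellman exchange in which both sides generate throwaway keys for every conversation. The names `P`, `G`, `ephemeral_keypair`, `session_key` and `run_exchange` are made up for this illustration, and the numbers are far too small to be safe; real apps rely on vetted cryptographic libraries instead.

```python
import hashlib
import secrets

# Toy parameters for illustration only: far too small for real-world use.
P = 2**127 - 1  # a Mersenne prime used as the modulus
G = 3           # base for the exchange (an assumption of this sketch)


def ephemeral_keypair():
    """Generate a fresh (private, public) pair meant for one exchange only."""
    private = secrets.randbelow(P - 3) + 2  # random value in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def session_key(my_private, their_public):
    """Derive a 32-byte session key from the shared Diffie-Hellman secret."""
    shared = pow(their_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def run_exchange():
    """Simulate one exchange between two parties and return both derived keys."""
    a_priv, a_pub = ephemeral_keypair()
    b_priv, b_pub = ephemeral_keypair()
    key_a = session_key(a_priv, b_pub)
    key_b = session_key(b_priv, a_pub)
    # The private values go out of scope here, standing in for their deletion.
    return key_a, key_b
```

Each call to `run_exchange()` yields a key that both parties share but that is unrelated to the key from any other call, so stealing today's key reveals nothing about yesterday's traffic. A mandated backup key that can unlock every session is a long-lived secret of exactly the kind this design tries to eliminate.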

The lack of such exceptional access does not leave law enforcement powerless. Investigators can use the flood of metadata generated by communications apps, even encrypted ones, to trace relationships. They can sidestep encryption by compromising a device with malware. They can pose as an insider.

But suppose that the wish of the editorial board at my old employer, the Washington Post, came true, and that Apple and Google could engineer a system in which your data stays protected until a court orders otherwise.

Then you’d have a problem that’s especially relevant when dealing with adversaries in other countries: Apple and Google do not have a monopoly on writing encryption software or on getting people to use it.

In other words, if the bad guys really want encryption, they don’t have to use government-certified software with a spare key for law enforcement.

Software wants to be freely distributed

Any programmer in the world can code comparable tools, with help from numerous open-source libraries of cryptographic code. And anybody else online can use the results.

That’s why, for instance, the U.S. State Department helps fund the development of Tor, an anonymous and private communications system that helps dissidents escape the surveillance of their repressive governments.

Advocates for a ban on strong crypto say that aftermarket alternatives would be harder to use.

A German Enigma machine on display at Bletchley Park (Photo: Micastle/Flickr).

“Law enforcement might be happy with a world where encryption is available but only in dank corners from dubious suppliers,” e-mailed Stewart Baker, former general counsel for the National Security Agency. “Any supplier big enough to have a reputation and to do the crypto right is likely also big enough to reach with regulation – or even liability.”

But the authors of the atrocities in Paris and across the Mideast, to judge from the technical advice they hand out, already strive to do things the hard way—including using Tor for encrypted communication.

The result, as former homeland security secretary Michael Chertoff summed up at an event in Washington in September: “All you would do is reduce security for the good guys.”

The “you geniuses can figure it out” copout

Political leaders rarely respond to this dilemma with an unambiguous defense of Americans’ right to encryption, such as the ones offered by Sen. Ron Wyden (D.-Ore.) in an essay Monday and presidential candidate Sen. Rand Paul (R.-Ky.) at a Yahoo News conference two weeks ago.

You’re more likely to see vague calls for collaboration between government and industry. For example, Democratic presidential candidate Hillary Clinton suggested in a recent speech before the Council on Foreign Relations that we “challenge our best minds in the private sector to work with our best minds in the public sector to develop solutions that will both keep us safe and protect our privacy.”

You can read that as accepting the unworkability of crypto that’s both secure and police-friendly (her former advisor Alec Ross thinks so, to judge from his tweets). But it also fits into a long line of pleas by politicians imploring the tech industry to put its thinking cap on and try harder to solve a problem it consistently labels impossible.

Punting an intractable tech-policy problem over to Silicon Valley is not a new habit. I heard a similar line some 15 years ago, when Hollywood and its friends in Washington kept urging the tech geniuses to create a system of “digital rights management” controls that would ensure nobody could ever copy a song or a movie without permission.

Well, no still means no. And all the time spent demanding that the tech industry square the circle is time that could have been spent working on better ways to find and catch those who would do us harm.

Email Rob at rob@robpegoraro.com; follow him on Twitter at @robpegoraro.