Google built a machine that’s better at games than you are

Google is busy making sure that our demise at the hands of robots that are super-good at Breakout is inevitable.

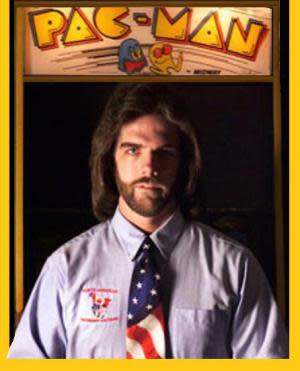

Above: Look out, Pac-Man record holder Billy Mitchell. The machines are coming for you.

Image Credit: Guinness World Records

The search giant built an artificially intelligent machine that teaches itself how to play games, according to a study published in the journal Nature (via BBC News). Google’s scientists designed the system to operate and learn the way the human brain does. It learned the rules, and how to win, in 49 different games for the Atari 2600 gaming system. In the majority of these games, it was eventually able to play better than nearly anyone alive. Sounds like a challenge for gaming’s John Henry: Billy Mitchell, who is a world record holder in several classic games and was the first person to achieve a perfect score in Pac-Man.

This computer achievement is the latest example of Google’s machine-learning technology, which its DeepMind Technologies subsidiary has developed over the last several years. Google is very interested in creating computers that think like people, since that will help the company to better understand web content and serve it up to its customers. It will also enable the company to expand its efforts to get into the Internet of things, which enables real-world devices — like thermostats — to make decisions for you. In 2014, Google purchased DeepMind for between $400 million and $500 million.

In a demonstration that aired on BBC News, DeepMind vice president of engineering Dr. Demis Hassabis explained how the company’s A.I. teaches itself to play Breakout, a game in which you move a bar-shaped bat to bounce a ball into blocks and destroy them.

“After 30 minutes of gameplay, the system doesn’t really know how to play the game yet,” he said. “But it’s starting to get the hang of it — that it’s supposed to move the bat to the ball. After an hour, it’s a lot better, but it’s still not perfect. And then, if we leave it playing for another hour, it can play the game better than any human. It almost never misses the ball, even when it comes back at very fast angles.”

But DeepMind’s supersmart robot didn’t stop once it had developed superfast reflexes. The company left its A.I. running for another couple of hours, and the machine used that time to discover an optimal strategy: It dug a tunnel through the side of the bricks and got the ball to bounce around behind them.

“[This is a strategy] that the programmers and researchers working on this project didn’t even know [about Breakout],” said Hassabis. “We only told it to get the highest score it could.”
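For the curious, here is a rough idea of what "only told it to get the highest score" can look like in code. This is a minimal sketch, not DeepMind's system: it uses plain tabular Q-learning, a much simpler relative of the deep-learning approach in the study, on a made-up catch-the-ball game rather than Breakout. The grid size, the `step`, `train`, and `catch_rate` functions, and every parameter value are assumptions chosen for illustration. The program is never told to move the bat under the ball. It only sees a score of 1 when it happens to catch one.

```python
import random
from collections import defaultdict

WIDTH, HEIGHT = 5, 5          # hypothetical toy grid, not Atari Breakout
ACTIONS = (-1, 0, 1)          # move bat left, stay put, move bat right


def step(state, action):
    """Advance one tick: move the bat, then drop the ball one row."""
    ball_col, ball_row, bat_col = state
    bat_col = min(WIDTH - 1, max(0, bat_col + action))
    ball_row += 1
    done = ball_row == HEIGHT - 1
    # The score is the only feedback: 1 for a catch, 0 otherwise.
    reward = 1.0 if done and bat_col == ball_col else 0.0
    return (ball_col, ball_row, bat_col), reward, done


def train(episodes=5000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn by trial and error which move pays off in each situation."""
    rng = random.Random(seed)
    q = defaultdict(float)    # (state, action) -> estimated future score
    for _ in range(episodes):
        state = (rng.randrange(WIDTH), 0, WIDTH // 2)
        done = False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)          # try something random
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice(
                    [a for a in ACTIONS if q[(state, a)] == best]
                )
            nxt, reward, done = step(state, action)
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Nudge the estimate toward the score seen plus expected future score.
            q[(state, action)] += alpha * (
                reward + gamma * future - q[(state, action)]
            )
            state = nxt
    return q


def catch_rate(q):
    """Play greedily from every starting ball column; return fraction caught."""
    catches = 0.0
    for ball_col in range(WIDTH):
        state, done, reward = (ball_col, 0, WIDTH // 2), False, 0.0
        while not done:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
            state, reward, done = step(state, action)
        catches += reward
    return catches / WIDTH
```

Calling `catch_rate(train())` compares what the program has learned against an untrained table, `catch_rate(defaultdict(float))`, which simply shoves the bat to the left wall every time. The real system faced a far harder version of the same problem, since it had to work from raw screen images instead of a tidy list of positions.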

This is one of the best examples of how A.I. is reaching out into new frontiers. Previously, computer reasoning focused on simple tasks. But games have a lot going on and are often difficult for humans, and now an A.I. has taught itself to beat people at many of them.

It is this type of artificial awareness that will power things like self-driving cars and autonomous robots in the very near future.
