OpenAI’s Sora Shines a Spotlight on The Need for ‘Ethically Sourced’ AI | Commentary

OpenAI’s cinematic-quality AI video generator Sora, and the power of what it represents, shook Hollywood just weeks ago. Its shocking quality certainly raises the question of what AI means for future Hollywood productions. But Sora also, once again, puts the spotlight on the fundamental issue of AI “training” on copyrighted works without consent.

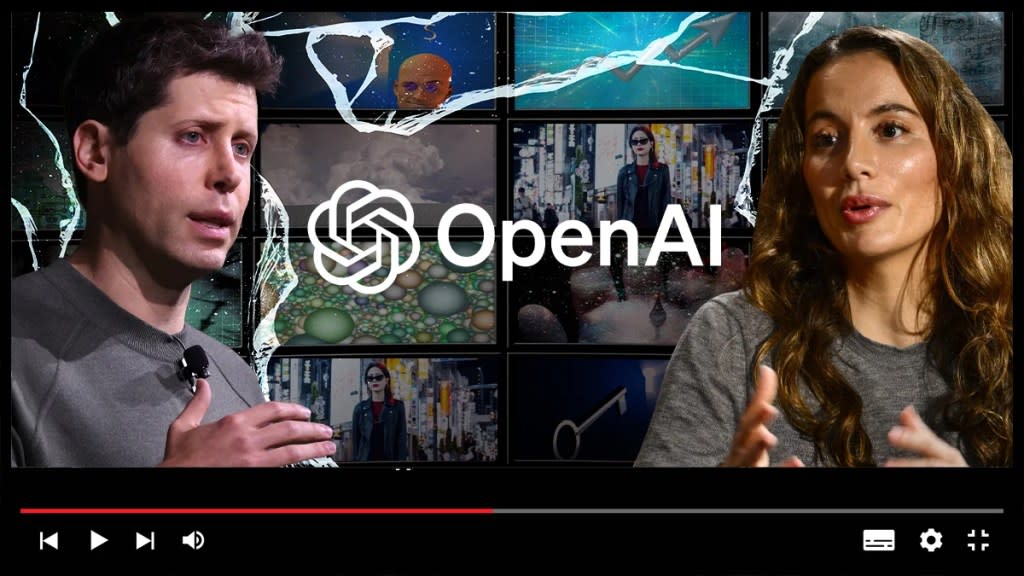

Of course, when asked, OpenAI — like most generative AI companies — never comes right out and says that’s what it does. The company simply says that it trains Sora on “publicly available” works. While that sounds innocuous enough, it really isn’t. If it were, why would the company be so cagey about it? When directly asked whether Sora trained on YouTube videos, OpenAI’s CTO Mira Murati deflected. “I’m actually not sure about that,” she said.

We now know that it’s what we’ve suspected all along. “Publicly available” simply means that the food OpenAI uses to train its AI (because that’s what it is to OpenAI’s voracious AI pet) is content accessible online, much of which is, of course, copyrighted. Thanks to some intrepid journalistic digging by The New York Times, it’s now clear that OpenAI trained GPT-4, the large language model (LLM) behind ChatGPT, on transcripts of over one million hours of YouTube videos, all without payment or consent.

And here’s a big tell about how OpenAI itself really feels about what it’s doing. The name for its internal speech-recognition program that takes YouTube videos and transcribes them into text for training purposes is Whisper, as in, let’s keep things on the down low. I’m no linguist, but it certainly seems like an admission of some sort to me. (OpenAI did not respond to a request for comment from TheWrap.)

Apparently, even YouTube, the copyright-infringing OG, agrees. YouTube doesn’t precisely couch the issue in those terms, of course, perhaps because Google is reportedly training its own AI using YouTube videos. Earlier this month, CEO Neal Mohan warned that if OpenAI had used YouTube videos to train Sora, that would be a “clear violation” of YouTube’s terms of service. That’s a rich claim coming from YouTube, since YouTube built its initial base by letting users upload any videos they wanted, including copyrighted videos like SNL’s notorious “Lazy Sunday” that blew the lid off the platform, without securing licenses or compensating rights holders. One could say that U.S. copyright law and copyright notices were the relevant “terms of service” at the time.

Given that inconvenient truth, some would say YouTube’s position sounds a bit, shall we say, hypocritical. But putting that aside for the moment, Mohan has a point. Why should OpenAI, or any other LLM developer, be able to feed off the works of others in order to build its value as a tool (or whatever you call generative AI)? And even more pointedly, where are the creators in this equation?

Apart from trying to dodge those specific questions, OpenAI predictably tries to turn the tables on Google, contending that what it does is effectively no different from what Google itself did when it vacuumed up millions of copyrighted books for its Google Books project in order to make them searchable online. At the time, the Second Circuit Court of Appeals blessed Google’s actions as “fair use” in a seminal case, Authors Guild v. Google, that is always cited by those in tech who feel that creative works should be considered fodder for some kind of higher calling of infinite progress.

But Google showcased only snippets of those books in its search results – not the whole enchilada. There was no market substitution here. Once a user found the copyrighted work through search, they still would need to actually go out and buy the real thing. That’s a fundamentally different proposition than Sora’s. Sora doesn’t call attention to other copyrighted works and build new channels of monetization for them. Sora, instead, competes directly with them (at least it will when it becomes widely available).

Anyway, if OpenAI were so confident in the righteousness of its position, why be so cagey about it? Because it isn’t confident. Generative AI tech without content is essentially useless. We know that, and they know that. That means the artists and creators of those works should be compensated. You can’t reuse my article here without my permission simply because it’s been posted online. And that basic fact doesn’t change simply because you’ve sucked millions of works into your training vortex. It’s not just about the outputs generated by AI (that’s a separate copyright matter); it’s about the inputs as well.

At a minimum, it’s hard to argue that OpenAI’s opacity about what’s really going on shouldn’t be confronted head on. All of us (creators and consumers alike), for a whole host of reasons, deserve to know precisely what OpenAI uses in its training data sets.

That kind of transparency is precisely what President Joe Biden’s Executive Order on AI calls for. Congress finally took Biden’s hint when, just last week, U.S. Rep. Adam Schiff introduced “The Generative AI Copyright Disclosure Act.” Following the European Union’s own historic legislation on the subject, Schiff’s bill would require anyone who uses a data set for AI training to send the U.S. Copyright Office a notice that includes “a sufficiently detailed summary of any copyrighted works used.”

Essentially, this is a call for “ethically sourced” AI and transparency so that consumers can make their own choices. Think of it like nutritional labeling on food products for consumer safety reasons. “Trust and safety” logically should apply here too, and artists certainly agree. Two weeks ago, leading musicians including Billie Eilish signed an open letter urging the tech community to knock it off and stop training AI models on their work without consent or compensation. So the heat is most definitely on, and it’s up to the creative community to keep the issue on the front burner.

So let’s first pull back the curtain on what’s really going on in the AI sausage factory by demanding transparency. Then we can all confront the copyright legal issues head on, working from a reality we all understand. To infringe, or not to infringe (because it’s fair use)? That is the question, and it’s a question winding through the federal courts right now that will ultimately find its way to the U.S. Supreme Court.

And when it does, my prediction is that even this wacky court will ultimately protect artists in the most basic of ways and rule in favor of creators. It will follow its surprising (to many) recent decision in the Andy Warhol Prince copyright case, Andy Warhol Foundation v. Goldsmith, in which it defined a new kind of direct-harm-to-creator exception to fair use. It will reject Big Tech’s efforts to train their LLMs on copyrighted content without consent or compensation, properly finding that AI’s raison d’être in those circumstances is to build new systems that compete directly with creators; in other words, market substitution.

Simply because something is “publicly available” doesn’t mean that you can take it. It’s both morally and legally wrong. I’m an IP lawyer and welcome a healthy debate on that subject. But for god’s sake, be transparent about what you’re doing.

Reach out to Peter at peter@creativemedia.biz. For those of you interested in learning more, sign up for his “the brAIn” newsletter, visit his firm Creative Media at creativemedia.biz, and follow him on Threads @pcsathy.

The post OpenAI’s Sora Shines a Spotlight on The Need for ‘Ethically Sourced’ AI | Commentary appeared first on TheWrap.