My only Google I/O excitement was a two-second glimpse of secret Google hardware

What you need to know

At Google I/O 2024, Google announced Project Astra, a multimodal AI assistant.

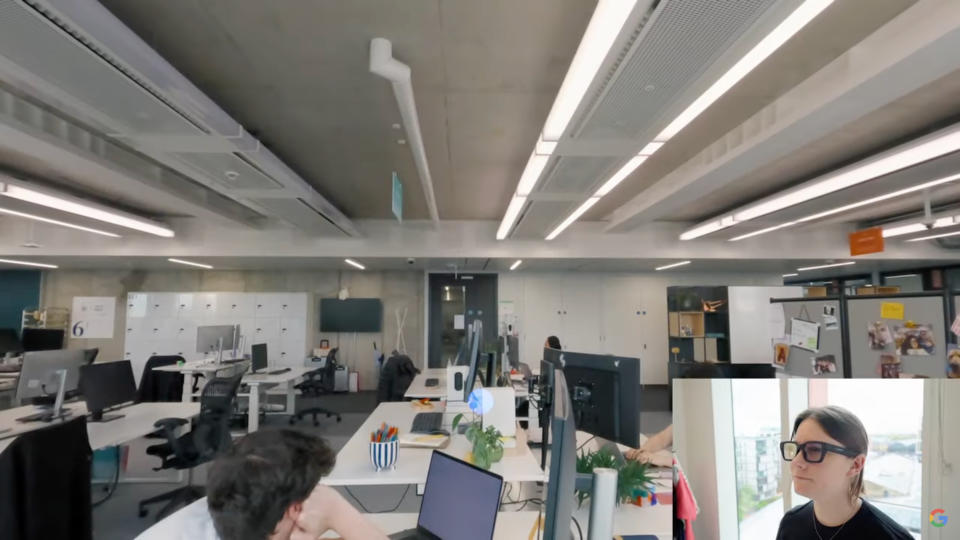

In its filmed demo, a Google engineer wears AR glasses that film her surroundings for Gemini to respond to.

We've heard rumors about Project Iris, Google's AR prototype, but Google hasn't officially announced anything.

The first day of Google I/O 2024 went all-in on AI, neglecting any mention of hardware we hoped to see like the Pixel 9 or Pixel Fold 2. But one unannounced Google hardware project made a blink-and-you-miss-it appearance: a secret AR glasses prototype that looks surprisingly consumer-ready.

Demoing several Gemini AI upgrades, Google revealed Project Astra, a real-time multimodal AI from the Google DeepMind team that looks at your surroundings through a camera and answers your questions based on that visual context.

You can watch the demo below. And if you jump to 1:25, you can see the moment when the Google engineer puts on a thick-rimmed pair of glasses before the camera view changes to her eyeline.

Google didn't call attention to these glasses, and we don't know what they're called. But they're clearly AR glasses, given the holographic dot and text in the visual center that displays Gemini AI answers to her questions. Google claims it was "captured in a single take, in real time," which suggests the footage is real and undoctored.

Meta Ray-Ban smart glasses also have an AI question function, but with photo snapshots instead of video and Meta AI instead of Gemini. Plus, they have no visual way to show the AI's answer, relying solely on audio. That's where Google's mysterious AR glasses would potentially step things up.

We've waited for a Google Glass successor for years. Behind the scenes, we knew about Google's "Project Iris," alleged VR/AR glasses with real-time Google Translate capabilities. Reported difficulties with the project had us worried that Iris might never come to fruition.

This demo, however, has us wondering whether Google might be able to sell AR glasses powered by Gemini AI sooner than we anticipated. That would certainly be more exciting than many of the straightforward Google I/O 2024 AI demos today, like AI Overview search summaries.

Google's big XR project is developing mixed-reality software (AndroidXR) for Samsung's upcoming XR headset, supposedly due out this year. In theory, Google's Iris AR glasses could follow Samsung's headset with their own spin on AndroidXR, designed to be worn out in the world.

Imagine Google AR glasses that can interpret your surroundings in real time, along with other Google app capabilities like Google Maps directions or real-time Google Translate. If they're really coming soon, that could spice up a rather dull I/O presentation.