Instagram to blur nude images sent to under-18s

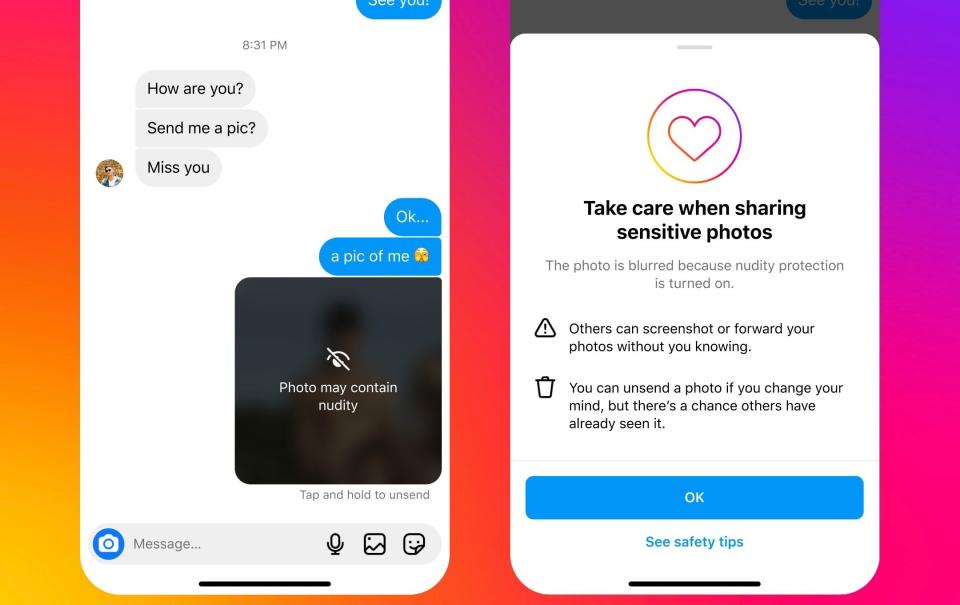

Instagram will blur explicit photos sent to under-18s in an effort to protect children from unsolicited images.

The social media app will automatically prevent young users from seeing images if its algorithms detect nudity being sent in private messages.

The move comes amid growing concerns about the negative impact of social media and smartphones on teenagers, which have led to calls for younger teenagers to be banned from owning smartphones, and amid a rise in online blackmail using intimate photos of victims.

Instagram will also warn under-18s who share explicit images of themselves that sending such pictures could leave them vulnerable to scams or bullying.

The app said it was introducing the change to protect children from sextortion scammers, who blackmail young people after encouraging them to share intimate images, either seeking money or requiring them to obtain and share intimate images of other children.

In addition, the app will force users to confirm before they forward explicit images sent to them to another account, warning them that it may be illegal to do so.

The feature will be automatically turned on for under-18s and adults will be asked if they want to activate it.

However, Instagram will use an AI algorithm within the app to detect if a photo features nudity, rather than scanning it as it is sent through Instagram’s servers.

This means the feature will work even if messages are end-to-end encrypted, but also that the company will leave it up to users to decide whether to take action on potentially illegal activity. It will only take action when images are reported by users themselves.

Meta, Instagram’s parent company, said it was also developing technology that would identify accounts that might be carrying out sextortion scams and that it would block them from finding and messaging accounts owned by teenagers.

Social media companies are under growing pressure to protect children as new online safety laws come into force in Britain.

This week, it emerged that ministers were considering further proposals, including a ban on under-16s buying phones.

Last year, a former Instagram employee said Meta’s management had ignored his warnings about harms to teenagers on the app.

Emails released by a US Congressional committee in January showed that Meta's chief executive, Mark Zuckerberg, rejected pleas from Sir Nick Clegg, the company's head of global affairs, to invest more in child safety, although the company said at the time that the documents were “cherry picked” and lacked context.