HBM supply from SK hynix and Micron sold out until late 2025

The chief executive of SK hynix said this week that the company's supply of high bandwidth memory (HBM) is sold out for 2024 and for most of 2025. The claims follow similar comments made in March by the CEO of Micron, who said that the company's HBM production was sold out through late 2025. Essentially, this means that demand for HBM exceeds supply.

SK hynix's announcement is more impactful than Micron's because of the company's larger market presence. According to TrendForce data, SK hynix currently holds between 46% and 49% of the HBM market, while Micron holds a much smaller 4% to 6%. Because the production capacities of both SK hynix and Micron are fully booked, over half of the industry's total supply of HBM3 and HBM3E for the upcoming quarters is already sold out. Samsung, the only other HBM producer, has yet to comment on its HBM bookings, but it likely faces similar demand, and its high-bandwidth memory products are probably also sold out for quarters to come. In other words, HBM supply can barely keep up with demand.

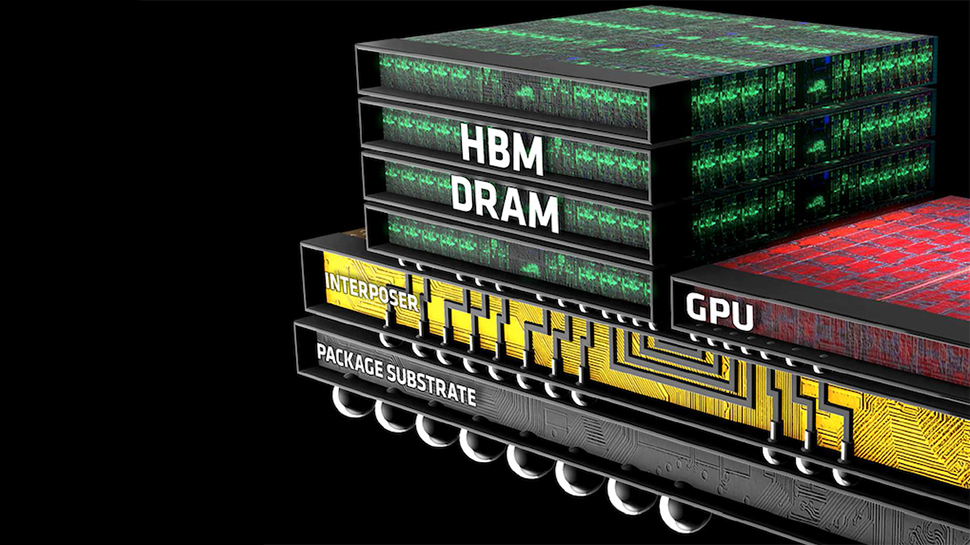

This is not particularly surprising, as escalating demand for advanced processors for AI training and inference is driving up demand for associated components, notably HBM and advanced packaging services. SK hynix, the company that first introduced high-bandwidth memory in 2014, is still the largest supplier of HBM stacks, largely because it sells to Nvidia, the most successful supplier of GPUs for AI and HPC. Nvidia's H100, H200, and GH200 platforms rely heavily on SK hynix for their supply of HBM3 and HBM3E memory.

The surge in processor demand is directly linked to the expansion of AI adoption. Processors like Nvidia's H100 and H200 need fast, high-capacity memory, something traditional DDR5 and GDDR6 simply cannot provide. As a result, HBM3 and HBM3E are seeing unprecedented demand. Nvidia is not alone in its appetite for HBM, either: Amazon, AMD, Facebook, Google (Broadcom), Intel, and Microsoft are also ramping up production of their latest processors for AI and HPC.

In addition, SK hynix said that it has begun sampling its new 12-Hi 36GB HBM3E stacks with customers and plans to start mass shipments in the third quarter of this year. This development is part of SK hynix's strategy to maintain its leadership and meet the growing needs of customers who require high-capacity HBM3E solutions.