Google I/O recap: New 'Project Astra' AI agent revealed along with Gemini and Android updates

Google's big summer developer conference, Google I/O, happened on Tuesday in California.

AI was the big theme, with updates to Gemini, Android, and a prototype "Project Astra" AI agent.

Business Insider was in attendance and covered the biggest announcements — you can catch up below.

Google revealed what it's been quietly working on in a big summer event on Tuesday.

CEO Sundar Pichai took the stage to kick off Google I/O, the company's annual developer conference.

Google showed off some big updates to its latest AI models — including an impressive new AI agent called "Project Astra" — along with a look at what the future of Google Search looks like with generative AI built-in.

Project Astra is a prototype from @GoogleDeepMind exploring how a universal AI agent can be truly helpful in everyday life. Watch our prototype in action in two parts, each captured in a single take, in real time ↓ #GoogleIO pic.twitter.com/uMEjIJpsjO

— Google (@Google) May 14, 2024

The latest features coming to Android were also detailed, like Circle to Search.

The keynote was a chance for Google to respond after its rival, OpenAI, seemingly tried to upstage the company with an event of its own the day before, where it showed off a new flagship model, GPT-4o, and the improvements it brings to ChatGPT.

Overall, it delivered, with a strong pipeline of impressive AI features on the way.

Business Insider was in attendance at Google I/O and covered the biggest announcements — keep scrolling for a recap.

That's a wrap!

This was a confident Google I/O for the company.

It's in an AI sweet spot. Yes, OpenAI is giving Google a run for its money, but CEO Sundar Pichai seems pretty happy about the battle ahead, with plenty of updates on the way for Gemini, Google Search, and Android.

Sundar Pichai says the word "AI" was said 120 times during today's Google I/O keynote.

Google's CEO takes the stage to close out the keynote and gets some laughs as he reveals he asked Gemini to count how many times "AI" was said today (120 times).

Pichai says he has a feeling that someone out there may be counting how many times Google mentioned AI today, and since a big theme today is letting Google do the work for you, it went ahead and counted so that you don't have to.

The count then changed to 121.

As we near the close of the keynote, Google talks about its open-source models, Gemma, and broader safety considerations.

Toward the end of the keynote, Google discusses a series of open-source AI models called Gemma.

This is a very different approach from the main Gemini AI models.

The Gemini offerings are closed tightly. Outside developers can't see the code behind Gemini, or the weights that Google used to build these models. You just have to use Gemini off the shelf, and really through Google's Cloud for enterprise use cases.

Contrast this with Meta, which has mostly open-sourced its Llama model. That's way more open than Google. This means there's a less clear way that Meta will make money from its huge investments in Llama models.

Google's path is clearer: It gets subscribers and cloud users to just pay cold hard cash to use these tools. This is partly why the Gemma open-source models seemed more like an afterthought at the end of the I/O keynote. Now, Meta may get a bigger developer community coalescing around its Llama models. That may pay off down the line. But for now, most of the top AI companies are taking the closed route in AI. Including, ironically, "Open"AI.

On the safety front, Google says it's been "red-teaming" AI updates to stress test them for vulnerabilities ahead of release.

When it comes to combating misinformation and increasing security, Google's SynthID helps watermark AI images.

Google is getting to the money part of its new AI models.

This is where Wall Street is likely watching carefully in an attempt to better understand an important business question: How will Google make money from all this new technology?

Generative AI is a huge change for the company, which has lived on digital ads for well over a decade.

One example from the I/O keynote on Tuesday: Google will charge $7 per 1 million tokens for its top Gemini 1.5 Pro model. This is how much it costs for developers to drop massive amounts of data into the AI model.

There's a smaller 1.5 Flash version of the Gemini AI model too, which isn't as powerful, but it's lighter weight and more cost-efficient. Google said today that it will cost 35 cents for 1 million tokens for 1.5 Flash. That's a big saving. And it shows how expensive all this is for Google to process, especially with the biggest 1.5 Pro model.
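To put the two quoted prices side by side, here is a rough sketch of the arithmetic in Python. It assumes a single flat rate per million tokens for each model, which is a simplification, and the model labels and the `cost_in_dollars` helper are hypothetical names used only for illustration, not Google's API.

```python
# Prices as quoted in the keynote coverage, in US cents per 1 million tokens.
# Integer cents keep the arithmetic exact. The model labels are illustrative only.
PRICE_CENTS_PER_MILLION = {
    "gemini-1.5-pro": 700,   # $7 per 1M tokens
    "gemini-1.5-flash": 35,  # 35 cents per 1M tokens
}


def cost_in_dollars(model: str, tokens: int) -> float:
    """Return the illustrative cost in dollars for a given number of tokens."""
    return PRICE_CENTS_PER_MILLION[model] * tokens / 1_000_000 / 100


pro = cost_in_dollars("gemini-1.5-pro", 1_000_000)
flash = cost_in_dollars("gemini-1.5-flash", 1_000_000)

# How many times cheaper Flash is than Pro at the quoted prices.
ratio = PRICE_CENTS_PER_MILLION["gemini-1.5-pro"] / PRICE_CENTS_PER_MILLION["gemini-1.5-flash"]

print(pro, flash, ratio)
```

At these quoted rates, Flash works out to one-twentieth the price of Pro for the same number of tokens.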

Gemini Nano with multimodality is coming to Pixel phones later this year.

Google's accessibility feature TalkBack is getting some updates later this year. If someone sends you a photo, you get a description of what it looks like; or if you're shopping online, you get a description of the product.

The Gemini Nano demo on an Android phone got a very big round of applause.

Thanks to Gemini Nano, @Android will warn you in the middle of a call as soon as it detects suspicious activity, like being asked for your social security number and bank info. Stay tuned for more news in the coming months. #GoogleIO pic.twitter.com/wtc3rrk0Gc

— Google (@Google) May 14, 2024

Google showed off how it works if you get a scam call offering to transfer a user's money to a new account. An alert pops up saying it might be a scam.

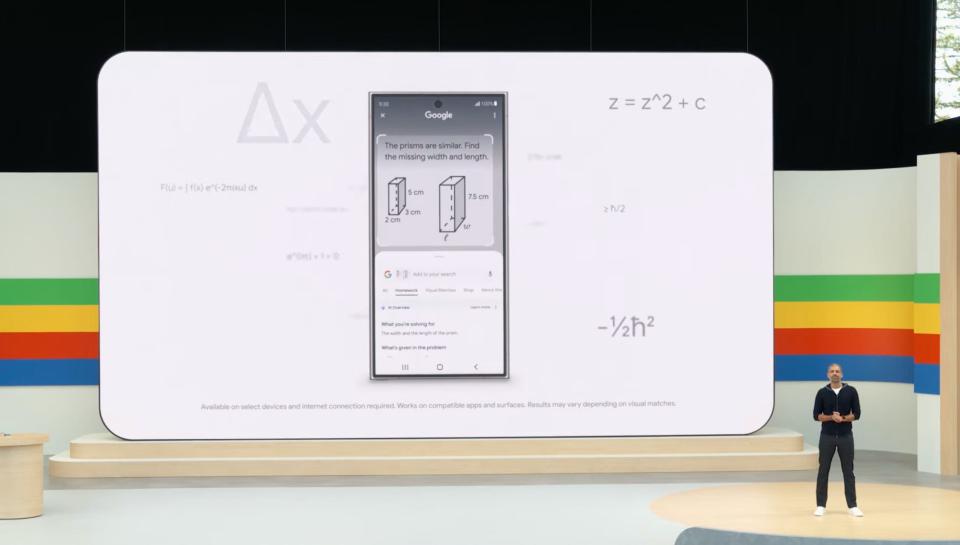

We're getting into some specific Android AI features, like Circle to Search...

The Android presentation comes at the end of the keynote at this year's I/O conference, which shows how much Google's priorities have changed lately. (There's another whole Android developer event later on Tuesday, so it's not entirely a backwater.)

But a lot of the talk today is about how Gemini AI models can be used easily on Android smartphones.

Talking specific features, there are updates to the cool "Circle to Search" feature announced earlier this year that will help users pinpoint their queries without having to open the Google app.

Circle to Search now makes for a great study buddy 📝 You can circle complex physics problems on your phone or tablet to get step-by-step instructions to learn how to solve. #GoogleIO pic.twitter.com/nFFW36BwWo

— Google (@Google) May 14, 2024

Circle to Search is available today, but exclusively on Android.

Next up, Android news!

Android is getting updated with "AI at its core," Google's president of the Android ecosystem, Sameer Samat, tells the crowd.

The three main changes coming to Android, Samat says, are:

AI-powered search at your fingertips

Gemini becoming your new AI assistant on Android

On-device AI to unlock new experiences

Google shows off "Gems" — custom Gemini bots.

Sissie Hsiao, another Google exec, introduces "Gems" — customized versions of Gemini.

Basically, you can easily set up a specialized Gemini AI buddy that can act as your running coach, for example, or a sous chef or yoga guru.

You tap to create one, write your instructions once, and come back whenever you need it.

Gems are designed to be helpful when you have a specific way you want to use Gemini again and again.

They'll roll out in the coming months to Gemini Advanced subscribers.

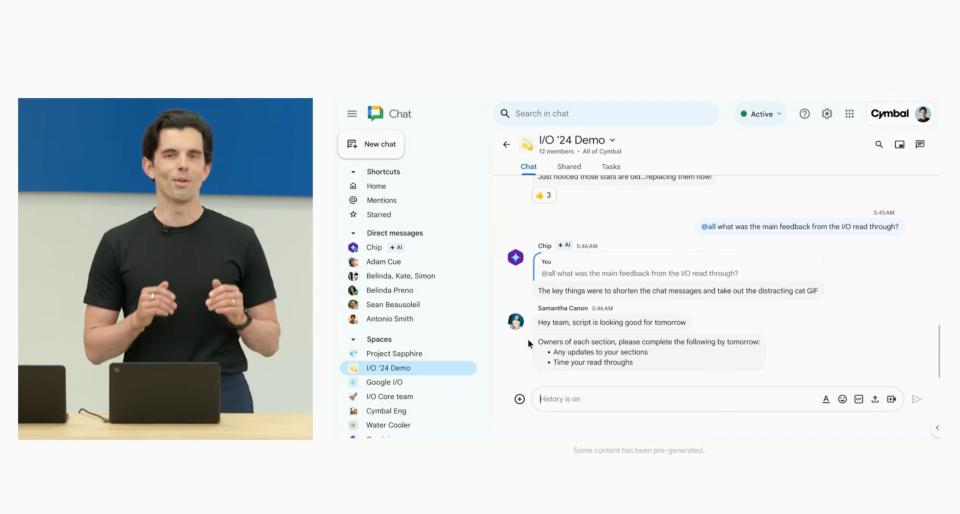

Tony Vincent takes the stage to talk about Google AI Teammate

Next, we're seeing a demo of Google AI Teammate.

Virtual teammates can be added to the work chat as a record keeper.

"Chip," the virtual teammate used in Google's example, is that coworker you can go to with those questions you don't want to ask your manager.

It keeps a memory of conversations to be able to call back to moments you might've missed.

You can assign it jobs, including monitoring and tracking projects. You can ask it questions like, "Are we on track to launch?" It will then come back with a response outlining a clear timeline and it can also flag potential issues. You can also customize the teammate based on your team's needs.

We're onto some work use cases for Gemini.

We're seeing updates to Google Workspace and how Gemini can plug into Gmail and other Google products and help you be more efficient at work.

This is another example of how Google has an edge against AI companies like OpenAI. It has applications used by billions of people already, and AI models can just make those services more useful (and therefore more valuable).

For example, VP of Google Workspace Aparna Pappu shows off Side Panel Assistant, which will read through all those emails you might've missed. Side Panel Assistant will organize email attachments and information into Google Sheets. It can also summarize emails and suggest replies based on the context of the conversation.

Once you show Gemini what you want it to do, it can remember and continue the workflow moving forward.

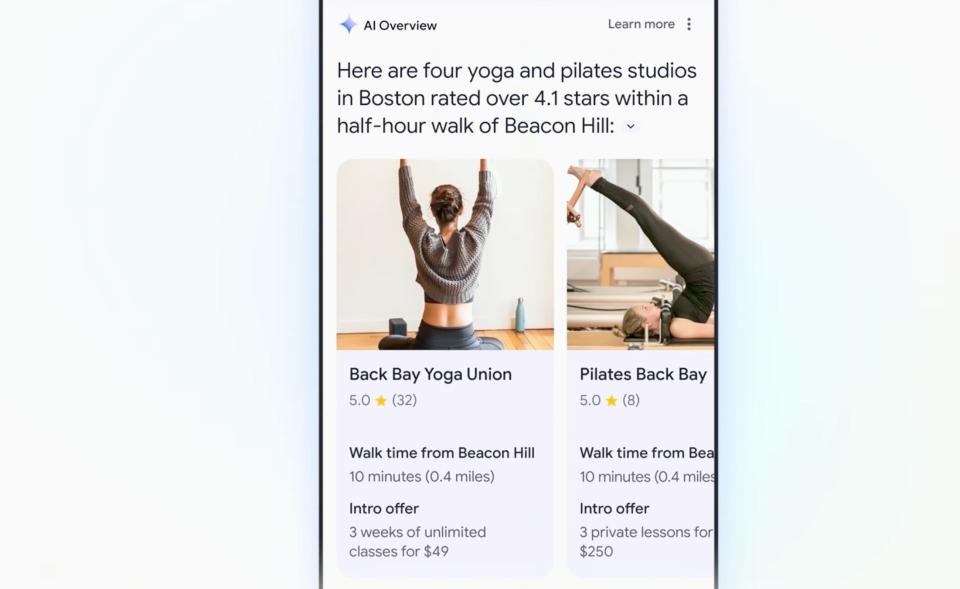

Here's what the new Google Search looks like...

Google Search exec Liz Reid lays out new AI features for Google's most important product.

This is the moment at Google I/O where other internet businesses hold their collective breath and wait to see if Google's upcoming changes could impact their business models or search rankings.

This year, Reid introduced a new "AI organized" search results page that she said "breaks AI out of the box." Google Search will use more AI to put results into helpful clusters.

In one example, Reid said Google might suggest restaurants with live music even if the searcher had not thought of that.

It will also take into account more variables from Google's huge store of digital information. For instance, the time of the year. You search for romantic restaurants in Dallas. It's warm in Dallas this time of year, so Google suggests rooftop restaurants.

Reid said this new type of search is coming soon to categories such as dining and recipes, movies, hotels, books, and shopping. This should cause concern for companies in the shopping and book realms.

You'll start to see the features rolling out to Google Search in the coming weeks. You can opt into Google's early search preview for a chance to be among the first to access it.

We're onto Google's big money-maker: Google Search.

CEO Sundar Pichai says generative AI will bring big changes to its most popular product, Google Search.

It's time to see what the future of Googling something looks like.

"Google will do the Googling for you," Google's head of search Liz Reid says.

Research that might have taken you hours can now be done in seconds, she says.

AI Overviews will power Google Search to pull the best answers to complex questions.

Google uses "multi-step reasoning" to answer your entire question without you having to break it down into more than one search.

The new Google Search will help you plan meals, map out a date, and plan trips.

This is big — Google's Veo text-to-video generator will compete against OpenAI's Sora.

Google unveils Veo, its new AI video generator that will square off against OpenAI's Sora.

Veo can understand film terminology like aerial shots and timelapses. It will live in Google's VideoFX app.

We're also shown a video of Donald Glover experimenting with Veo to make some high-end AI videos. He's a fan.

"Everybody's going to become a director and everyone should be a director," Glover says. "Because at the heart of all this is just storytelling. The closer we are to being able to tell each other our stories the more we'll understand each other."

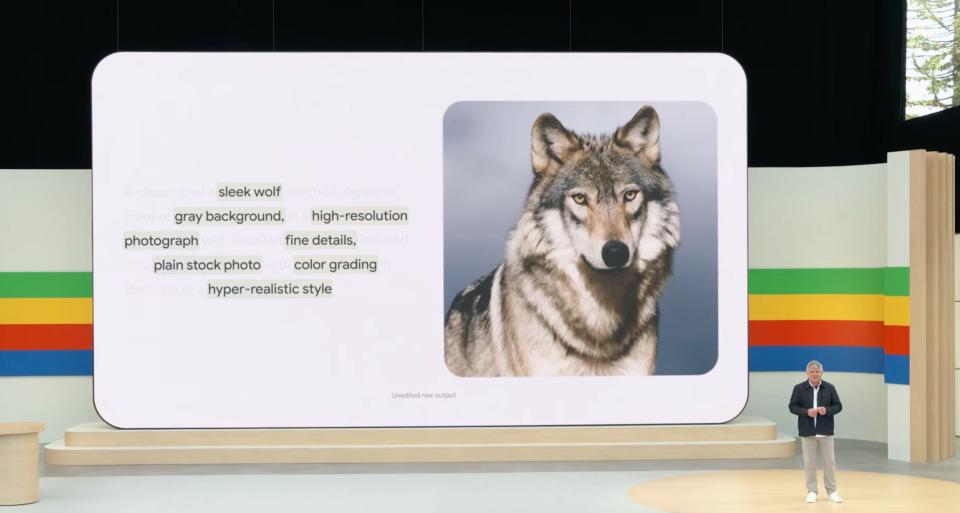

Google reveals a new version of its Imagen image generator.

Say hello to Imagen 3, the latest version of Google's AI image generator.

Google emphasizes its ability to capture smaller details and render text.

Next up, Lyria, Google's AI music generator

Google taps musician Wyclef Jean to demo the capabilities of AI music generation. In a video, musicians including Marc Rebillet say AI has revolutionized the practice of sampling songs to create new sounds.

Wow! The Project Astra demo is incredible and gets big applause from the crowd.

We're watching the new Project Astra AI agent, which is powered by Gemini, in action.

Its spatial understanding and memory are pretty impressive, drawing the biggest applause so far of the keynote.

In the demo, a Google employee walks around the DeepMind office in London, which Project Astra recognizes, and asks Gemini if it remembers where she left her glasses.

Project Astra replies that she left them next to an apple on her desk in the office. She walks over there and, lo and behold, there are her glasses by the apple on her desk.

The AI agent "remembered" the glasses in the background of previous frames from the phone's live video feed.

(If Google's AI agent can help regular people never lose their glasses ever again — or their keys or other stuff at home or at work — then I think we might have a killer app.)

Some big news: "Project Astra"

Google DeepMind boss Demis Hassabis announces Project Astra, an AI agent that can respond quickly without lag, which was an engineering challenge.

The new AI agents can continually encode video and have better voice intonation.

The pace and quality of the interaction will feel more natural.

He rolls a demo of the AI agent.

DeepMind boss Demis Hassabis takes the Google I/O stage for the first time ever.

Google DeepMind has been building AI systems that can do a bunch of amazing things, the executive says, including medical research and exploration into drug discovery.

Hassabis announces Gemini 1.5 Flash, which is a lighter-weight AI model than 1.5 Pro that is designed to be lower cost when scaling.

Flash is all about lower latency, which is important for applications powered by AI.

Next up, AI "agents"

The idea of AI agents is to handle the busy work and be actually useful in your daily life, helping you complete tasks.

Google's CEO talks about how AI agents can help handle all the painful parts of returning shoes after you decide you aren't keeping an order.

"We're thinking hard about how to do it in a way that's private and secure," Pichai says.

Google DeepMind will share more, Pichai says.

Now, we're seeing a NotebookLM demo of a new feature, Audio Overview.

We're seeing how the AI can help explain concepts like gravity to a youngster by creating an "age appropriate" basketball example.

The demo shows the "real opportunity" with multimodality, Pichai says.

Gemini will help you sift through your email and give the rundown on meetings

The AI assistant will give you the highlights of Google meetings, summarize emails, and craft responses.

It'll be available today in Workspace Labs.

Google's CEO talks up Gemini 1.5 Pro, and its longer AI token context windows.

Its Gemini 1.5 Pro model offers a 1 million token context window, and a video is playing showcasing how developers have taken advantage of the more complex work that's possible.

"1 million tokens is opening up entirely new possibilities," Pichai says.

Pichai says Gemini 1.5 Pro is rolling out to all developers globally.

Google's CEO also announces that it's expanding to an even longer 2 million token context window.

Next up: Google Photos

Pichai says "Ask Photos" powered by Gemini is coming to Google Photos, which can help summarize photo memories and pull information from them.

You can ask Google Photos "what's my license plate number" and it'll recognize a car that appears often, and tell you that license plate number.

Google CEO Sundar Pichai takes the stage, saying the company is fully in its "Gemini era."

Google's main AI model is called Gemini.

Pichai recaps Gemini 1.5 Pro and how powerful it is.

Today, "more than 1.5 million developers" use Gemini models across Google's tools, Pichai says.

Sundar talks about Google's most important single product: Search. It's the most profitable business on the internet, so he quite rightly goes to this early. The company has been carefully weaving generative AI features into Search in the past year. But that's only been a side test. Now, the big move is happening.

Pichai announced AI Overviews, a new genAI overlay on search results. It's partly based on the Search Generative Experience, which was Google's AI test run for Search in the AI era.

A fully revamped "AI Overviews" is rolling out in the US and other countries soon, Pichai says.

Ok, here we go! Google is showing off a splashy video about how it's making AI helpful.

The Google I/O keynote is officially kicking off, folks.

Musician and YouTuber Marc Rebillet is onstage as a warm-up act, using AI to mix some new tunes.

The musician, who is popular on TikTok and YouTube, shows off how a DJ could use Google's MusicFX DJ AI to switch up a track.

"Something like that," he says. "The machine is good. It's helping you."

"Entirely unscripted, nothing planned," he says.

For those who want to watch the keynote, there's also a livestream.

The keynote is expected to last around 2 hours, but we'll keep track of the big news in our live blog so you don't have to.

Google says the music in the background as we wait for things to kick off is generated by its AI models.
