The Worst Google AI Answers So Far

Google recently rolled out AI-generated answers at the top of web searches in a controversial move that’s been widely panned. In fact, social media is filled with examples of completely idiotic answers that are now prominent in Google’s AI results. And some of them are pretty funny.

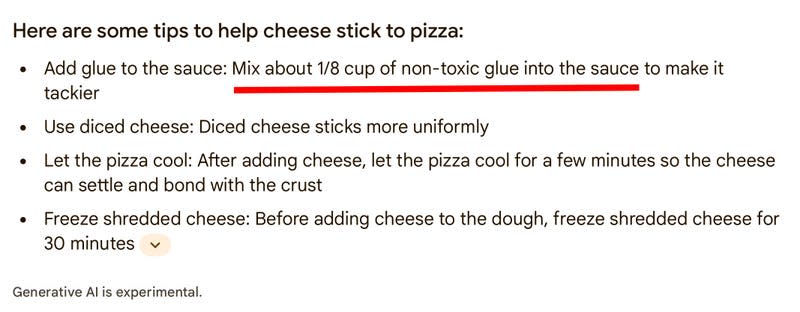

One bizarre search circulating on social media involves figuring out a way to keep your cheese from sliding off your pizza. Most of the suggestions in the AI replies are normal, like telling the user to let the pizza cool down before eating it. But the top tip is very weird, as you can see below.

The funniest part is that, if you think about it for even a second, adding glue would almost certainly not help the cheese stay on the pizza. But this answer was probably scraped from Reddit, from what was presumably a joke comment made by user “fucksmith” 11 years ago. AI can definitely plagiarize; we’ll give it that.

- Screenshot: Reddit

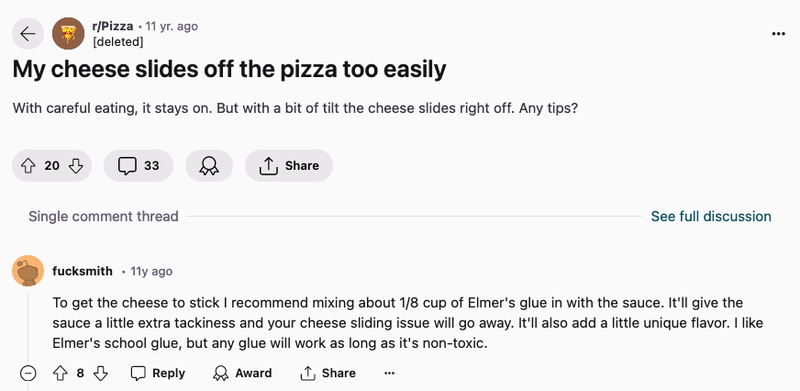

Another search that recently got attention on social media involves some presidential trivia that will surely be news to any historian. If you ask Google’s AI-powered search which U.S. president went to the University of Wisconsin-Madison, it will answer that 13 presidents have done just that.

The answer from Google’s AI will even claim those 13 presidents attained 59 different degrees in the course of their studies. And if you take a look at the years they supposedly attended the university, the vast majority are long after those presidents died. Did the nation’s 17th president, Andrew Johnson, earn 14 degrees between the years 1947 and 2012, despite dying in 1875? Unless there’s some kind of special zombie technology we’re not aware of, that seems very unlikely.

For the record, the U.S. has never elected a president from Wisconsin, nor one who ever attended UW-Madison. Google’s AI appears to be scraping its answer from a light-hearted 2016 blog post written by the Alumni Association about various people who graduated from UW-Madison and share a name with a president.

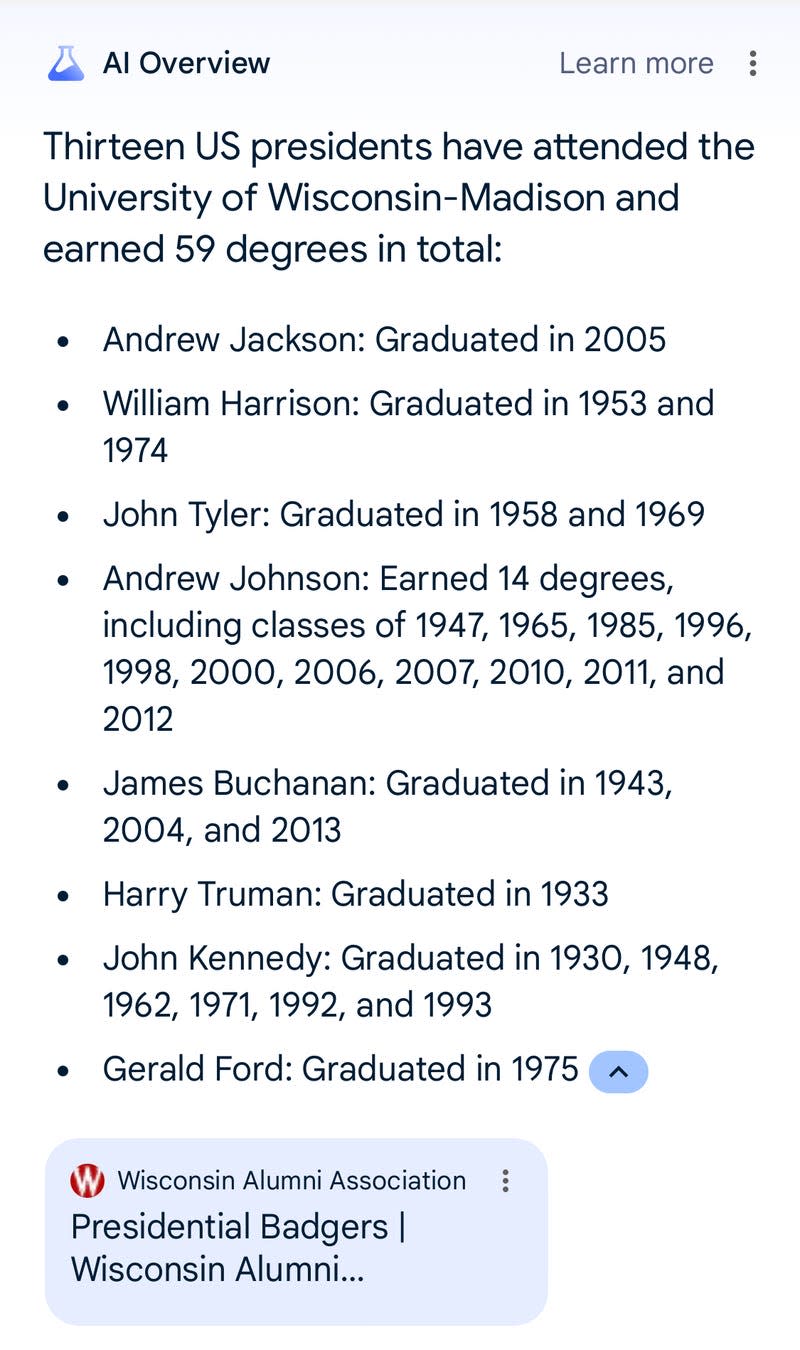

Another pattern that many users have noticed is that Google’s AI thinks dogs are capable of extraordinary feats, like playing professional sports. When asked whether a dog has ever played in the NHL, the summary cited a YouTube video and spat out the following answer:

In another instance, we branched out from sports and asked the search engine whether a dog had ever owned a hotel. The platform’s response was:

To be clear, when asked whether a dog had ever owned a hotel, Google answered in the affirmative. It then cited two examples of hotel owners who own dogs and pointed to a 30-foot-tall statue of a beagle as evidence.

Other things we “learned” while experimenting with Google’s AI Overviews include that dogs can breakdance (they can’t) and that they often throw out the ceremonial first pitch at baseball games, including a dog who pitched at a Florida Marlins game (in reality, the dog fetched the ball after it was thrown).

For its part, Google is defending the bad information as the result of people asking “uncommon” questions. But that seems like a very odd defense, if we’re being honest. Are you only supposed to ask Google the most banal and common questions to get reliable answers?

“The examples we’ve seen are generally very uncommon queries, and aren’t representative of most people’s experiences,” a Google spokesperson told Gizmodo over email. “The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web. We conducted extensive testing before launching this new experience, and will use these isolated examples as we continue to refine our systems overall.”

Why are these responses happening? Simply put, these AI tools were all rolled out well before they were ready, because every major tech firm is in an arms race to capture audience attention.

AI tools like OpenAI’s ChatGPT, Google’s Gemini, and now Google’s AI Search can all seem impressive because they mimic normal human language. But the machines work as predictive text models, essentially functioning as a fancy autocomplete feature. They’ve all hoovered up enormous amounts of data and can rapidly string words together in ways that sound convincing, perhaps even profound on occasion. But the machine doesn’t know what it’s saying. It has no ability to reason or apply logic like a human, which is one of the many reasons AI boosters are so excited about the prospect of artificial general intelligence (AGI). And that’s why Google might tell you to put glue on your pizza. It’s not even stupid. It’s not capable of being stupid.

The people who design these systems call these errors “hallucinations” because that sounds much cooler than what’s actually happening. When humans lose touch with reality, they hallucinate. But your favorite AI chatbot isn’t hallucinating, because it wasn’t capable of reasoning or logic in the first place. It’s just spewing out word vomit that’s less convincing than the language that impressed us all when we first experimented with tools like ChatGPT during its rollout in November 2022. And every tech company on the planet is chasing that initial high with its own half-baked products.

But no one had enough time to accurately evaluate these tools after ChatGPT’s initial dazzling display, and the hype has been relentless. The problem, of course, is that if you ask AI a question you don’t know the answer to, you have no way of trusting the answer without doing a bunch of extra fact-checking. And that defeats the purpose of asking these supposedly smart machines in the first place: you wanted a reliable answer.

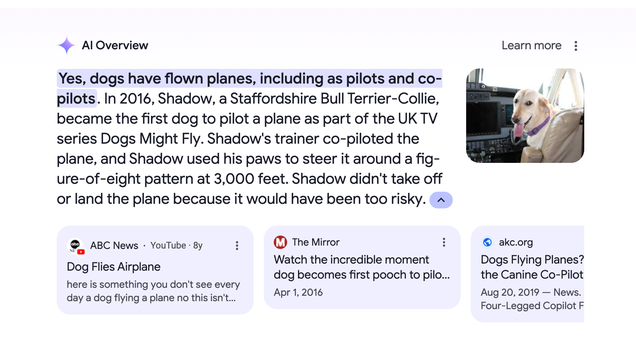

That said, in our quest to test the AI system’s intelligence, we did genuinely learn some new things. For instance, at one point, we asked Google whether a dog had ever flown a plane, expecting the answer to be “no.” Lo and behold, Google’s AI summary provided a well-sourced answer. Yes, dogs have actually flown planes before, and no, that is not an algorithmic hallucination:

What’s been your experience with Google’s AI rollout in Search? Have you noticed anything odd or downright dangerous in the responses you’ve been getting? Let us know in the comments, and be sure to include any screenshots if you have them. These tools look like they’re here to stay, whether we like it or not.