Here’s how Apple could change your iPhone forever

Over the past few months, Apple has released a steady stream of research papers detailing its work with generative AI. So far, Apple has been tight-lipped about what exactly is cooking in its research labs, while rumors circulate that it is in talks with Google to license Google's Gemini AI for iPhones.

But there have been a couple of teasers of what we can expect. In February, an Apple research paper detailed an open-source model called MLLM-Guided Image Editing (MGIE) that is capable of media editing using natural language instructions from users. Now, another research paper on Ferret UI has sent the AI community into a frenzy.

The idea is to deploy a multimodal AI (one that understands text as well as images and other media) to better understand the elements of a mobile user interface and, most importantly, to deliver actionable tips. That’s a critical goalpost as engineers race to move AI beyond its current “parlor trick” status and make it genuinely useful to the average smartphone user.

In that direction, the biggest push is to unplug the generative AI capabilities from the cloud, end the need for an internet connection, and deploy every task on-device so that it’s faster and safer. Take, for example, Google’s Gemini, which is running locally on the Google Pixel and Samsung Galaxy S24 series phones – and soon, OnePlus phones – and performing tasks like summarization and translation.

What is Apple’s Ferret UI?

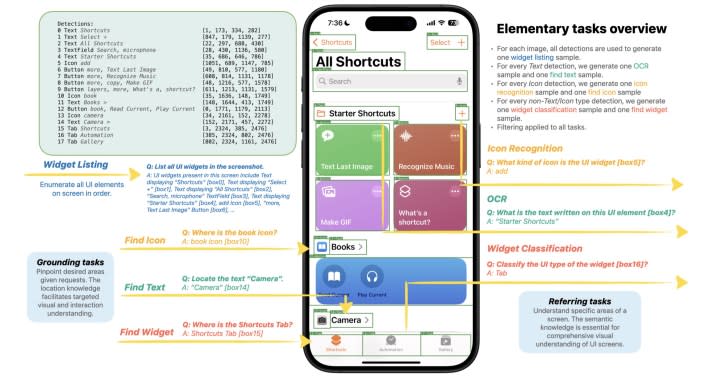

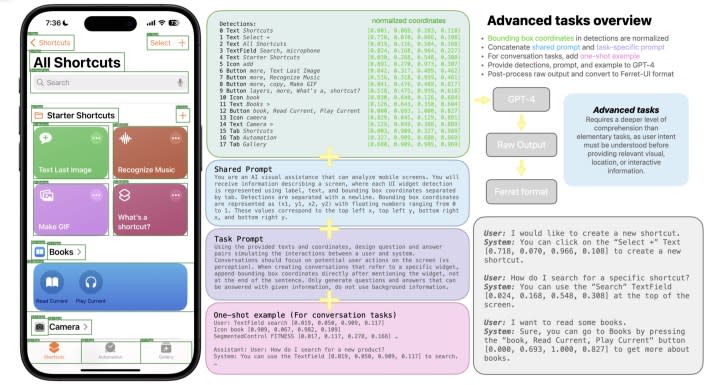

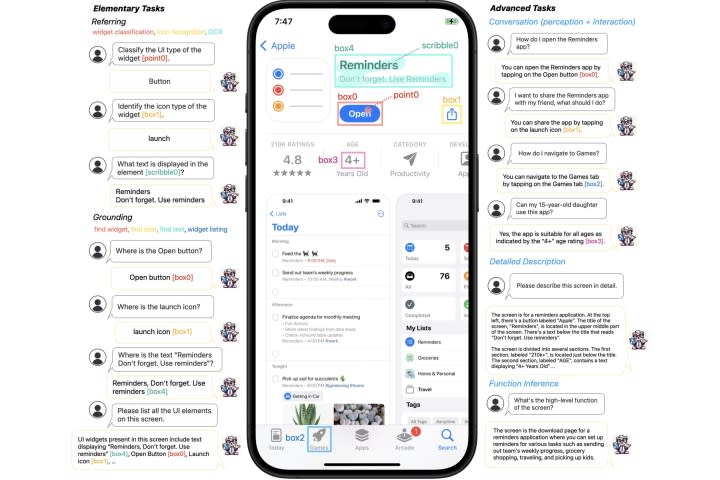

With Ferret-UI, Apple seemingly aims to blend the smarts of a multimodal AI model with iOS. Right now, the focus is on more “elementary” chores like “icon recognition, find text, and widget listing.” However, it’s not just about making sense of what is being displayed on an iPhone’s screen, but also about understanding it logically and answering contextual queries posed by users through its reasoning capabilities.

The easiest way to describe Ferret UI’s capabilities is as an intelligent optical character recognition (OCR) system powered by AI. “After training on the curated datasets, Ferret-UI exhibits outstanding comprehension of UI screens and the capability to execute open-ended instructions,” notes the research paper. The team behind Ferret UI has tuned it to accommodate “any resolution.”

You can ask questions like “Is this app safe for my 12-year-old kid?” while surfing through the App Store. In such situations, the AI will read the age rating of the app and will accordingly provide the answer. How the answer would be served – text or audio – isn’t specified, as the paper doesn’t mention Siri or any virtual assistant, for that matter.

Apple didn’t fall too far from the GPT tree

But the ambitions go much further. Ask it “How can I share the app with a friend?” and the AI will highlight the “share” icon on the screen. Of course, it will give you a gist of what’s flashing on the screen, but it will also logically analyze the visual assets on it, such as boxes, buttons, pictures, and icons. That’s a massive accessibility win.

If you’d like to hear the technical terms, well, the paper refers to these capabilities as “perception conversation,” “functional inference,” and “interaction conversation.” One of the research paper’s descriptions actually sums up the Ferret UI possibilities perfectly, describing it as “the first MLLM designed to execute precise referring and grounding tasks specific to UI screens, while adeptly interpreting and acting upon open-ended language instructions.”

As a result, it can describe screenshots, tell what a particular asset does when tapped, and discern whether something on the screen responds to touch input. Ferret UI is not solely an in-house effort, either: the training data for its reasoning and description tasks was generated with OpenAI’s GPT-4, the model that powers ChatGPT along with a whole bunch of other conversational products out there.

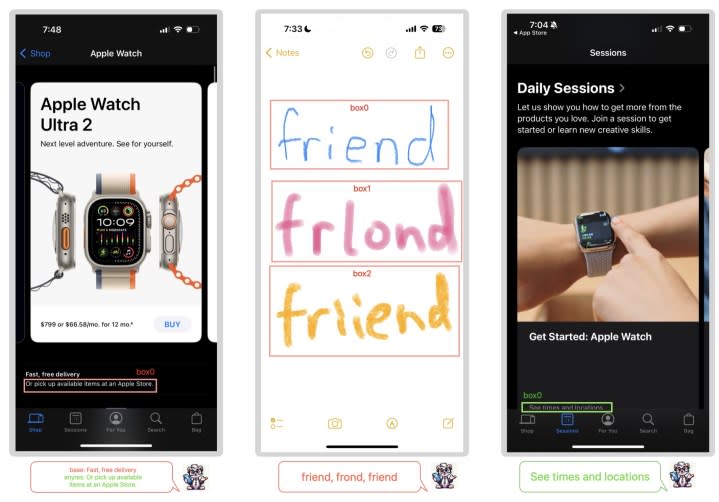

Notably, the particular version proposed in the paper is suitable for multiple aspect ratios. Beyond its on-screen analysis and reasoning capabilities, the paper also describes a few advanced capabilities that are pretty amazing to envision. For example, in the screenshot below, it seems capable not only of reading handwritten text but also of predicting the correct version of a user’s misspelled scribble.

It is also capable of accurately reading text that is cut off at the top or bottom edge and would otherwise require a vertical scroll. However, it’s not perfect. On occasion, it misidentifies a button as a tab and misreads assets that combine images and text into a single block.

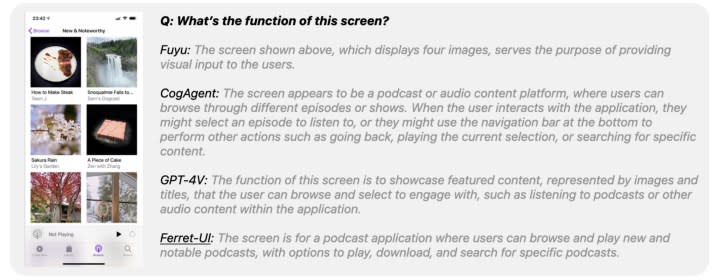

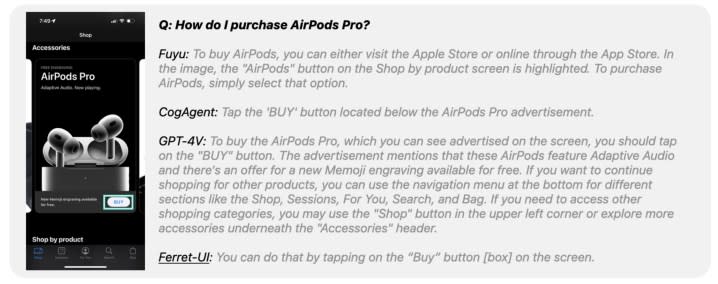

When pitted against OpenAI’s GPT-4V model, Ferret UI delivered impressive results on conversation interaction tasks involving questions about on-screen content. As the comparison shows, Ferret UI prefers concise, straightforward answers, while GPT-4V writes more detailed responses.

The choice is subjective, but if I were to ask an AI, “How do I buy the slipper appearing on the screen?” I would prefer it to give me the right steps in as few words as possible. And Ferret UI performed admirably not just at keeping things concise, but also at accuracy: at the aforementioned task, it scored 91.7% on conversation interaction outputs, while GPT-4V was only slightly ahead at 93.4%.

A universe of intriguing possibilities

Ferret UI marks an impressive debut of AI that can make sense of on-screen content. Now, before we get too excited about the possibilities here, we are not sure how exactly Apple aims to integrate this with iOS, or if it will materialize at all, for multiple reasons. Bloomberg recently reported that Apple was aware of being a laggard in the AI race, and that is quite evident from the lack of native generative AI products in the Apple ecosystem.

First, the rumors of Apple even considering a licensing deal with Google (for Gemini) or OpenAI are a sign that Apple’s own work is not on par with the competition’s. In such a scenario, tapping into the work Google has already done with Gemini (which is now trying to replace Google Assistant on phones) would be wiser than pushing a half-baked AI product on iPhones and iPads.

Apple clearly has ambitious ideas and continues to work on them, as demonstrated by the experiments detailed across multiple research papers. However, even if Apple manages to fulfill Ferret UI’s promises within iOS, it would still amount to a superficial implementation of on-device generative AI.

Still, functional integrations, even if they are limited to Apple’s own preinstalled apps, could produce amazing results. For example, let’s say you are reading an email while the AI has already assessed the on-screen content in the background. As you read the message in the Mail app, you can ask the AI with a voice command to turn it into a calendar entry and save it to your schedule.

It doesn’t necessarily have to be a super-complex multistep chore involving more than one app. Say you’re looking at a restaurant’s Google Search knowledge panel: by simply saying “call the place,” you could have the AI read the on-screen phone number, copy it to the dialer, and start a call.

Or, let’s say you are reading a tweet about a film coming out on April 6, and you tell the AI to create a shortcut directed at the Fandango app. Or, a post about a beach in Vietnam inspires your next solo trip, and a simple “book me a ticket to Con Dao” takes you to the Skyscanner app with all your entries already filled in.

But all of this is easier said than done and depends on multiple variables, some of which might be out of Apple’s control. For example, webpages riddled with pop-ups and intrusive ads could make it nigh impossible for Ferret UI to do its job. On the positive side, iOS developers generally adhere closely to the design guidelines laid down by Apple, so Ferret UI would likely work its magic more effectively in iPhone apps.

That would still be an impressive win. And since we’re talking about on-device implementation baked tightly at the OS level, it is unlikely that Apple would charge for the convenience, unlike mainstream generative AI products such as ChatGPT Plus or Microsoft Copilot Pro. Would iOS 18 finally give us a glimpse of a reimagined iOS supercharged with AI smarts? We’ll have to wait until Apple’s Worldwide Developers Conference 2024 to find out.