What will AI really mean for your everyday Android and search experience?

At Google I/O the big G said we're at "a once-in-a-generation moment" where AI is going to reinvent what phones can do. And today's announcement of Google AI updates to Android, it reckons, is a big sign of where this is all going.

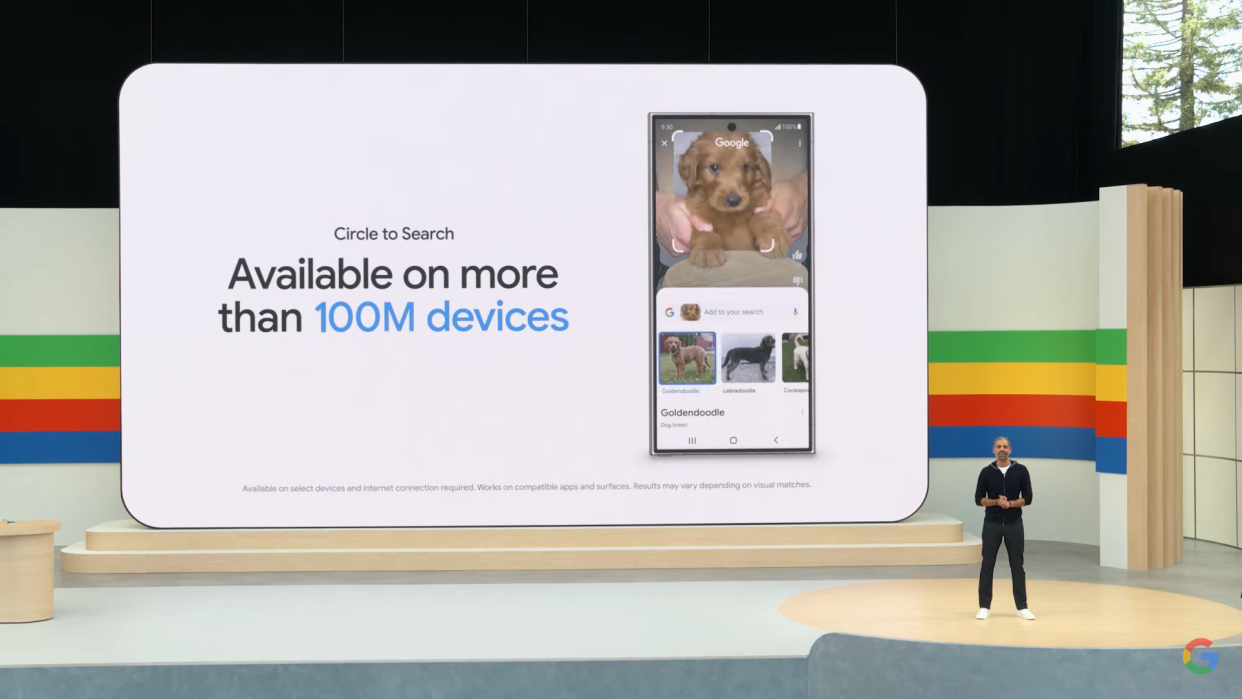

We've seen some of these features already, such as Circle to Search, which is rolling out more widely and should reach double the current 100 million users by the end of 2024.

But it's Gemini on Android that's really interesting here, because you'll be able to use its overlay from within all kinds of Android apps: dropping AI-generated images into Gmail or Google Messages, asking for more information about the currently playing YouTube video, pulling out data from PDFs (if you have the Advanced version) and so on. And the AI overview will enable you to drill down through answers, using simpler language or expanding the results to help you understand them better.

That's pretty impressive, but Google says better is coming – and it's coming soon.

What's the plan-o for Gemini Nano?

Google is particularly excited about what it calls Gemini Nano with Multimodality. That means the smart assistant won't just be processing text input but also more contextual information: sights, sounds and spoken language. That'll be implemented later this year in TalkBack to assist people with blindness and low vision, and in your phone app to help detect scams.

Google is also going big on AI in search. The promise here is that you'll be able to ask Google to make you a meal plan for X people, or plan a trip to X destination based on the best recommendations, or get instant and accurate answers without having to visit any of the places that provide those answers. It isn't clear what incentive publications will have to provide those answers if Google isn't sending them any visitors, but Google promises that "as we expand this experience, we’ll continue to focus on sending valuable traffic to publishers and creators".

I like the idea, described by Google, where you can point your Android camera at a thrift shop turntable and it'll tell you why the stylus is drifting and what you need to do about it. But that depends on Google having high-quality, helpful and accurate content to draw from. We'll see for ourselves: US users will get access to search with video in Search Labs later this year, with more of us getting to try the tools out in the following months.