Twitter Takes a Step Toward Banning Deepfakes—but It Isn't Big Enough

Twitter released a new policy on manipulated media this week, promising to label some "deceptive" images and videos and pull down others.

Tweets containing fake images and videos will be labeled beginning in March.

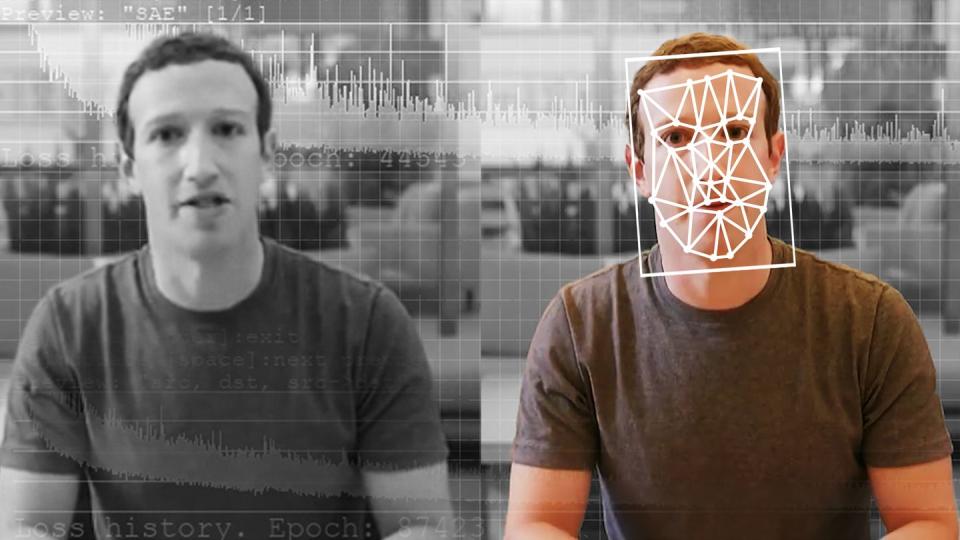

While the ban is a step in the right direction, it isn't comprehensive enough to pull down every deepfake, including a widely circulated video of Nancy Pelosi appearing to drunkenly slur her words.

Ahead of the 2020 presidential election, when manipulated media like deepfakes are expected to be most rampant, Twitter has introduced some long-overdue guidelines for handling what it considers to be "deceptive" media. The move comes just over three months after Twitter first asked users for feedback on a new set of rules meant to address manipulated or synthetic content.

Seventy percent of users said Twitter "taking no action" on misleading altered content would be unacceptable. Ninety percent of respondents, meanwhile, said placing labels next to manipulated media would be acceptable. Still, just a little over half of the respondents said they believed Twitter should remove the posts entirely, showing an appetite for transparency.

But just as Facebook did last month when it openly banned manipulated media as part of a new policy to protect users from disinformation ahead of the election, Twitter left some massive holes in the new rules, allowing some content that could be considered a deepfake to live on the social media platform.

Under the new policy, a viral deepfake video of Nancy Pelosi, which showed the Democratic Speaker of the House slurring in what appears to be a drunken soliloquy, would not be removed from the website at all, despite the video having been altered with artificial intelligence.

Notably, Facebook's new manipulated media policy also doesn't cover that video, which raises the question: What kinds of AI-altered content can we expect to continue seeing on social media during the election cycle and beyond? What will slip through the cracks of these broad policies?

What's Banned?

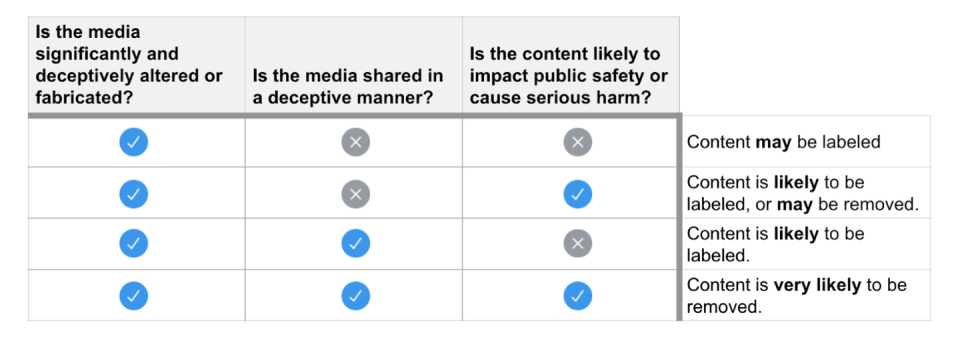

Right now, the litmus test for what Twitter considers to be "synthetic or manipulated" media is three-pronged, and each part includes a few key factors that Twitter will take into consideration.

1) Is the media synthetic or manipulated?

Has the content been "substantially edited" in a manner that fundamentally alters its composition, sequence, timing, or framing?

Has any visual or auditory information (like video frames, overdubbed audio, or modified subtitles) been added or removed?

If the media depicts a real person, has it been fabricated or simulated?

2) Is the media shared in a deceptive manner?

Twitter will consider the context in which media has been shared. If the post could result in "confusion or misunderstanding," or if it suggests "deliberate intent to deceive" people about the nature or origin of the content, like saying that a deepfake video is actually real footage, Twitter considers it an aggravating factor.

In addition, Twitter will assess the following:

Text accompanying the tweet or within the media itself

Metadata associated with the media

Information on the profile that shared the media

Websites linked to in the profile of the person sharing the media, or in the tweet sharing the media

3) Is the content likely to impact public safety or cause public harm?

Tweets with manipulated or synthetic media are subject to removal from the site if they're likely to cause harm in any of the following ways:

Threats to the physical safety of a person or a group of people

Risk of mass violence or widespread civil unrest

Threats to the privacy of a person or group, or to their ability to freely express themselves or participate in civic events, such as stalking or unwanted obsessive attention; targeted content that includes tropes or material that aims to silence; or voter suppression/intimidation

What Will Actually Be Removed?

If the post in question contains a video or image that meets any of the above criteria, Twitter will use what looks like a scoring rubric to determine next steps. In some cases, Twitter will pull the post from its website and app entirely. It sees other scenarios as more of a gray area and will simply label those posts to call attention to the fake media.

"...We may label Tweets containing synthetic and manipulated media to help people understand the media’s authenticity and to provide additional context," the company wrote in a blog post announcing the rules.

Under this framework, the Pelosi video, along with a similar viral video depicting Joe Biden making what appear to be racist remarks, would be labeled rather than removed.

For tweets like these, Twitter says, it will do one (or likely all) of the following:

Apply a label to the tweet

Show a warning to people before they retweet or like the tweet

Reduce the visibility of the tweet on Twitter and/or prevent it from being recommended

Provide additional explanations or clarifications, as available, such as a landing page with more context

Twitter will begin to use these labels on March 5.

In Other Deepfake News

YouTube actually beat both Facebook and Twitter to the punch in decrying manipulated media. Since as far back as 2016, before the last presidential election, YouTube has been keeping an eye (or rather, an algorithm) on deceptive content. Ahead of the Iowa caucuses on Monday, though, the company reiterated that it would not allow election-related deepfakes on the platform, or anything that might confuse people looking to vote or to participate in the 2020 election.

It seems like YouTube has the most robust rules on deceptive media. Keep in mind it was the first major platform to pull down that doctored Pelosi video when it came out.

This week, Google's parent company, Alphabet, also publicly released Assembler, a new tool for spotting phony photos. The tool comes from the company's Jigsaw division, a group of engineers, designers, and policy experts who have come together to fight digital disinformation, censorship, and election manipulation.

It uses image manipulation detectors, technology trained to identify specific types of manipulation, to determine whether images have been doctored and, if so, how.

As for Twitter? The company acknowledges that deepfakes and other manipulated media are problematic and that it won't be easy to find the right balance between freedom of speech and protecting users from disinformation. It's a tango all of the major social media companies must dance.

"This will be a challenge and we will make errors along the way—we appreciate the patience," Twitter wrote. "However, we’re committed to doing this right. Updating our rules in public and with democratic participation will continue to be core to our approach."
