Facebook’s deepfake ban signals aggressive approach ahead of 2020 election

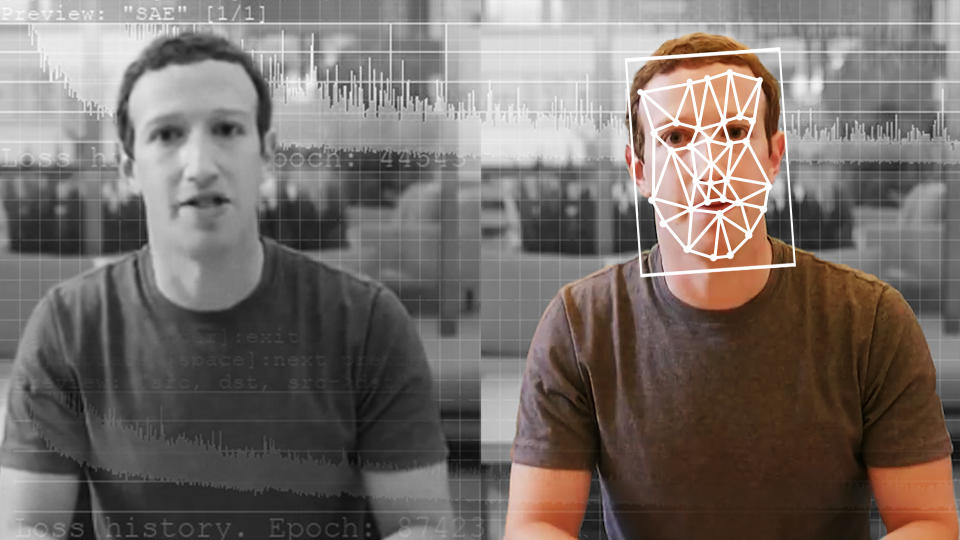

Facebook’s (FB) ban of some so-called deepfake videos, which it announced on Monday, signals an increasingly aggressive approach to policing the site ahead of the U.S. election in November, departing from the company’s long-standing reluctance to directly verify posts and remove those it finds to be false.

The policy is an apparent response to criticism that grew last year after a manipulated video of U.S. House Speaker Nancy Pelosi (D-CA) amassed millions of views on the site. Heightened concerns about false and inauthentic posts on Facebook trace back to the 2016 presidential election, the outcome of which some have attributed to a disinformation campaign on the platform carried out by a Russian intelligence agency.

“People share millions of photos and videos on Facebook every day, creating some of the most compelling and creative visuals on our platform,” Monika Bickert, the head of global policy management, wrote in a blog post on Monday. Her team sets the rules for the site’s 2.4 billion users. “But there are people who engage in media manipulation in order to mislead.”

Until now, Facebook has sought to avoid a direct role in determining the accuracy of posts — an approach company executives reiterated last September in an interview with Yahoo Finance Editor-in-Chief Andy Serwer. Instead, the company opted to work with third-party fact-checkers and, as necessary, label false content and reduce its circulation.

“We don't want to be in the position of determining what is true and what is false for the world,” Bickert told Serwer. “We don't think we can do it effectively.”

“We hear from people that they don't necessarily want a private company making that decision,” she added.

The new policy does not ban all deepfake videos. Prohibited content must meet two criteria: it has been manipulated in a misleading way that the average viewer would not notice, and the manipulation was carried out using artificial intelligence.

The controversial video of Pelosi, which depicted her slurring words as if she were drunk, does not fall under the ban, since it was not generated by artificial intelligence, Recode reported.

Facebook instead placed a warning on the doctored Pelosi video and reduced its circulation. In response to a question from Serwer about altered images on the site, Vice President of Integrity Guy Rosen reaffirmed a general approach to false content that mirrored the company’s handling of that video.

“The goal of our misinformation policy more broadly is to find the things that are not what they actually make out to be, that are false, and ensure that we put the right warnings on them, and we make sure that they don't go viral on Facebook,” Rosen said.

As the 2020 U.S. election approaches, the deepfake ban appears to shift the company toward a proactive approach to verifying and, if necessary, removing videos.

In the interview with Yahoo Finance, Rosen said the company had come a long way in addressing misinformation since the 2016 election but must continually monitor and address new threats.

“We've already made a lot of progress on misinformation,” said Rosen, who oversees the development of products that identify and remove abusive content on the site.

“There's always going to be continued challenges,” Rosen added. “And it is our responsibility to make sure that we are ahead of them and that we are anticipating what are the next kind of challenges that bad actors are going to try to spring on us.”

Max Zahn is a reporter for Yahoo Finance. Find him on Twitter @MaxZahn_.
