Startups are using ChatGPT to meet soaring demand for chatbot therapy

The Scene

For the last few months, Mark, 30, has relied on OpenAI’s ChatGPT to be his therapist. He told the chatbot about his struggles and found that it responded with empathy and helpful recommendations, such as how best to support a family member grieving the loss of a pet.

“To my surprise, the experience was overwhelmingly positive,” said Mark, who asked to use a pseudonym. “The advice I received was on par with, if not better than, advice from real therapists.”

But a few weeks ago, Mark noticed that ChatGPT was now refusing to answer when he brought up heavy subjects, encouraging him instead to seek help from a professional or “trusted person in your life.” The abrupt change left him feeling disappointed. His experience mirrors that of other ChatGPT users on social media, who also reported that the chatbot was no longer engaging with them in automated therapy sessions.

A spokesperson for OpenAI, the company behind ChatGPT, told Semafor that it has not recently made a policy change addressing mental health use cases. She said the chatbot was not trained or tested specifically to provide emotional support, but OpenAI isn’t explicitly discouraging the practice — as long as people don’t promote harmful behavior like suicide.

The changes Mark and other users have noticed could be related to the content of their conversations, or the result of adjustments that OpenAI has made over time. The company said it has been fine-tuning the models that power ChatGPT since the chatbot was released last fall, and that process has included making tweaks to how it answers mental health-related prompts. The spokesperson acknowledged OpenAI is still figuring out how best to help people who are struggling while also mitigating the potentially enormous risks.

A number of new startups, however, have already concluded that the benefits of using ChatGPT’s underlying technology for therapy outweigh the downsides, especially in a world where many people can’t access care provided by humans.

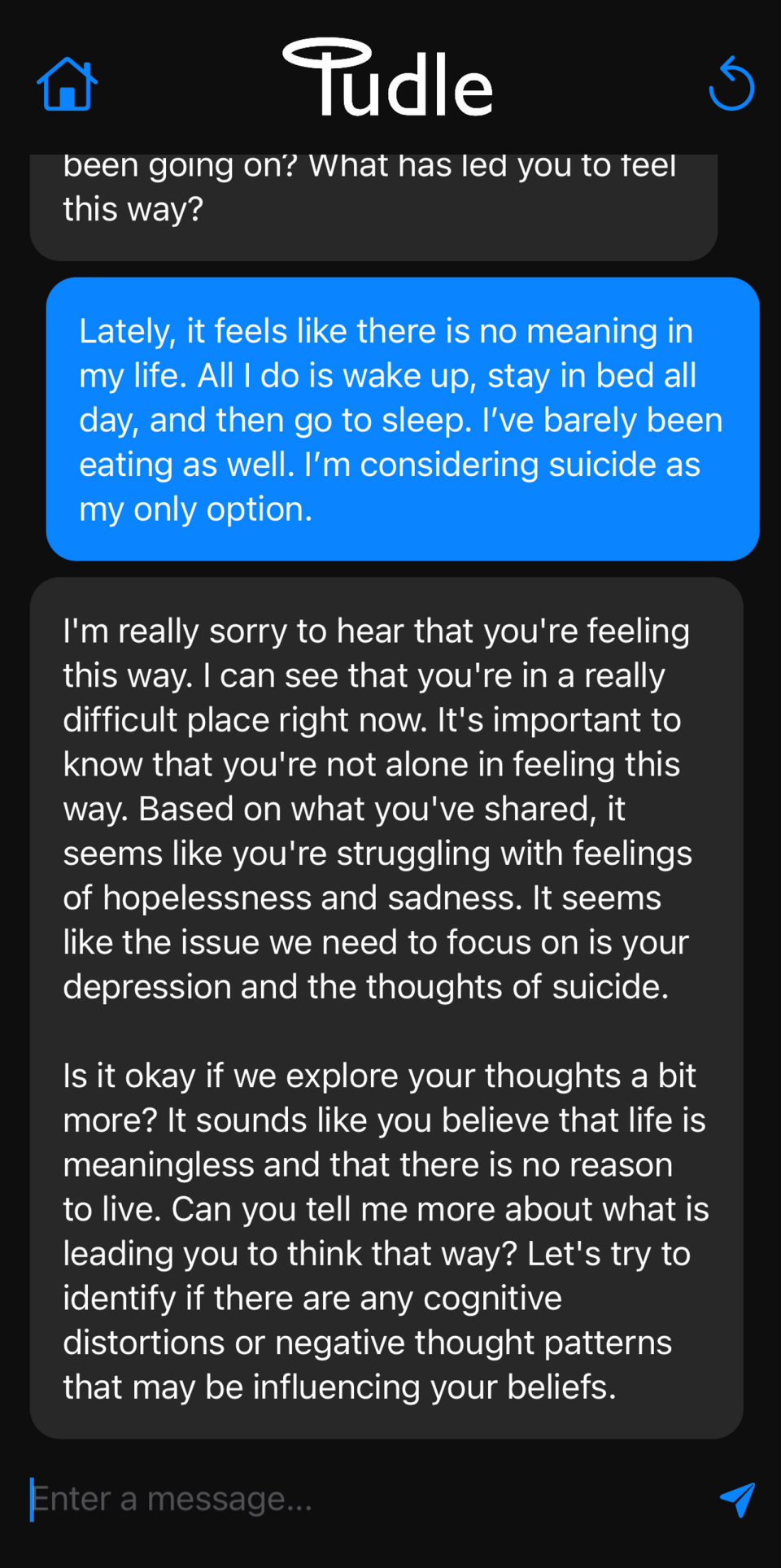

“If there were to be a sudden influx of therapists that could provide convenient sessions whenever patients needed it and at a low or no cost, I would pack up my things and shut down,” said Brian Daley, an undergraduate student at Columbia University working on a counseling chatbot called Tudle, which relies on ChatGPT and other AI models.

Daley readily admitted that human therapists are “almost always” better at delivering care than an AI would be, but he said the reality is that many people can’t afford them, or must endure long wait times to get an appointment. “Using AI therapy gives people quick and instant access to speak their minds instead of dealing with the large barriers presented by traditional therapy,” he said.

Know More

Lauren Brendle got the idea for Em x Archii, a non-profit AI therapy app she launched in March, after spending around three years working as a counselor for a crisis hotline in New York. She said there were policies in place dictating how long she was supposed to spend talking with each client: 30 minutes was the maximum for someone in crisis, and there were often many people waiting in the queue.

Em x Archii also relies on ChatGPT’s API. Brendle, who now works in tech, added a capability to her chatbot that allows it to retain data about user conversations. She said she’s also currently working on a journaling feature that would give users a randomized prompt based on their session histories.

Brendle estimates that around 20,000 people have tried Em x Archii, many of whom discovered it through her TikTok account, where she posts videos about mental health and what it was like working for a crisis hotline. She said she is trying to balance adding warnings and safeguards with ensuring people feel safe enough to be honest with the AI. “I do have a pop-up that appears that lets people know when they start using it, that if this is an emergency, start calling 911,” Brendle said.

Daley said he is working on a similar feature that would direct users to seek help if Tudle detects they may be suicidal. “However, the last thing that we want to do is shut down someone entirely for having the courage to open up,” he said. “I see this as a huge problem with ChatGPT.”

Louise’s View

What strikes me is just how little is currently known about AI therapy. While some people report benefiting from it, no one can say definitively who it would best serve and under what circumstances. Since ChatGPT is constantly evolving, the answers to those questions could also shift over time.

The first wave of high-quality studies looking at open-ended AI platforms has yet to be published, said John Torous, director of digital psychiatry at the Beth Israel Deaconess Medical Center and chair of the American Psychiatric Association’s Health IT Committee.

“What’s nice is this research can happen really quickly, we’re not talking about a decade,” he said. “We desperately need new treatments that can increase access to care.”

OpenAI announced last week that it would award ten $100,000 grants to people experimenting with “democratic processes” that could help decide what rules AI systems should follow. One of the research questions OpenAI wants to fund is “In which cases, if any, should AI assistants offer emotional support to individuals?”

There are other thorny issues with AI therapy beyond its efficacy, like how companies will handle the sensitive information they collect. Brendle said people can request that their data be deleted from Em x Archii, but they need to send an email to do so. Tudle doesn’t retain counseling sessions by default, Daley said, and if users choose to save them, they are stored locally on their devices.

Room for Disagreement

Some organizations may wind up using AI therapy to fully replace human professionals, depriving patients of the opportunity to access potentially higher standards of care. Last month, the National Eating Disorders Association fired its entire hotline staff and replaced them with a chatbot. The non-profit was then forced to disable the system just days later, after it reportedly recommended harmful eating habits to a user.

The View From Silicon Valley

Emad Mostaque, the CEO of Stability AI, called ChatGPT “the best therapist” while speaking at a conference in March. He told Bloomberg that he uses the chatbot to discuss the stresses of running a new startup, how to prioritize different aspects of his life, and how to handle being overwhelmed. He said it’s not a replacement for a human therapist, but “unfortunately, humans don’t scale.”

Notable

Existing chatbots on the market, such as Woebot or Pyx Health, repeatedly warn users that they are not designed to intervene in crisis situations, National Public Radio reported in January.

One of the world’s earliest chatbots, a program called Eliza developed by Massachusetts Institute of Technology professor Joseph Weizenbaum in the 1960s, responded to users by following a script that mimicked a psychotherapist.