Startup Builds Supercomputer with 22,000 Nvidia H100 Compute GPUs

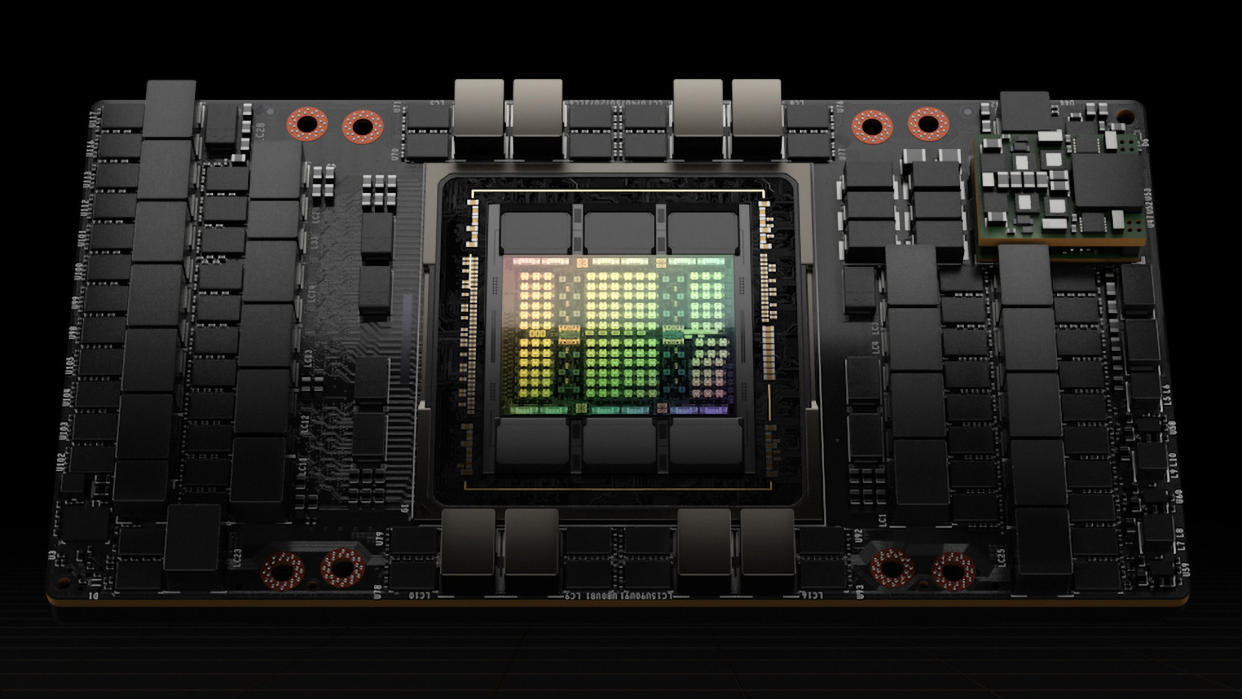

Inflection AI, a new startup founded by one of DeepMind's co-founders and backed by Microsoft and Nvidia, last week raised $1.3 billion in cash and cloud credits from industry heavyweights. The company appears set to use the money to build a supercomputer cluster powered by as many as 22,000 Nvidia H100 compute GPUs, with a peak theoretical compute performance comparable to that of the Frontier supercomputer.

"We will be building a cluster of around 22,000 H100s," said Mustafa Suleyman, a co-founder of both DeepMind and Inflection AI, as reported by Reuters. "This is approximately three times more compute than what was used to train all of GPT-4. Speed and scale are what's going to really enable us to build a differentiated product."

A cluster of 22,000 Nvidia H100 compute GPUs is theoretically capable of 1.474 exaflops of FP64 performance when using the Tensor cores. With general FP64 code running on the CUDA cores, peak throughput is half that: 0.737 FP64 exaflops. By comparison, Frontier, the world's fastest supercomputer, has a peak compute performance of 1.813 FP64 exaflops (double that, 3.626 exaflops, for matrix operations). That would put the planned system in second place for now, though it may drop to fourth once El Capitan and Aurora come fully online.
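The FP64 figures above come from simple multiplication: GPU count times per-GPU peak. The sketch below reproduces them, assuming the SXM version of the H100 (rated at 67 FP64 Tensor teraflops, with CUDA-core FP64 at half that rate); the PCIe card is rated lower. It also compares the CUDA-core figure against Frontier's 1.813 exaflops, which is a like-for-like (non-matrix) comparison.

```python
# Assumed per-GPU FP64 peak for the H100 SXM, in teraflops (Nvidia spec sheet).
H100_FP64_TENSOR_TFLOPS = 67.0
# CUDA-core (non-Tensor) FP64 runs at half the Tensor-core rate.
H100_FP64_VECTOR_TFLOPS = H100_FP64_TENSOR_TFLOPS / 2

# Frontier's peak FP64 (non-matrix) figure, taken from the article.
FRONTIER_FP64_EXAFLOPS = 1.813


def cluster_exaflops(num_gpus: int, tflops_per_gpu: float) -> float:
    """Theoretical cluster peak: GPU count x per-GPU peak, converted from TFLOPS to EFLOPS."""
    return num_gpus * tflops_per_gpu / 1_000_000


GPUS = 22_000
tensor = cluster_exaflops(GPUS, H100_FP64_TENSOR_TFLOPS)
vector = cluster_exaflops(GPUS, H100_FP64_VECTOR_TFLOPS)

print(f"FP64 (Tensor cores): {tensor:.3f} EFLOPS")
print(f"FP64 (CUDA cores):   {vector:.3f} EFLOPS")
# Like-for-like comparison: non-matrix FP64 on both systems.
print(f"Share of Frontier's FP64 peak: {vector / FRONTIER_FP64_EXAFLOPS:.0%}")
```

This prints 1.474 and 0.737 EFLOPS, and shows the planned cluster at roughly 41% of Frontier's non-matrix FP64 peak. Real sustained performance (as measured by benchmarks such as HPL) would be lower than these theoretical numbers on both systems.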

While FP64 performance matters for many scientific workloads, this system will likely be much faster at AI-oriented tasks. Its peak FP16/BF16 throughput is 43.5 exaflops, and FP8 throughput doubles that to 87.1 exaflops; both figures are based on Nvidia's ratings with structured sparsity, so dense throughput is half as high. By comparison, Frontier, powered by 37,888 AMD Instinct MI250X GPUs, has a peak BF16/FP16 throughput of 14.5 exaflops.
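The AI-throughput numbers follow the same arithmetic. This sketch assumes per-GPU ratings from the vendors' spec sheets: 1,979 FP16/BF16 teraflops and 3,958 FP8 teraflops for the H100 SXM (both with 2:4 structured sparsity), and 383 dense FP16/BF16 teraflops for the MI250X. Because the MI250X figure is dense, the sketch also halves the H100 number to compare dense against dense.

```python
# Assumed per-GPU peak tensor throughput, in teraflops (vendor spec sheets).
# The H100 SXM ratings assume 2:4 structured sparsity; dense is half of these.
H100_BF16_SPARSE_TFLOPS = 1979.0
H100_FP8_SPARSE_TFLOPS = 3958.0
# The MI250X has no sparsity rating, so this is a dense figure.
MI250X_BF16_TFLOPS = 383.0


def cluster_exaflops(num_gpus: int, tflops_per_gpu: float) -> float:
    """Theoretical cluster peak in EFLOPS (TFLOPS per GPU x GPU count / 1e6)."""
    return num_gpus * tflops_per_gpu / 1_000_000


inflection_bf16 = cluster_exaflops(22_000, H100_BF16_SPARSE_TFLOPS)
inflection_fp8 = cluster_exaflops(22_000, H100_FP8_SPARSE_TFLOPS)
frontier_bf16 = cluster_exaflops(37_888, MI250X_BF16_TFLOPS)

# Dense-vs-dense comparison: halve the sparse H100 figure.
dense_ratio = (inflection_bf16 / 2) / frontier_bf16

print(f"H100 cluster FP16/BF16 (sparse): {inflection_bf16:.1f} EFLOPS")
print(f"H100 cluster FP8 (sparse):       {inflection_fp8:.1f} EFLOPS")
print(f"Frontier FP16/BF16 (dense):      {frontier_bf16:.1f} EFLOPS")
print(f"Dense BF16 advantage over Frontier: {dense_ratio:.1f}x")
```

The sparse figures only apply to models whose weights have been pruned to the 2:4 pattern, so the dense comparison (about 1.5x Frontier) is arguably the more conservative view of the gap.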

The cost of the cluster is unknown, but since Nvidia's H100 compute GPUs retail for over $30,000 per unit, we expect the 22,000 GPUs alone to cost at least $660 million. Add in the rack servers and other hardware, and the build would account for most of the $1.3 billion in funding.
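A back-of-the-envelope version of the cost estimate is shown below. It treats $30,000 as a lower bound per GPU and ignores volume discounts, servers, and networking. It also compares the total against the full $1.3 billion round, even though part of that round is cloud credits rather than cash.

```python
GPU_COUNT = 22_000
PRICE_PER_GPU_USD = 30_000        # lower bound: H100s retail for "over $30,000"
FUNDING_USD = 1_300_000_000       # total round, including cloud credits

# Minimum spend on GPUs alone (integer math, so the result is exact).
gpu_cost = GPU_COUNT * PRICE_PER_GPU_USD

print(f"GPUs alone: at least ${gpu_cost / 1e6:,.0f} million")
print(f"Share of the funding round: {gpu_cost / FUNDING_USD:.0%}")
```

At list price, the GPUs alone would consume roughly half of the round before any other hardware is counted.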

Inflection AI is currently valued at around $4 billion, about a year after it was founded. Its only current product is a generative AI chatbot called Pi, short for "personal intelligence." Pi is designed to serve as an AI-powered personal assistant, built on generative AI technology akin to ChatGPT, that will support planning, scheduling, and information gathering. Pi communicates with users through dialogue, letting people ask questions and offer feedback. Among other things, Inflection AI has outlined specific user experience objectives for Pi, such as offering emotional support.

At present, Inflection AI operates a cluster of 3,584 Nvidia H100 compute GPUs in the Microsoft Azure cloud. The proposed supercomputing cluster would offer roughly six times the performance of this current cloud-based setup.
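The "roughly six times" figure is just the ratio of GPU counts. This minimal sketch makes that assumption explicit: identical per-GPU performance and perfectly linear scaling, which real training jobs rarely achieve because of communication overhead across a larger cluster.

```python
CURRENT_GPUS = 3_584    # existing H100 cluster in Microsoft Azure
PLANNED_GPUS = 22_000   # planned supercomputer

# Assumes the same GPU model in both clusters and perfectly linear scaling,
# so peak compute grows in direct proportion to the number of GPUs.
speedup = PLANNED_GPUS / CURRENT_GPUS

print(f"Planned vs. current peak compute: {speedup:.2f}x")
```

This prints about 6.14x, an upper bound on the practical gain rather than a measured speedup.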