Glaze 1.0 Modifies Art to Block AI-Generated Imitations

Just as text-based generative AI (GAI) engines like Google SGE can plagiarize writers, image-generation tools like Stable Diffusion and Midjourney can swipe content from visual artists. Bots (or copycat humans) go out onto the open web, grab artists' images and use them as training data without the consent of, or any compensation to, the humans who made them. Users can then enter a prompt asking for a painting or illustration "in the style" of the original artist.

The use of art as training data is already the subject of several lawsuits, with a group of artists currently suing Stability AI, DeviantArt and Midjourney. While we wait for the courts and the law to catch up, a group of researchers at the University of Chicago has developed Glaze. This free tool subtly shifts pixels in an image, making it harder for AI models to learn the artist's style from it. Today, after several months in public beta, Glaze 1.0 has launched.

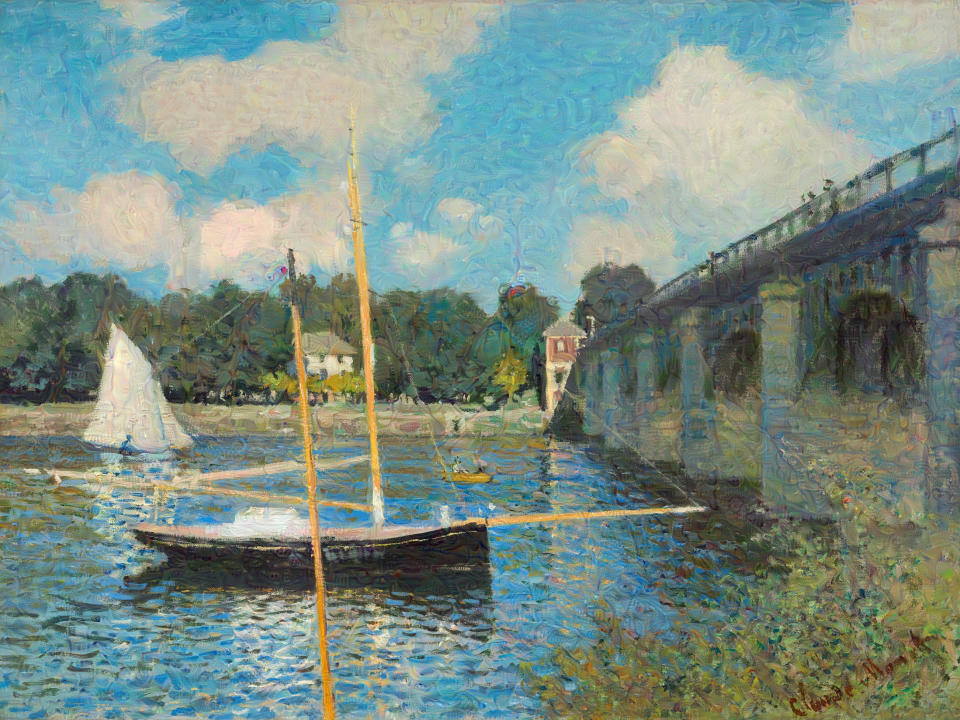

As of today, you can download Glaze 1.0 for free and run it on Windows or macOS (on Apple or Intel silicon), with or without a discrete GPU. I downloaded the GPU version for Windows and tried it on three public-domain paintings by Claude Monet: The Japanese Footbridge, The Houses of Parliament and The Bridge at Argenteuil.

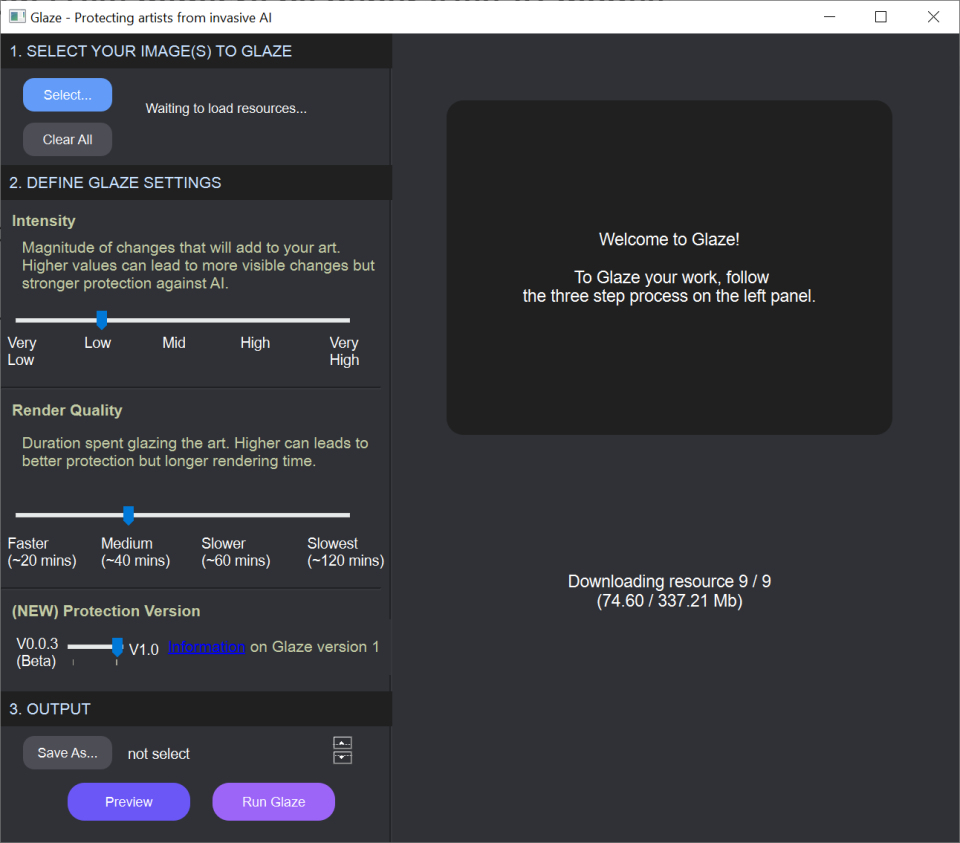

The UI is very straightforward: you select the images, choose an output folder for the modified copies and use two sliders to set the intensity of the changes and the render quality.

Turning up the intensity makes the output image look more noticeably different from the original but offers better protection. Turning up the render quality increases the time it takes to complete the process.

The tool has a "Preview" button, but it doesn't actually work; clicking it brings up a message saying that version 1.0 doesn't support previews. So, to see how your images will look, you have to generate them.

On my desktop, which has an RTX 3080 GPU and a Ryzen 9 5900X CPU, the system took about 90 seconds to "glaze" two images (45 seconds each) at the "Faster" render setting. At the "Slowest" setting, a single image took one minute and 40 seconds.

As part of the process, the tool also evaluates the strength of the protection it has applied, telling you whether it thinks your image has been modified enough to fool AI models. The first several times I processed Monet's "Japanese Footbridge," I received an error saying the image wasn't protected enough (though the tool still output the images). When I increased the intensity to "Very High," the error message went away.

Below you'll see the original Japanese Footbridge, followed by the Very High / Slowest version (best protection) and the Very Low / Fastest render (which generated an error for not being protective enough). The differences, even in the most protective version, are pretty subtle.

Original Japanese Footbridge

Very High / Slowest Glaze Protection

Low / Fastest Glaze Protection

Below, you can see the other two Monet images, with each original followed by its Very High / Slowest version.

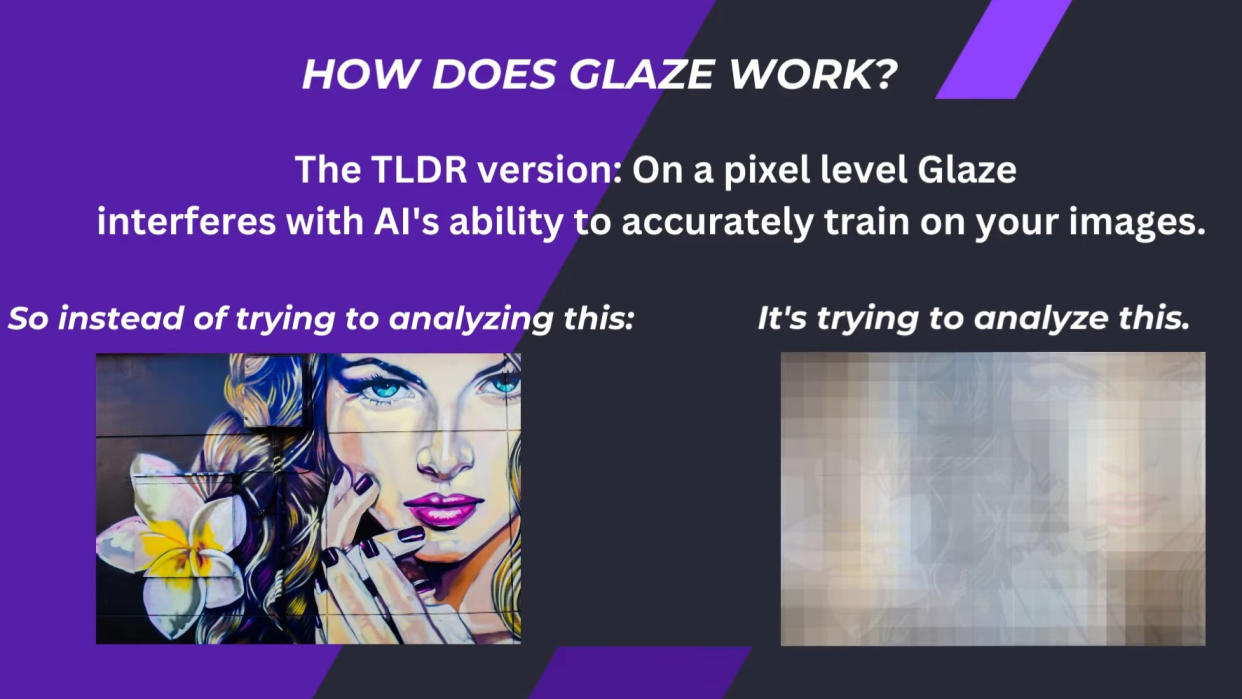

So how exactly does Glaze protect your images? The team behind the tool has made a helpful YouTube video that explains it in detail. The short answer is that, although these pixel shifts look subtle to the human eye, they make it much harder for AI models to pick up on the image's style.

I didn't get to test other types of artwork, so some may look less authentic after modification than my Monet samples did. It's also clear that Glaze is unlikely to protect photographs, because they don't have a distinctive enough visual style to mask.

The result is that bots can still train on the images, but they shouldn't accurately pick up on the artist's style and what makes it unique. For example, an AI trained on the Japanese Footbridge might produce a similar bridge on request, but not with the same brush strokes. I say "might" because I didn't have a reliable way to test glazed images as training data for this story, and, even if I had, a different AI tool might do a better job of copying the style.

Glaze's makers know that AI bots are evolving and that their solution will need to keep evolving too. There's an arms race over artists' images, and, given that AI companies have lots of money and developers, they have the advantage.

However, Glaze is a good step forward for artists who have to show their work online but don't want it to be used to put them out of business.