Erm, should we be worried about AI destroying humanity?

There are many wondrous things that artificial intelligence (AI) is capable of, from writing resumés to offering encyclopedic knowledge on almost anything and literally scanning for cancer. It's hard to deny that these developments in technology have their positives – but there are plenty of downsides too.

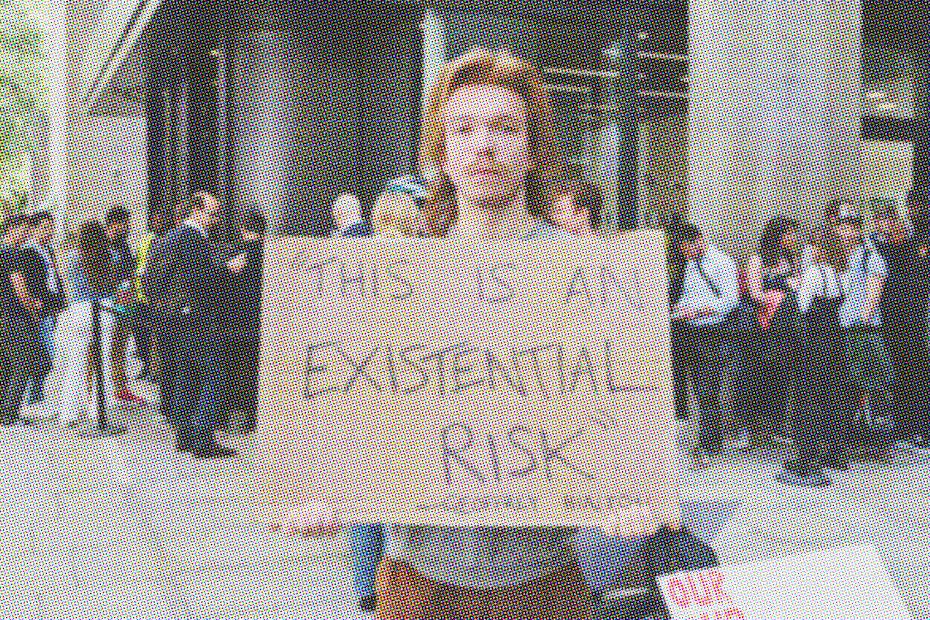

As AI has advanced – extraordinarily quickly in recent years – concerns have grown about its safety and, in particular, whether it'll ever outsmart us humans. In fact, a recent survey from YouGov found that 45% of Brits think robots will be able to develop higher levels of intelligence than humans in the future, and social media is awash with theories ranging from the realistic to the alarming. Some have even taken to the streets in protest, calling on the government and tech giants to do something.

It's certainly a valid concern, especially since OpenAI – the creator of ChatGPT – put out a statement this week expressing similar fears and calling for greater regulation of AI.

"In terms of both potential upsides and downsides, superintelligence will be more powerful than other technologies humanity has had to contend with in the past," a statement from Sam Altman, OpenAI's chief executive, reads. "We can have a dramatically more prosperous future; but we have to manage risk to get there. Given the possibility of existential risk, we can’t just be reactive."

So, what actually is this 'existential risk' when it comes to AI? And should we be worried?

"When it comes to any new technology, a healthy degree of concern and worry never hurts, especially if it triggers important discussions about the risks and downsides of new technologies," says Tomas Chamorro-Premuzic, chief innovation officer at ManpowerGroup and author of I, Human: AI, Automation, and the Quest to Reclaim What Makes Us Unique.

"That said, rather than destroying humanity, I actually think that AI will help us lead more human-centric lives and illuminate the intrinsic need for human qualities," he tells Cosmopolitan UK. "While AI will probably win the battle for IQ against humans, EQ (emotional intelligence – skills such as empathy, kindness, self-awareness and self-control) will remain 100% human qualities."

Chamorro-Premuzic's verdict is that "this isn’t about us versus AI or human vs machine intelligence." Instead, it's about "how we can leverage AI to augment and upgrade our intellectual capabilities."

Other experts in the field argue that AI itself isn't quite the threat we think it is; rather, it's the humans behind it that we should be wary of. "The day when machines surpass humans in intelligence may not be too distant, but it is not the technology itself that endangers humanity," says Dr Mandana Ahmadi, founder and CEO of Alena (a mental health app that harnesses AI and computational neuroscience). "The true peril lies in allowing individuals with hidden agendas to gain control over that intelligence."

Pointing out that "human intelligence has propelled us to the top of the natural hierarchy," Dr Ahmadi adds that "the inherent human traits of self-preservation, greed, entitlement and occasional arrogance can lead to misuse and exploitation."

For that reason, Dr Ahmadi echoes OpenAI's own calls for increased regulation. "It is imperative that we initiate urgent discussions surrounding rules, regulations and transparency regarding AI," she tells Cosmopolitan UK. "We must acknowledge the significance of this issue before it becomes too late."

"The time to act is now," Dr Ahmadi urges. "Just as history has taught us valuable lessons from past crises, we must learn from them and take the necessary steps to navigate this pivotal moment. By embracing open dialogue and thoughtful regulations, we can harness the potential of AI while safeguarding the future of our species."

Thankfully, it seems the government is taking notice. Just yesterday, PM Rishi Sunak and Chloe Smith, the secretary of state for Science, Innovation and Technology, met the chief executives of Google DeepMind, OpenAI and Anthropic to discuss how best to moderate the development of AI and limit the risks of catastrophe.

"The PM and CEOs discussed the risks of the technology, ranging from disinformation and national security, to existential threats," reads an official statement shared after the meeting, marking the first time the British government has acknowledged such an "existential" threat.

"The PM set out how the approach to AI regulation will need to keep pace with the fast-moving advances in this technology," the statement adds, explaining that AI lab leaders will work with the government to ensure safety measures are put in place and, most importantly, that those measures can keep up with the ever-quickening pace of AI development.

Interestingly, when I asked ChatGPT whether AI poses a risk to the future of humanity, its response mirrored the views of all the experts I spoke to. Directly referencing concerns about whether AI systems will surpass human intelligence, as well as the potential misuse of this technology, it said: "The impact of AI on humanity's future will depend on how we navigate these challenges and ensure that AI technologies are developed and deployed in a manner that maximises benefits while minimising risks."

As for whether ChatGPT is concerned about it all, the robot at the end of the line tells me it doesn't have "personal feelings, emotions or worries" – and to be honest, I'm kinda jealous...
