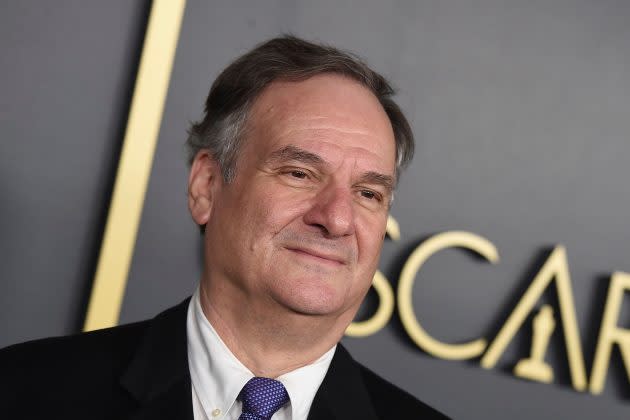

VFX Supervisor Robert Legato Breaks Down The Innovations Behind ‘The Lion King’

The VFX wizard behind such films as Titanic and Apollo 13, Robert Legato has made it his mission in recent years to advance the art form of photorealistic computer-generated animation.

After winning his third Oscar for his work on Jon Favreau’s 2016 film The Jungle Book, Legato reteamed with the director on a CG remake of The Lion King, taking the techniques and technology he’d developed for the earlier film to new heights and a new degree of sophistication.

Referred to now as “live-action animation,” the visual style Legato developed for Jungle Book was immediately appealing to Favreau, because it gave him the ability to tell spectacular, otherworldly stories with a pristine live-action aesthetic.

Filmed on a blue screen stage in Los Angeles, The Lion King was carefully crafted by a team of live-action filmmakers, with state-of-the-art rendering tools and VR technology at their disposal. The latter allowed cast and crew to step into a digital facsimile of the African savannah, with 58 square miles of CG landscapes created for the film.

Even with what he’d achieved on Jungle Book, Legato knew the risks he was taking with The Lion King. At a certain point, he says, his second endeavor with Favreau felt like one “fraught with peril.”

“But that’s what makes moviemaking fun,” he adds, “is rising to the occasion.”

While the work of Legato and his team earned an Oscar nomination last month, it’s also been met with some controversy. Throughout the 2019 awards season, certain critics have raised questions as to how the film should be discussed: is The Lion King an animated film, a VFX triumph, or both?

Below, Legato gives his thoughts on the matter, after breaking down in detail the process of bringing the film to life.

DEADLINE: Coming onto The Lion King, what did you feel you needed to refine, in terms of the techniques and technology you’d developed for The Jungle Book?

ROBERT LEGATO: It was mostly little, incremental fixes on the way fur was rendered, and particularly the way landscapes were rendered, because in Jungle Book, I was not entirely happy with our landscape work. We got it good enough, and used traditional matte paintings and other things that were okay. But if we’re doing a movie about landscapes, we have to fix that. We have to make that as believable as the fur on the creatures.

So, we ended up using the technology that was developed to create fur [for that purpose]. Instead of individual strands of hair that react to light exactly the way they would in real life, we did it with grass, and weeds, and foliage, which we didn’t do in Jungle Book to any degree. If you look for miles, you’re talking millions and millions of blades of grass, so that became a huge software rendering challenge that you now have to fix. “We want that. It looks great, but man…That would take you 500 hours a frame to render.” So, you have to figure out how to do what you like, and then get it so it’s production ready.

Being a cameraman from my early days, the other thing for me was extending the art form of cinematography to a film that’s totally created in the computer—to bring live-action shooting into the environment of this sort of film. So, we used VR. We got rid of the system that we used for Jungle Book, which works great, but [doesn’t allow you to] feel immersed in the landscape. It makes it kind of remote. What isn’t remote is creating a 360-degree set, with animals that you can direct and move around, so we did that.

We created this methodology of getting ourselves into the set, as you do with a full crew. You have the cameramen, and the director, and the visual effects supervisor, and all the various people you need in the environment at the same time, being able to communicate by saying, “I think the shot would be better over here,” or “What if we move the waterfall and add a mountain over here?” And all of a sudden, you start to really excite your intuitive nature of filmmaking, and it just comes to you. It’s magic. So, we created a system that would allow our intuition to be accessed freely in real time: a VR system five or six people can be in at one time.

You needed to see it lit. You needed to be able to move things around in real time, and do very fast iterative changes. You’d tag team on top of a change, to the next change, to the next, and all of a sudden, you’ve done quite a bit of iterative work that would take forever in a normal computer environment. You’d never get to that level unless you did it this way. So, that became a huge innovation for us on the creative end.

Then, we had Caleb Deschanel, a world-class cameraman, who’d be able to walk in and go, “I see the shot. I see where I’d put a dolly. I would use the steadicam for this shot.” The fact that all that was accessible means he could access his many years of experience, and not be inhibited by the technology or science of it, and just start getting into the art.

So, that’s primarily the big innovations. Then, along the way, the more you make something look realistic on the computer…[There’s] ray tracing, which basically is a light simulation. When the light hits something, it bounces off something else, and that bounces off and gets reflected here. It’s so complicated that it looks real, because that’s what we see every day. When you don’t have all those little steps, your brain doesn’t exactly know why it’s off, but it is. The whole thing feels a little artificial, where we went to great pains to make it realistic. MPC [Moving Picture Company] developed technology to allow us to do that, because it’s really computationally heavy, but yields these great results. If we could do a whole film like that, shot after shot, you would tend to convince the audience that it was conventionally photographed—and that conventional photography, it’s of a high order.

DEADLINE: Could you elaborate on the step-by-step process by which you brought the film to life?

LEGATO: We started with this trip to Africa, [which] was our guide to the type of location we wanted to create for each scene. That got translated into the computer and designed, and when it’s designed in a computer, it’s a 360 view. Whatever you put into it is what you see. Once we put it into our VR environment, we could walk around and look 360, and travel from place to place. So now, it’s a real location. It has terrain, it has ups and downs, it has trees that block the sun. Now, you light it, and when you light it, you light it like the sun. So now, you have a facsimile of what it would feel like if it were dead photoreal—which we’re later going to make it, but not at that step.

Then, you know from the script what the actors are saying and doing. You record that, and you have their animal facsimiles that are also in 360. Once you enter the VR world, you can start moving them around, and you direct things to camera. Now that you can see 360, you create a camera that limits the scope of what you’re seeing to a shot, and then you block out to make that shot optimized.

Then, we say, “He should start by the tree. We should walk from point A to point B, and maybe hop on this rock. That might be fun, if he’s a cub and he’s playful.” Then, the other one would fall behind, and they’d have to run and catch up—all the various little bits and pieces that make something come to life. The animator goes off and makes a facsimile version of that, not the final version—because it’s a lot of work to get all the secondary animations, and all the things that make you believe it—but enough to get it so we could photograph it. And we’d photograph shot after shot, like live-action dailies. You’d shoot with a 50[mm lens], and then you’d shoot the reverse with a 25, and you’d do a crane shot and a steadicam, and cut them all together like you would live-action dailies.

You piece it together, and add the voices as you’re doing it. That’s what we’re seeing, when we’re photographing, is their speaking, and we’d basically time our scene to that. Then, when that scene feels good, sometimes Jon would go in and re-record the voices. Because now, we have a little more specificity, and a little more behavior that might change the scene. All of it was very fluid—constantly being directed and redirected, as we were doing it.

Once we have an edit, and the animation tells the story, but it’s not beautiful yet, the animators can go in and perfect every moment and every eye twitch—all the tiny, little touches that make a really good performance.

DEADLINE: Was the voice work for the film done in a traditional kind of booth?

LEGATO: [The actors] were actually on a stage, because it’s all a timing issue. If you have the set built before you record them, then they could see and walk around the set, and even see each other as the animals that they are. We didn’t go quite that far, but they were on the same stage that we photographed everything on, and we had them in VR so they could get a sense of it. Then, they’d take the goggles off, and instead of them being in a booth—not that we didn’t do that, too—they were free to walk around a 50-by-50 foot area. We’d mike them with traditional mikes that you’d have on a regular set, so they were free to just act it out and just feel the moment. We photographed that, and that got interpreted into the animal behavior.

How the animators did Scar, one of my favorite characters in the movie, is to me, such a perfect blend of the actor and the animal that he is portraying. We were all in lockstep with one another, and we made what I’d like to think is a really great and believable villain.

DEADLINE: Which scenes in The Lion King did you find the most challenging, either to conceptualize for the remake or to bring to fruition?

LEGATO: For me, one of them was Mufasa’s death. Now that it’s real looking, how far do you [go] before it becomes hard to watch? You know it’s going to be an emotional scene, so that took iterations of taste. “We think that may be too much, and maybe too graphic.” You know, it was always going to be as tasteful as we could make it. But it is for kids, and you don’t want to completely lose the audience at that point. So, that took a bunch of time to do. Besides being fun to do, the action scenes always take a while, because it’s a shot-by-shot thing that you need to see put together, before you can determine if it’s working or not.

The ones that were hardest for me [involved] keeping this live-action vibe going, and also doing a musical number. Even in a regular musical, it gets into theater quickly because it’s not natural. I mean, you don’t break into song necessarily, unless you find a deft way of doing it. So, there’s a little bit of a leap that you have to make right off the bat, just filmically. And then what is that leap, when you’re trying to continually convince the audience it’s a photoreal, live-action movie? So, that took a bunch of tries—how much Busby Berkeley do we put into it? How much Busby Berkeley do we put into the performers? Maybe there’s a balance between what they’re doing and what the camera’s doing, to make it feel fun, but not take you out of it. It’s more the artistic balance than it was a physically difficult thing to do.

DEADLINE: What you’ve done with The Lion King has proven somewhat controversial, in the sense that the film is hard to fully pin down. In essence, the debate seems to be: is this an animated film, or is it a visual effects spectacle? What do you make of all this?

LEGATO: Well, it’s controversial for the sake of controversy, more than anything else. In point of fact, all visual effects movies are trying to make you believe what you’re seeing, and they all employ the same tools. If we do a car crash, you’re animating a car, you’re animating the damage that happens, you’re animating the fireball. You’re doing all the various things not to look animated, because that would kill what you’re seeing.

What we’re doing is exactly the same thing. So, to call it animation is now steering it into a thing that we strove hard not for it to be. We wanted you to believe that what you’re seeing is real, so I net out that while it’s controversial, we set out to make a live-action movie, using the restrictions of live-action. And you’d have to call everything that uses animation ‘animation,’ to call Lion King animation, as well. All the Marvel movies, anything where the actor’s not really doing the stunt, it’s animated, using the same exact software and everything else.

What’s different in ours, slightly, is that the whole movie was done that way. But the movie was done to create the illusion that what you’re seeing is real.