Scraping or Stealing? A Legal Reckoning Over AI Looms

For nearly 20 years, Karla Ortiz has worked as a concept artist, bringing to life an entire universe of characters in projects like Black Panther, Avengers: Infinity War and Thor: Ragnarok. She’s credited with coming up with the main character design for Doctor Strange in her own blend of impressionist and magic realism styles honed by decades of practice.

She was horrified to learn last year that her work at the forefront of multibillion-dollar franchises is being used to train generative artificial intelligence systems without her knowledge or consent. Imitations of her work are now floating all across the web. Her name has been fed into Midjourney, an AI art generator, more than 2,500 times to create art that looks like hers. She’s been paid nothing.

“You work your entire life to do what you do as a creative, and for a company to profit off that — literally take your work to train a model that’s attempting to replicate you — it makes me sick,” Ortiz tells The Hollywood Reporter.

Ortiz is one of three artists suing AI art generators Stability AI, Midjourney and DeviantArt for using their work to train generative AI systems. The first-of-its-kind suit will test the boundaries of copyright law and could be one of a few cases that decide the legality of the way generative AI models are trained.

On the back of OpenAI’s GPT, Meta’s Llama and Google’s LaMDA, generative AI has had a remarkable year. It has proved a unifying theme on Wall Street as media executives touted AI-themed announcements to woo investors. Endeavor chief executive Ari Emanuel opened his company’s February earnings call with comments generated by an AI firm called Speechify. The chiefs of YouTube, Spotify and BuzzFeed similarly trumpeted plans to deploy the tech.

But behind closed doors, companies are warning that the way most AI systems are built might be illegal. “We may not prevail in any ongoing or future litigation,” states a securities filing issued in June by Adobe. It cites intellectual property disputes that could “subject us to significant liabilities, require us to enter into royalty and licensing agreements on unfavorable terms” and possibly impose “injunctions restricting our sale of products or services.” In March, Adobe unveiled Firefly, an AI image and text generator. Though the first model is trained only on stock images, the company said that future versions will “leverage a variety of assets, technology and training data from Adobe and others.”

Engineers build AI art generators by feeding generative AI models voluminous databases of images downloaded from the internet without licenses. The artists’ suit revolves around the argument that feeding these systems copyrighted works constitutes intellectual property theft. In the absence of regulation placing guardrails around the industry, a finding of infringement in the case may upend how most AI systems are built. If the AI firms are found to have infringed on any copyrights, they may be forced to destroy datasets built from copyrighted works. They also face stiff statutory damages of up to $150,000 for each work willfully infringed.

AI companies maintain that their conduct is protected by fair use, a doctrine that permits unlicensed use of copyrighted works under limited circumstances. The factors that determine whether a use qualifies include the purpose of the use, the degree of similarity, and the impact of the derivative work on the market for the original. Central to the artists’ case is winning the argument that the AI systems don’t make “transformative use” of their works, defined as altering the purpose of the copyrighted work to create something with a new meaning or message.

Responding to the proposed class action from artists, Stability AI countered in a statement that “anyone that believes that this isn’t fair use does not understand the technology and misunderstands the law.”

Eric Goldman, co-director of the High Tech Law Institute at Santa Clara University School of Law, agrees that AI companies likely meet the criteria for fair use. “Nobody who’s complaining today about their work being stolen has gotten there without standing on the shoulders of others,” Goldman says. “People learn from each other.”

He points to precedent greenlighting the copying of works to produce noninfringing outputs. The Authors Guild in 2005 took Google to court for digitizing tens of millions of books to create a search function, in a case closely watched by the Motion Picture Association and practically every labor group representing writers. A federal judge ultimately rejected the copyright infringement claims and found that Google’s use of the authors’ copyrighted works amounted to fair use. Central to the ruling was that Google allowed users to view only snippets of text rather than the full work.

But compared to 2005, when there was more optimism that nascent technology could be deployed to aid industries instead of dismantling them, artists and media executives today take a far grimmer view of big tech and its plans for AI tools. The way people consume news could shift seismically if Google, for example, were to stop sending traffic to publications in favor of answering questions with a chatbot sans attribution. Some media companies, like the Associated Press, have already struck licensing deals, while others, like News Corp, are in talks with AI firms.

Matthew Butterick, a lawyer representing the artists, stresses that AI companies claim to make “completely new material that’s held out as a substitute for the training data” and profit from it, while Google was only making an index of the books that pointed back to the original works. “That’s been the red line in fair use law for a very long time,” he says.

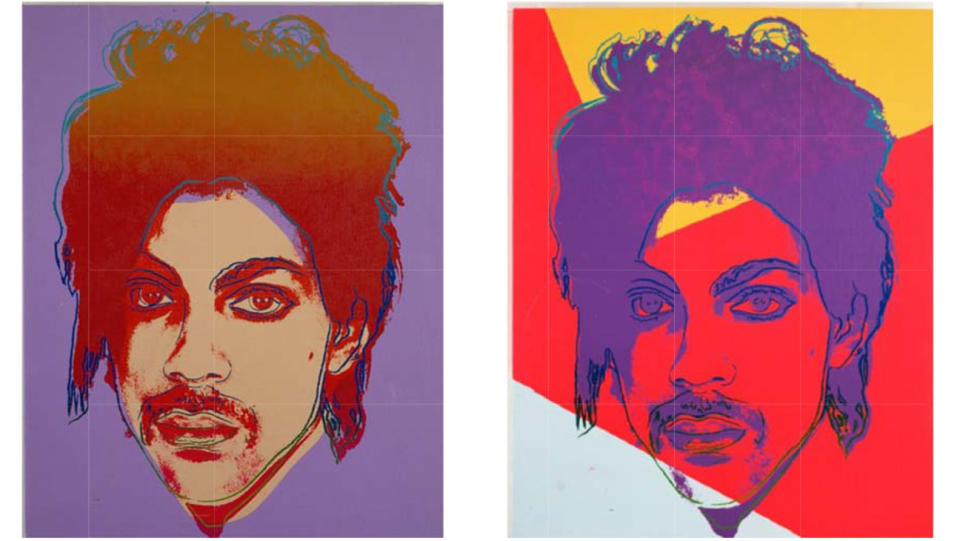

The artists’ argument that AI companies are actively hurting their economic interests by creating competing works on the back of their art could swing the case in their favor. For guidance, they might look to the Supreme Court’s recent decision rejecting a fair use defense in Andy Warhol Foundation for the Visual Arts v. Goldsmith. The 7-2 majority stressed in that case that an analysis of whether the secondary work was sufficiently transformed to protect against copyright infringement must also consider the commercial nature of the use. Fair use is not likely to be found when an original work and a derivative share the “same or highly similar purpose” and the secondary use is commercial, the justices found. Associate Justice Sonia Sotomayor, writing for the majority, noted that ruling the other way would essentially permit artists to make slight alterations to an original photo and sell the result by claiming transformative use.

“The framework set forth in the Warhol majority decision arguably supports the artists’ claim and weighs against fair use,” says Scott Sholder, a lawyer who specializes in intellectual property disputes. “The copying and use of copyrighted works for AI training purposes is a non-transformative commercial use that allows third parties to, effectively on command, create potential market substitutes for their works.”

Notably, Midjourney and other AI art generators allow users to create works “in the style of” other artists, making them potential competitors to the artists whose work they are trained on. Spooked by AI companies indiscriminately crawling the web to scrape art, books and personal data, Ortiz hid her portfolio behind a password-protected page on her personal website. She says the diminished visibility of her work is worth the protection.

But the judge overseeing her suit may not even need to decide the case on fair use, which is typically analyzed on summary judgment before trial. U.S. District Judge William Orrick said in July that he’s “inclined to dismiss almost everything” (with an opportunity to refile the claims) because the artists have not yet pointed to specific examples of works that were infringed upon or of AI-created outputs that infringe on existing copyrights, which is necessary to allege infringement. The AI companies named in the suit argue the artists will not be able to meet this requirement because it’s impossible for their systems to produce exact or near-exact replicas of copyrighted works.

Some firms, however, have warned that their models can spontaneously copy works from their training sets verbatim, without compensation or attribution. GitHub, which is named in another copyright suit, has issued such a warning about Copilot. Midjourney has barred “Afghan Girl” as a prompt after the art generator was found to be producing slightly varied copies of Steve McCurry’s 1984 photo. A prompt for Dorothea Lange’s “Migrant Mother” similarly produces near-identical works, though that photo is in the public domain.

The artists’ case will be decided as courts increasingly drift toward enforcing intellectual property rights and away from prematurely dismissing copyright suits. The 9th U.S. Circuit Court of Appeals last year revived a suit accusing M. Night Shyamalan of infringing on a 2013 independent film to create Servant, concluding that expert testimony and discovery are required to evaluate whether the works are truly similar. The decision was at least the third from a federal appeals court since 2020 reversing a lower court’s decision to dismiss a copyright suit, with the others implicating the first Pirates of the Caribbean movie and The Shape of Water.

Amid this backdrop, some AI companies have turned to licensing data to ward off legal issues. Some artists are in favor of such licensing to earn compensation for their work. Ortiz is not so sure. “This isn’t just about automation, but automation decimating entire industries with your own work,” she says. “That’s why SAG-AFTRA and the WGA are fighting.”

This story first appeared in the Aug. 23 issue of The Hollywood Reporter magazine.