How Big Tech Has Reshaped Modern Warfare in Ukraine

Technology’s increasing role in times of conflict has become more evident by the day with Russia’s invasion of Ukraine. As a result, social platforms are learning some tough lessons in real time and will need to wrestle with applying what they learn in the future.

Weeks after Russia invaded Ukraine, tech companies continue to modify their platform rules on the fly as Russia becomes the most sanctioned country in the world. Within days, major platforms from Facebook to TikTok moved quickly to suspend Russian ads and state-owned media — in turn driving Russia to ban Instagram, Facebook and Twitter — while more changes and exceptions are being made as the war escalates. In the last month, this information war has already resulted in digital vacuums where entertainment, news and communications via social media have virtually vanished within Russia.

“The invasion of Ukraine is setting the stage for all future geopolitical events of this magnitude,” Wasim Khaled, CEO of threat and perception intelligence platform Blackbird.AI, told TheWrap. “And it is clear social media will remain a key territory in the landscape of war.”

First, social platforms have demonstrated that they can intervene much faster than they have on past issues, suggesting that tech leaders are willing to choose a side when cornered. Second, experts contend that platforms need to get much better and faster at detecting imminent threats and conflict instead of being reactive. These tactics and tools will be key in combating misinformation that is at risk of spreading faster than ever in modern warfare. Let’s take a closer look at those takeaways and what they mean for social media companies.

Become more comfortable with the role of publisher

Following the invasion, Meta’s Facebook and Instagram, TikTok, Twitter, Google and others began restricting Russian ads and state-run media outlets, including RT and Sputnik. This stands in contrast to domestic controversies like the fallout from the Jan. 6, 2021, attack on the U.S. Capitol or lies about the 2020 presidential election, where major platforms have banned individual pieces of content rather than suspending actors or organizations entirely.

“The excuse always was, ‘We aren’t the arbiters of truth,’” Subbu Vincent, director of the Markkula Center for Applied Ethics at Santa Clara University, told TheWrap. “That justification does not emerge in the Russia-Ukraine case if you see how [companies] have acted quickly.”

Consider the Capitol insurrection, when right-wing extremists used Facebook and other platforms to spread calls for overthrowing the U.S. government. Watchdog groups and leaked reports have shown how social media companies downplayed their roles in the violence, and it wasn’t until after the Capitol attack that Facebook and Twitter decided to ban former President Donald Trump’s accounts. Meanwhile, extremist groups and misinformation on COVID-19 continued spreading on Facebook as the company ignored its own studies on anti-vaccination content.

In the past, the social networks have mostly erred on the side of caution in content moderation, responding with force only when pressured into making decisions as a publisher. Acting as a publisher means taking considerably more responsibility over their content, whereas staying a platform means they would remain mostly hands off on what people post.

There’s an element of self-interest here for the tech companies, as well, Vincent explained. As the world watches the war unfolding in Ukraine, tech companies want to appear on the right side of history by doing the ethical thing.

“They are seeing consequences for human lives and human agency down the line,” Vincent said. “This is about democratic human agency. … [Companies] are pulling out because the consequences will get worse if they stay in.”

Representatives for Meta, Twitter and TikTok didn’t respond to requests for comment.

Prepare in peacetime, so you’re ready in wartime

Responses in Ukraine have also confirmed that tech giants are fully capable of making hardline pro-democracy decisions — just on their own terms. Whether it’s employing fact-checking teams or hiring an oversight board, tech leaders have plenty of resources to proactively remove misinformation and ensure safety on their platforms “when properly motivated,” Khaled at Blackbird.AI told TheWrap.

“The goal since the onset of the invasion has been to disempower Russian propaganda artists from having another tool in their war chests,” Khaled said. “And while success is being demonstrated on this front, it is being done reactively, which still gives room for error and demonstrates lack of preparedness.”

As the world’s largest social network, Facebook said it has spent more than $13 billion on safety and security efforts since the 2016 U.S. election. Even so, the company failed to get it right, particularly in overseas conflicts like the violence against the predominantly Muslim Rohingya ethnic group in Myanmar. In 2017, as anti-Rohingya content and ethnic violence in Myanmar spread on Facebook, the company failed to detect more than 80% of hate speech that was only later removed. The company cited technical and linguistic challenges, but it wasn’t until the next year that Facebook ramped up hiring Burmese speakers, created human rights policies and banned government officials for the first time.

In Ukraine, there’s concern that earlier propaganda campaigns are still driving current narratives and skewing the public’s perception online, Khaled said. Reports have found that pro-Russia rebel groups used Facebook to recruit fighters and disseminate messages defying sanctions, and TikTok users shared videos of Russian paratroopers during the invasion that were actually recorded in 2015.

“The future of conflict, which is happening now, includes real-time TikTok and Twitter updates, engagement on social media, memeification, influencers swaying public opinion — essentially engagement from everyone, everywhere at every moment in time,” Khaled said.

Some of the tools for tracking distorted information, for example, will become imperative for predicting major events before they happen. The next step is “formulating new playbooks, so when the time comes guesswork and trial and error are not the leading efforts that governments are relying on,” Khaled said.

Blackbird’s intelligence platform, for instance, measures intent behind a disinformation campaign instead of just tracking what’s occurring. The platform has been flagging narratives with Russian disinformation and geopolitical issues to locate where they are propagating and can be manipulated. Increasingly, social platforms will have to develop more of these advanced capabilities in-house or outsource the work in order to stay ahead of potential dangers.

Develop better tools to combat misinformation

As tech companies try to combat misinformation and violent content, they’re also fighting to maintain their services on the ground. But for the most part, they have no blueprint for what to do at this scale. Establishing these standards and industry practices has become critical to anti-misinformation efforts, as has supporting and directly funding journalism.

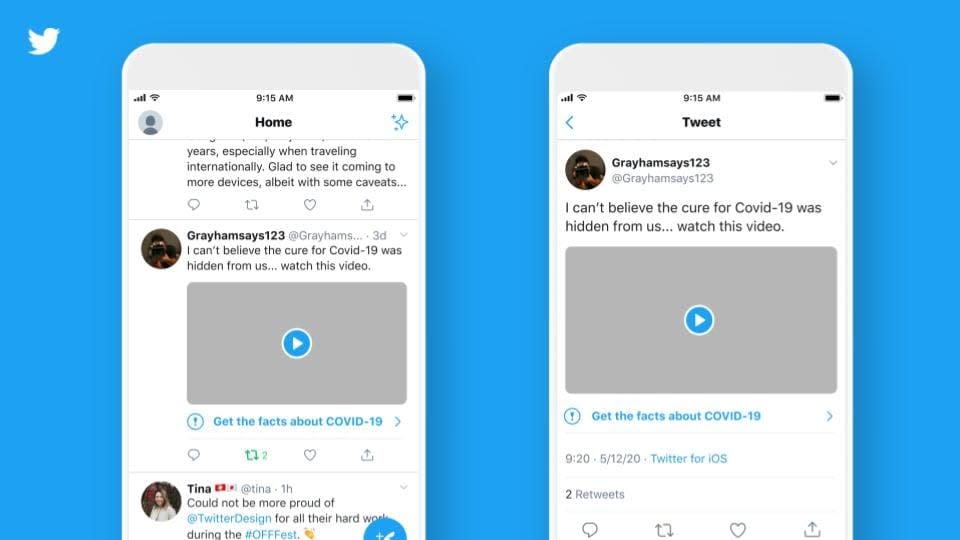

Platforms have to make decisions in real time about information that could blur the lines of reality, such as posts that downplay or mischaracterize the invasion in Ukraine. But it’s clear that tech companies would rather not make those calls on their own, which is evident in how they approached the COVID-19 pandemic. Twitter has added fact-checking labels on COVID misinformation, while Facebook has created an outside fact-checking program with some 80 organizations to review content across its apps.

“Disinformation on social media is blurring the lines of what’s actually happening, making it difficult to distinguish what side is the right side,” Khaled said. “Global conflict is occurring on land and online, and individuals are armed and empowered with smartphones making this event [in Ukraine] unlike any other in history.”

Behind the scenes in Russia, internet censor agency Roskomnadzor this month blocked access to Facebook and Instagram after the company made exceptions to allow content calling for violence against Russians. It’s unclear whether Meta-owned WhatsApp will also get booted, as that app is far more popular in the country than either Facebook or Instagram.

People are also flocking to YouTube to understand the conflict in Ukraine. The site is the world’s second-most visited website (after its parent, Google), according to Hootsuite. In turn, the platform said earlier this month it will remove videos denying, minimizing or trivializing the invasion, adding to its restrictions on Russian state-funded media and channels.

1/ Our Community Guidelines prohibit content denying, minimizing or trivializing well-documented violent events. We are now removing content about Russia’s invasion in Ukraine that violates this policy. https://t.co/TrTnOXtOTU

— YouTubeInsider (@YouTubeInsider) March 11, 2022

“It’s within these companies’ power to hold themselves accountable when combating fake news or else they become vulnerable to public scrutiny and risk losing credibility going forward,” Robin Zieme, chief strategy officer of YouTube ad company Channel Factory, said. “Fake news and misinformation is not only uncomfortable for bystanders, but extremely harmful for the parties impacted.”

Zieme’s firm is a YouTube partner currently working with the Ukraine Ministry of Digital Transformation to identify and block channels and keywords spreading misinformation. They share those lists with brands and media to help them find legitimate sources that counter Russian misinformation like incorrect reports on casualties. “Social media is almost unavoidable, and right now it’s allowing us to watch what is happening in Ukraine from the sidelines,” Zieme said.

This leads us to the looming question of whether actions in Ukraine will influence platforms in future events, such as the midterms or the 2024 presidential election. With Ukraine, entire nations and global groups acted in unison against Russia, enacting sanctions virtually overnight and halting business operations in the region. This made it easier for Western tech giants to defend their choices around ethical concerns, but there are doubts that platforms will make the same calls on future issues that hit closer to home.

As Vincent puts it, “Now that the world is burning, it is easier to make ethical decisions.”