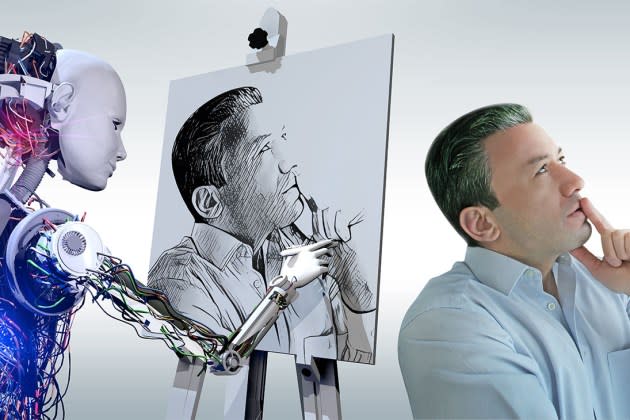

Artists Lose First Round of Copyright Infringement Case Against AI Art Generators

Artists suing generative artificial intelligence art generators have hit a stumbling block in a first-of-its-kind lawsuit over the uncompensated and unauthorized use of billions of images downloaded from the internet to train AI systems, after a federal judge dismissed most of their claims.

U.S. District Judge William Orrick on Monday found that copyright infringement claims cannot move forward against Midjourney and DeviantArt, concluding the accusations are “defective in numerous respects.” Among the issues are whether the AI systems they run on actually contain copies of copyrighted images that were used to create infringing works, and whether the artists can substantiate infringement in the absence of identical material created by the AI tools. Claims against the companies for infringement, right of publicity, unfair competition and breach of contract were dismissed, though they will likely be reasserted.

Notably, a claim for direct infringement against Stability AI was allowed to proceed based on allegations the company used copyrighted images without permission to create Stable Diffusion. Stability has denied the contention that it stored and incorporated those images into its AI system. It maintains that training its model does not include wholesale copying of works but rather involves development of parameters — like lines, colors, shades and other attributes associated with subjects and concepts — from those works that collectively define what things look like. The issue, which may decide the case, remains contested.

The litigation revolves around Stability’s Stable Diffusion, which is incorporated into the company’s AI image generator DreamStudio. In this case, the artists will have to establish that their works were used to train the AI system. It’s alleged that DeviantArt’s DreamUp and Midjourney are powered by Stable Diffusion. A major hurdle artists face is that training datasets are largely a black box.

In his dismissal of infringement claims, Orrick wrote that plaintiffs’ theory is “unclear” as to whether there are copies of training images stored in Stable Diffusion that are utilized by DeviantArt and Midjourney. He pointed to the defense’s arguments that it’s impossible for billions of images “to be compressed into an active program,” like Stable Diffusion.

“Plaintiffs will be required to amend to clarify their theory with respect to compressed copies of Training Images and to state facts in support of how Stable Diffusion — a program that is open source, at least in part — operates with respect to the Training Images,” stated the ruling.

Orrick questioned whether Midjourney and DeviantArt, which offer use of Stable Diffusion through their own apps and websites, can be liable for direct infringement if the AI system “contains only algorithms and instructions that can be applied to the creation of images that include only a few elements of a copyrighted” work.

The judge stressed the absence of allegations that the companies played an affirmative role in the alleged infringement. “Plaintiffs need to clarify their theory against Midjourney — is it based on Midjourney’s use of Stable Diffusion, on Midjourney’s own independent use of Training Images to train the Midjourney product, or both?” Orrick wrote.

According to the order, the artists will also likely have to show proof of infringing works produced by AI tools that are identical to their copyrighted material. This potentially presents a major issue because they have conceded that “none of the Stable Diffusion output images provided in response to a particular Text Prompt is likely to be a close match for any specific image in the training data.”

“I am not convinced that copyright claims based [on] a derivative theory can survive absent ‘substantial similarity’ type allegations,” the ruling stated.

Though defendants made a “strong case” that the claim should be dismissed without giving the artists an opportunity to replead it, Orrick noted the artists’ contention that AI tools can create material similar enough to their work to be mistaken for fakes of it.

Claims for vicarious infringement, violations of the Digital Millennium Copyright Act for removal of copyright management information, right of publicity, breach of contract and unfair competition were similarly dismissed.

“Plaintiffs have been given leave to amend to clarify their theory and add plausible facts regarding ‘compressed copies’ in Stable Diffusion and how those copies are present (in a manner that violates the rights protected by the Copyright Act) in or invoked by the DreamStudio, DreamUp, and Midjourney products offered to third parties,” Orrick wrote. “That same clarity and plausible allegations must be offered to potentially hold Stability vicariously liable for the use of its product, DreamStudio, by third parties.”

Regarding the right of publicity claim, which takes issue with defendants profiting off of plaintiffs’ names by allowing users to request art in their style, the judge stressed that there’s not enough information supporting arguments that the companies used artists’ identities to advertise products.

Two of the three artists who filed the lawsuit have dropped their infringement claims because they didn’t register their work with the copyright office before suing. The copyright claims will be limited to artist Sarah Anderson’s works, which she has registered. As proof that Stable Diffusion was trained on her material, Anderson relied on the results of a search of her name on haveibeentrained.com, which allows artists to discover if their work has been used in AI model training and offers an opt-out to help prevent further unauthorized use.

“While defendants complain that Anderson’s reference to search results on the ‘haveibeentrained’ website is insufficient, as the output pages show many hundreds of works that are not identified by specific artists, defendants may test Anderson’s assertions in discovery,” the ruling stated.

Stability, DeviantArt and Midjourney didn’t respond to requests for comment.

On Monday, President Joe Biden issued an executive order to create some safeguards against AI. While it mostly focuses on reporting requirements over the national security risks some companies’ systems present, it also recommends the watermarking of photos, video and audio developed by AI tools to protect against deepfakes. Biden, at a signing of the order, stressed the technology’s potential to “smear reputations, spread fake news and commit fraud.”

The Human Artistry Campaign said in a statement, “The inclusion of copyright and intellectual property protection in the AI Executive Order reflects the importance of the creative community and IP-powered industries to America’s economic and cultural leadership.”

At a meeting in July, leading AI companies voluntarily agreed to guardrails to manage the risks posed by the emerging technology in a bid by the White House to get the industry to regulate itself in the absence of legislation instituting limits around the development of the new tools. Like the executive order issued by Biden, it was devoid of any kind of reporting regime or timeline that could legally bind the firms to their commitments.